Support

How to install a custom SSL certificate

The msa_front container is installed with a default self-signed certificate so that you can connect to https://localhost over SSL. This is fine for most lab trials, but you may want to install your own certificate so that clients can verify the connection to your setup.

Prerequisites

To install your certificate, you need the certificate file (server.crt) and the associated private key (server.key).
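Before installing, it can be worth checking that the private key really belongs to the certificate. The sketch below assumes openssl is available on the Docker host and that the key is not passphrase-protected; the function name cert_matches_key is illustrative and not part of the MSactivator™ tooling.

```shell
# Compare the public key embedded in the certificate with the public key
# derived from the private key. They are identical for a matching pair.
# Returns 0 on match, 1 on mismatch, 2 if either file cannot be read.
cert_matches_key() {
  local crt_pub key_pub
  crt_pub=$(openssl x509 -in "$1" -noout -pubkey 2>/dev/null) || return 2
  key_pub=$(openssl pkey -in "$2" -pubout 2>/dev/null) || return 2
  [ "$crt_pub" = "$key_pub" ]
}

# Usage:
#   cert_matches_key server.crt server.key && echo "certificate and key match"
```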

Installation

Update the docker-compose file

To keep the certificate when the container image is updated, you first need to update the docker-compose file.

Edit the file docker-compose.yml: add msa_front to the top-level volumes section, then add the following to the msa_front service:

volumes:

- "msa_front:/etc/nginx/ssl"

Restart your setup with docker-compose up -d.

If you are running a Docker swarm, you only need to update the msa_front service with

volumes:

- "/mnt/NASVolume/msa_front:/etc/nginx/ssl"

Note: you need to create the directory /mnt/NASVolume/msa_front manually first.

Install the certificate

- Copy the two files server.crt and server.key to your Docker host.

- Copy the two files into the front container (docker cp accepts one source path per call):

docker cp server.crt $(docker ps | grep msa_front | awk '{print $1}'):/etc/nginx/ssl/

docker cp server.key $(docker ps | grep msa_front | awk '{print $1}'):/etc/nginx/ssl/

- Force-recreate the front container so that it picks up the new certificate:

docker service update --force FRONT_SERVICE_NAME

- Browse to https://localhost (you may have to clear your browser cache).
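The copy step above can be wrapped in a small helper. This is only a sketch: the function name install_front_cert is hypothetical, and it assumes a single running container whose name contains msa_front.

```shell
# Copy a certificate and its private key into the running msa_front container.
# docker cp takes exactly one source path, so each file is copied separately.
install_front_cert() {
  local crt=$1 key=$2 cid
  # Look up the container ID by name filter; keep only the first match.
  cid=$(docker ps -q -f name=msa_front | head -n 1)
  if [ -z "$cid" ]; then
    echo "no running msa_front container found" >&2
    return 1
  fi
  docker cp "$crt" "$cid:/etc/nginx/ssl/" &&
    docker cp "$key" "$cid:/etc/nginx/ssl/"
}

# Usage:
#   install_front_cert server.crt server.key
```

The front container still has to be recreated afterwards, as described in the steps above.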

How to support TFTP for managed entity operations

If a managed entity uses the TFTP protocol during a backend operation, such as a restore, an MSA variable must be set to the IP address of the MSactivator™. The msa_sms container must then be restarted.

POST https://localhost/ubi-api-rest/system-admin/v1/msa_vars

[

{ "name": "UBI_SYS_EQUIPMENTS_IP", "comment": "", "value": "<MSA_IP>" }

]

Then restart the msa_sms container. On a standalone setup:

docker-compose restart msa_sms

On an HA setup:

docker service update --force ha_msa_sms
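The POST request above could be issued with curl along these lines. This is a sketch under assumptions: the function names are hypothetical, and AUTH_HEADER is a placeholder for whatever Authorization header your MSactivator™ API expects, since authentication is not covered in this section.

```shell
# Build the JSON body for the msa_vars call. $1 is the MSactivator IP address.
tftp_var_payload() {
  printf '[{"name": "UBI_SYS_EQUIPMENTS_IP", "comment": "", "value": "%s"}]\n' "$1"
}

# POST the variable to the API.
# AUTH_HEADER is a hypothetical placeholder, e.g. "Authorization: <your credentials>".
# -k skips certificate verification, which is needed while the default
# self-signed certificate is still installed.
set_tftp_ip() {
  curl -k -sS -X POST \
    -H "Content-Type: application/json" \
    -H "$AUTH_HEADER" \
    -d "$(tftp_var_payload "$1")" \
    "https://localhost/ubi-api-rest/system-admin/v1/msa_vars"
}

# Usage:
#   AUTH_HEADER="Authorization: ..." set_tftp_ip "<MSA_IP>"
```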

Troubleshooting

Containers / Docker Engine

Install the MSactivator™ behind a proxy

Often the MSactivator™ has to be installed on a host that sits behind a proxy. This requires some specific configuration on the host, on Docker, and on the MSactivator™ containers themselves:

Proxy settings on the host OS: the proxy needs to be specified and used at the OS level in order to download the necessary Docker packages as well as docker-compose onto the machine. These settings differ depending on the OS in use.

Proxy settings for Docker: once installed, Docker does not inherit the proxy settings from the host machine. They need to be specified in Docker's configuration file so that the necessary MSactivator™ images can be downloaded.

Along with that, the "no proxy" option should be specified for the internal container-to-container communication. Failing to do so will result in all the traffic being routed through the proxy.

The easiest way to do that is to edit the file docker-compose.yml and add the following to the service msa_dev:

environment:

http_proxy: "<PROXY URL>"

https_proxy: "<PROXY URL>"

no_proxy: "localhost,127.0.0.1,linux_me_2,linux_me,msa_cerebro,camunda,msa_alarm,db,msa_sms,quickstart_msa_ai_ml_1,msa_ui,msa_dev,msa_bud,msa_kibana,msa_es,msa_front,msa_api"
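Rather than typing the no_proxy list by hand, it can be derived from the service names in your compose file. The helper below is a sketch; build_no_proxy is a hypothetical name, and the result covers service names only, so container names such as quickstart_msa_ai_ml_1 would still have to be added manually.

```shell
# Read one service name per line on stdin and print a comma-separated
# no_proxy value that always starts with localhost and 127.0.0.1.
build_no_proxy() {
  local list="localhost,127.0.0.1" name
  # The extra test handles a last line that has no trailing newline.
  while IFS= read -r name || [ -n "$name" ]; do
    if [ -n "$name" ]; then
      list="$list,$name"
    fi
  done
  printf '%s\n' "$list"
}

# Usage, from the working directory:
#   docker-compose config --services | build_no_proxy
```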

If your proxy also rewrites the SSL certificates, you will face errors such as the following when installing the MSactivator™:

fatal: unable to access 'https://github.com/openmsa/Workflows.git/': Peer's Certificate issuer is not recognized.

To solve this, you also need to add the following to the msa_dev environment:

GIT_SSL_NO_VERIFY: "true"
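For the Docker proxy settings mentioned earlier in this section, one common approach on hosts where Docker runs under systemd is a drop-in file for the docker service. The sketch below writes such a file; the function name is hypothetical, and the directory is passed as a parameter so that the result can be inspected before it is put in place.

```shell
# Write a systemd drop-in that passes proxy settings to the Docker daemon.
# $1 = drop-in directory, $2 = proxy URL, $3 = NO_PROXY value
write_docker_proxy_dropin() {
  mkdir -p "$1" || return 1
  cat > "$1/http-proxy.conf" <<EOF
[Service]
Environment="HTTP_PROXY=$2"
Environment="HTTPS_PROXY=$2"
Environment="NO_PROXY=$3"
EOF
}

# Usage (as root), then reload systemd and restart Docker:
#   write_docker_proxy_dropin /etc/systemd/system/docker.service.d "<PROXY URL>" "localhost,127.0.0.1"
#   systemctl daemon-reload && systemctl restart docker
```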

How do I check that the Docker containers are deployed?

On a Linux system, from the directory where you ran the docker-compose up -d command (the working directory), run docker-compose images.

$ docker-compose images

Container Repository Tag Image Id Size

----------------------------------------------------------------------------------------------------------------------------

quickstart_camunda_1 camunda/camunda-bpm-platform 7.13.0 9c773d0a9146 257.7 MB

quickstart_db_1 ubiqube/msa2-db c1aa0013c4d8b8c39682034a23d395be8e4d8547 48151f3aa621 158.2 MB

quickstart_linux_me_1 quickstart_linux_me latest 3d0eb1ca1738 533.1 MB

quickstart_msa_api_1 ubiqube/msa2-api 6068794aa30160fb5696bb5c96253a4b1fb3536b 4f1ff5300692 1.179 GB

quickstart_msa_bud_1 ubiqube/msa2-bud 43ee454c22b9ed217dac6baba9a88a345a5422c2 d0b6a867c236 725.9 MB

quickstart_msa_cerebro_1 lmenezes/cerebro 0.9.2 cd2e90f84636 268.2 MB

quickstart_msa_dev_1 quickstart_msa_dev latest 704b5286200a 521.2 MB

quickstart_msa_es_1 ubiqube/msa2-es 037a2067826b36e646b45e5a148431346f62f3a6 f99566a82028 862.6 MB

quickstart_msa_front_1 ubiqube/msa2-front 03f833a9c34c8740256162dee5cc0ccd39e6d4ef 0557476a3f74 28.91 MB

quickstart_msa_sms_1 ubiqube/msa2-sms 0107cbf1ac1f1d2067c69a76b107e93f9de9cbd7 e263e741f926 759.5 MB

quickstart_msa_ui_1 ubiqube/msa2-ui 47731007fb487aac69d15678c87c8156903d9f51 0f370eab1fe6 40.66 MB

- msa_front: runs the NGINX web server

- msa_api: runs the API

- msa_sms: runs the CoreEngine daemons

- msa_ui: runs the UI

- db: runs the PostgreSQL database

- camunda: runs the BPM

- es: runs the Elasticsearch server

- bud: runs the batchupdated daemon

Verify that all the containers are up.

This will also show you the network port mapping.

$ docker-compose ps

Name Command State Ports

----------------------------------------------------------------------------------------------------------------------------------

quickstart_camunda_1 /sbin/tini -- ./camunda.sh Up 8000/tcp, 8080/tcp, 9404/tcp

quickstart_db_1 docker-entrypoint.sh postg ... Up 5432/tcp

quickstart_linux_me_1 /sbin/init Up 0.0.0.0:2224->22/tcp

quickstart_msa_api_1 /opt/jboss/wildfly/bin/sta ... Up 8080/tcp

quickstart_msa_bud_1 /docker-entrypoint.sh Up

quickstart_msa_cerebro_1 /opt/cerebro/bin/cerebro - ... Up 0.0.0.0:9000->9000/tcp

quickstart_msa_dev_1 /sbin/init Up

quickstart_msa_es_1 /usr/local/bin/docker-entr ... Up 9200/tcp, 9300/tcp

quickstart_msa_front_1 /docker-entrypoint.sh ngin ... Up 0.0.0.0:443->443/tcp, 0.0.0.0:514->514/udp, 0.0.0.0:80->80/tcp

quickstart_msa_sms_1 /docker-entrypoint.sh Up 0.0.0.0:69->69/tcp

quickstart_msa_ui_1 /docker-entrypoint.sh ./st ... Up 80/tcp

User Interface

I can’t log in to the user interface (UI)

Here is a set of useful CLI commands that you can run from the working directory.

Check the status of the database:

$ sudo docker-compose exec db pg_isready

/var/run/postgresql:5432 - accepting connections

Monitor the logs of the API server. Run the command below, try to log in, and report any error from the logs:

docker-compose exec msa_api tail -F /opt/jboss/wildfly/standalone/log/server.log

If the API server is not responding, or if you still can’t log in after a few minutes, run the command below to restart the API server:

$ docker-compose restart msa_api

Restarting quickstart_msa_api_1 ... done

Then monitor the logs as explained above.

Wildfly startup failure fatal KILL command

If Wildfly fails to start with an error similar to fatal KILL command, then you probably haven’t allocated enough memory to your Docker engine.
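To see how much memory the Docker engine actually has, and whether a container was killed for running out of it, something like the following may help. The function names are hypothetical, and no particular memory threshold is implied here.

```shell
# Print the total memory available to the Docker engine, in GiB.
docker_mem_gib() {
  docker info --format '{{.MemTotal}}' |
    awk '{ printf "%.1f\n", $1 / 1073741824 }'
}

# Print "true" if the given container was stopped by the kernel OOM killer.
was_oom_killed() {
  docker inspect --format '{{.State.OOMKilled}}' "$1"
}

# Usage:
#   docker_mem_gib
#   was_oom_killed quickstart_msa_api_1
```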

The above steps should resolve common reasons why the MSactivator™ is not functioning properly. If there is something that is still not working properly, then please contact UBiqube.

CoreEngine

CoreEngine not starting or restart fails

If the CoreEngine doesn’t (re)start properly, for instance when running

docker-compose exec msa_sms restart

you can check the CoreEngine configuration logs:

docker-compose exec msa_sms cat /opt/sms/logs/configure.log

How do I enable debug logs on the CoreEngine?

The CoreEngine logs are available on the msa_sms container in the directory /opt/sms/logs.

If you are designing a Microservice or simply operating the MSactivator™, you might need to monitor the logs of the configuration engine.

Run the CLI command below to tail the logs:

$ sudo docker-compose exec msa_sms tail -F /opt/sms/logs/smsd.log

By default, DEBUG logs are not enabled.

To enable the DEBUG mode, you need to connect to the msa_sms container and execute the CLI command tstsms SETLOGLEVEL 255 255:

$ sudo docker-compose exec msa_sms bash

[root@msa /]# tstsms SETLOGLEVEL 255 255

OK

[root@msa /]#

This will activate the DEBUG mode until the service is restarted.

Execute tstsms SETLOGLEVEL 255 0 to revert to the default log level.

Note: this will only enable DEBUG mode for the configuration engine (smsd.log).
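If you toggle DEBUG mode often, the two tstsms calls can be wrapped in a helper. This is a sketch: set_sms_debug is a hypothetical name, and the service name defaults to msa_sms as listed by docker-compose ps, so adjust SMS_SERVICE if your setup names it differently.

```shell
# Compose command and CoreEngine service name; both can be overridden.
COMPOSE=${COMPOSE:-docker-compose}
SMS_SERVICE=${SMS_SERVICE:-msa_sms}

# Turn DEBUG logging of the configuration engine on or off.
set_sms_debug() {
  local level
  case "$1" in
    on)  level=255 ;;
    off) level=0 ;;
    *)   echo "usage: set_sms_debug on|off" >&2; return 2 ;;
  esac
  # -T disables pseudo-TTY allocation so the call also works from scripts.
  # $COMPOSE is left unquoted on purpose so it may hold a multi-word command.
  $COMPOSE exec -T "$SMS_SERVICE" tstsms SETLOGLEVEL 255 "$level"
}

# Usage:
#   set_sms_debug on
#   set_sms_debug off
```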

How do I permanently enable debug logs on the CoreEngine modules?

The CoreEngine is in charge of configuration, but also of monitoring, syslog collection, syslog parsing, alerting, and more.

The debug mode can also be enabled permanently for the various modules of the CoreEngine.

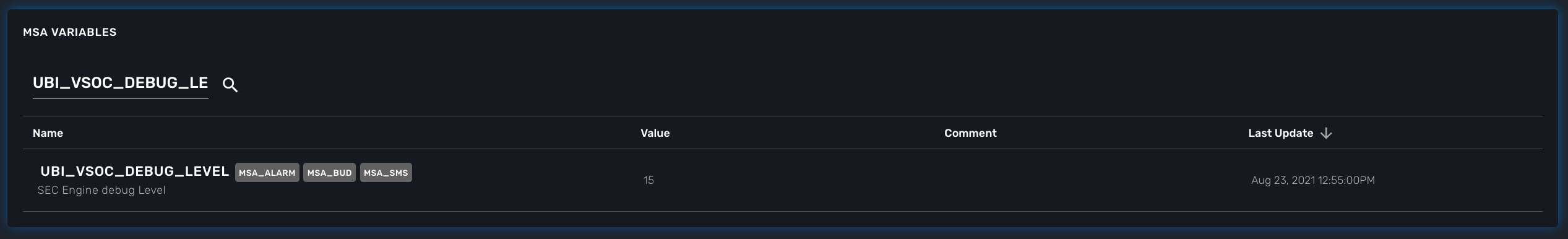

To set the CoreEngine logs to debug mode, you need to set the global setting UBI_VSOC_DEBUG_LEVEL to 15 (the default value is 0).

Restart the containers below for this setting to take effect:

docker container restart $(docker ps -q -f name=msa_alarm) $(docker ps -q -f name=msa_bud) $(docker ps -q -f name=msa_sms)

Monitor the configuration logs

docker-compose exec msa_sms tail -F /opt/sms/logs/smsd.log

Monitor the SNMP monitoring logs

docker-compose exec msa_sms tail -F /opt/sms/logs/sms_polld.log

Monitor the syslog parser logs

docker-compose exec msa_sms tail -F /opt/sms/logs/sms_parserd.log

Monitor the syslog collecting logs

docker-compose exec msa_sms tail -F /opt/sms/logs/sms_syslogd.log

Note: for monitoring, syslogs, and the parser, enabling the DEBUG logs may result in a huge volume of logs, so you need to use this carefully. To revert the configuration, set UBI_VSOC_DEBUG_LEVEL to 1 instead of 15.
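The four log files above can be looked up by module with a small helper. The module labels (config, polling, parser, syslog) and the function name are hypothetical conveniences; only the file paths come from this page.

```shell
# Map a CoreEngine module label to its log file inside the msa_sms container.
sms_log_path() {
  case "$1" in
    config)  echo /opt/sms/logs/smsd.log ;;
    polling) echo /opt/sms/logs/sms_polld.log ;;
    parser)  echo /opt/sms/logs/sms_parserd.log ;;
    syslog)  echo /opt/sms/logs/sms_syslogd.log ;;
    *)       echo "unknown module: $1" >&2; return 1 ;;
  esac
}

# Usage, from the working directory:
#   docker-compose exec msa_sms tail -F "$(sms_log_path parser)"
```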

Monitor the alarm logs

The Alarm module logs are available on the msa_alarm container in the directory /opt/alarm/logs.

docker-compose exec msa_alarm tail -F /opt/alarm/logs/sms_alarmd.log