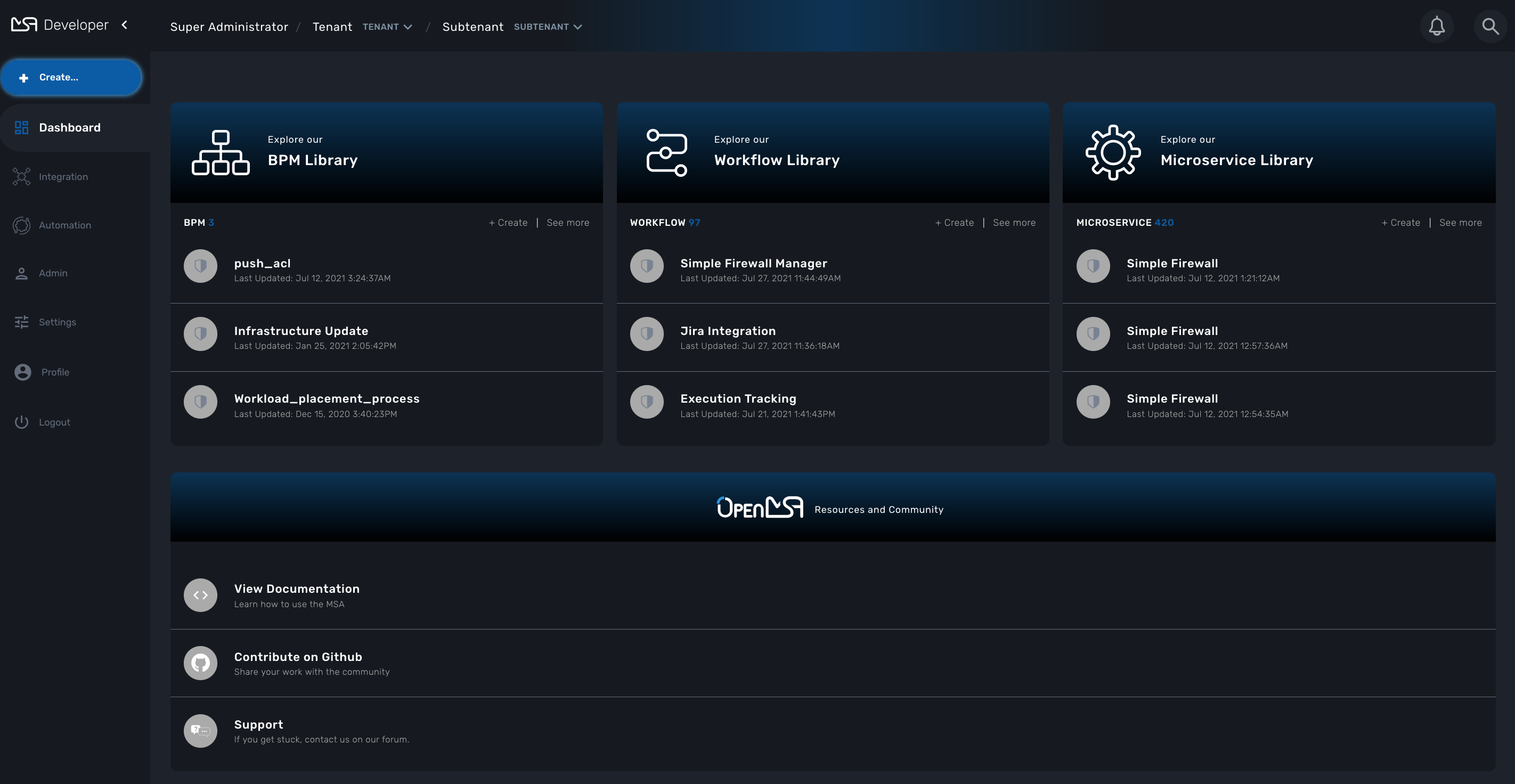

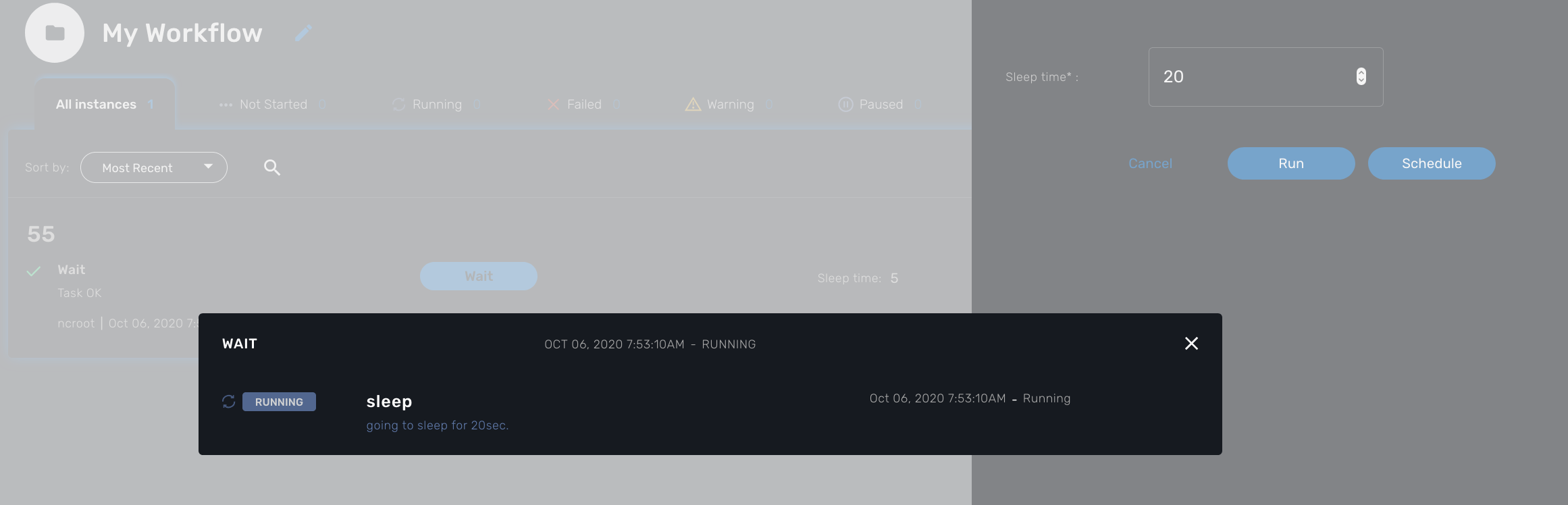

This documentation contains a set of tutorials and examples to help you get familiar with the MSactivator™ as a development platform.

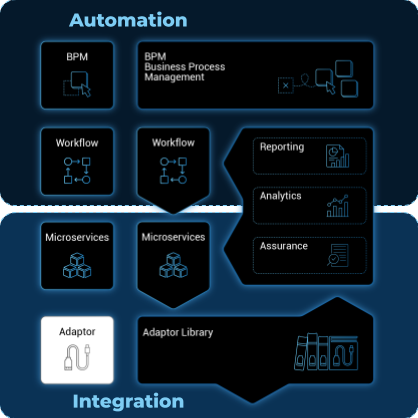

Developer Portal

The developer portal provides the tools to design, develop and test automated and integrated processes.

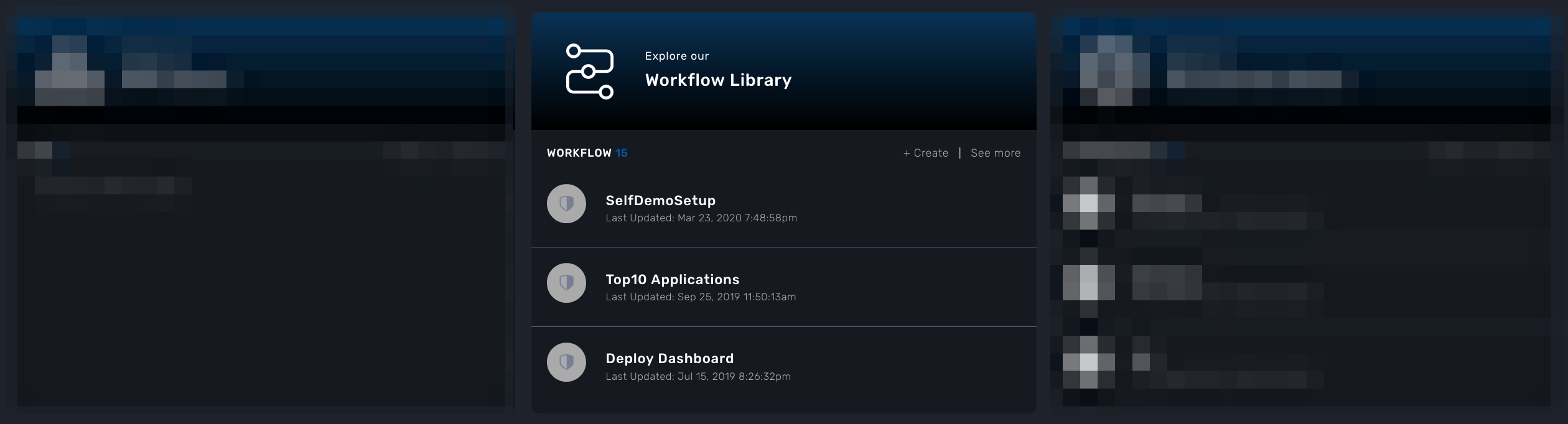

After connecting to the developer portal you will see the 3 swimlanes for the automation and integration provided by the MSactivator™.

You can either start creating new libraries or view and edit the existing ones.

BPM Editor

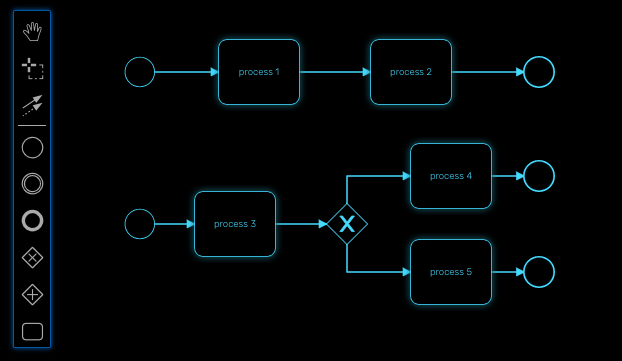

The BPM editor is a web based UI for designing BPM processes.

Overview

The MSactivator™ provides a web based user interface editor for designing BPMs (Business Process Models).

BPMs sit at the top of the automation layer, and the editor lets you create them without writing code.

BPM design

To create a new BPM from the developer portal, click on "+ Create" from the swimlane "BPM Library."

You can also browse through the existing BPM by clicking on "See more".

| you need to select a sub-tenant to see the BPM. |

BPMN elements

The MSactivator™ BPM engine supports the following BPMN elements:

| Bear in mind that a gateway is not a task! You have to determine facts and needs before reaching a gateway. |

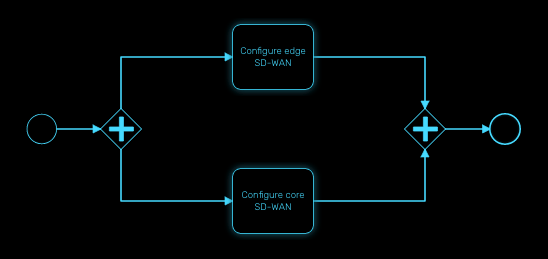

Parallel gateway (AND)

Gateways can also be used to model concurrency in a process.

The most straightforward gateway to introduce concurrency in a process model is the Parallel Gateway, which allows forking into multiple paths of execution or joining multiple incoming paths of execution.

The functionality of the parallel gateway is based on the incoming and outgoing sequence flow(s):

- fork: all outgoing sequence flows are followed in parallel, creating one concurrent execution for each sequence flow.

- join: all concurrent executions arriving at the parallel gateway wait at the gateway until an execution has arrived for each of the incoming sequence flows. Then the process continues past the joining gateway.
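The fork and join rules above can be illustrated with a small, self-contained simulation. This is only a sketch of the semantics described here, not the MSactivator™ engine: the `ParallelGateway` class and its `arrive` method are hypothetical names introduced for the example.

```python
class ParallelGateway:
    """Toy model of a parallel (AND) gateway; hypothetical, for illustration only."""

    def __init__(self, incoming, outgoing):
        self.incoming = set(incoming)   # names of the incoming sequence flows
        self.outgoing = list(outgoing)  # names of the outgoing sequence flows
        self.arrived = set()            # incoming flows that already delivered an execution

    def arrive(self, flow):
        """Register an execution arriving on `flow`.

        Returns the outgoing flows to start once every incoming flow has
        arrived (join then fork), otherwise an empty list (the execution waits).
        """
        if flow not in self.incoming:
            raise ValueError(f"unknown incoming flow: {flow}")
        self.arrived.add(flow)
        if self.arrived == self.incoming:
            self.arrived.clear()
            return list(self.outgoing)
        return []


gateway = ParallelGateway(incoming=["a", "b"], outgoing=["x", "y", "z"])
first = gateway.arrive("a")   # still waiting for an execution on "b"
second = gateway.arrive("b")  # join complete: one execution per outgoing flow
print(first, second)
```

The gateway in the example has two incoming and three outgoing sequence flows: it waits for both incoming executions, then starts one execution per outgoing flow.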

Note that a parallel gateway does not need to be ‘balanced’ (i.e., a matching number of incoming/outgoing sequence flows for corresponding parallel gateways). A parallel gateway will simply wait for all incoming sequence flows and create a concurrent path of execution for each outgoing sequence flow, not influenced by other constructs in the process model. So, the following process is legal in MSactivator™:

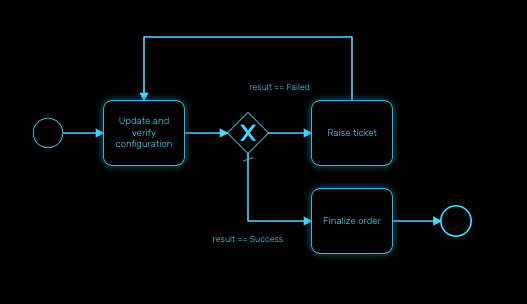

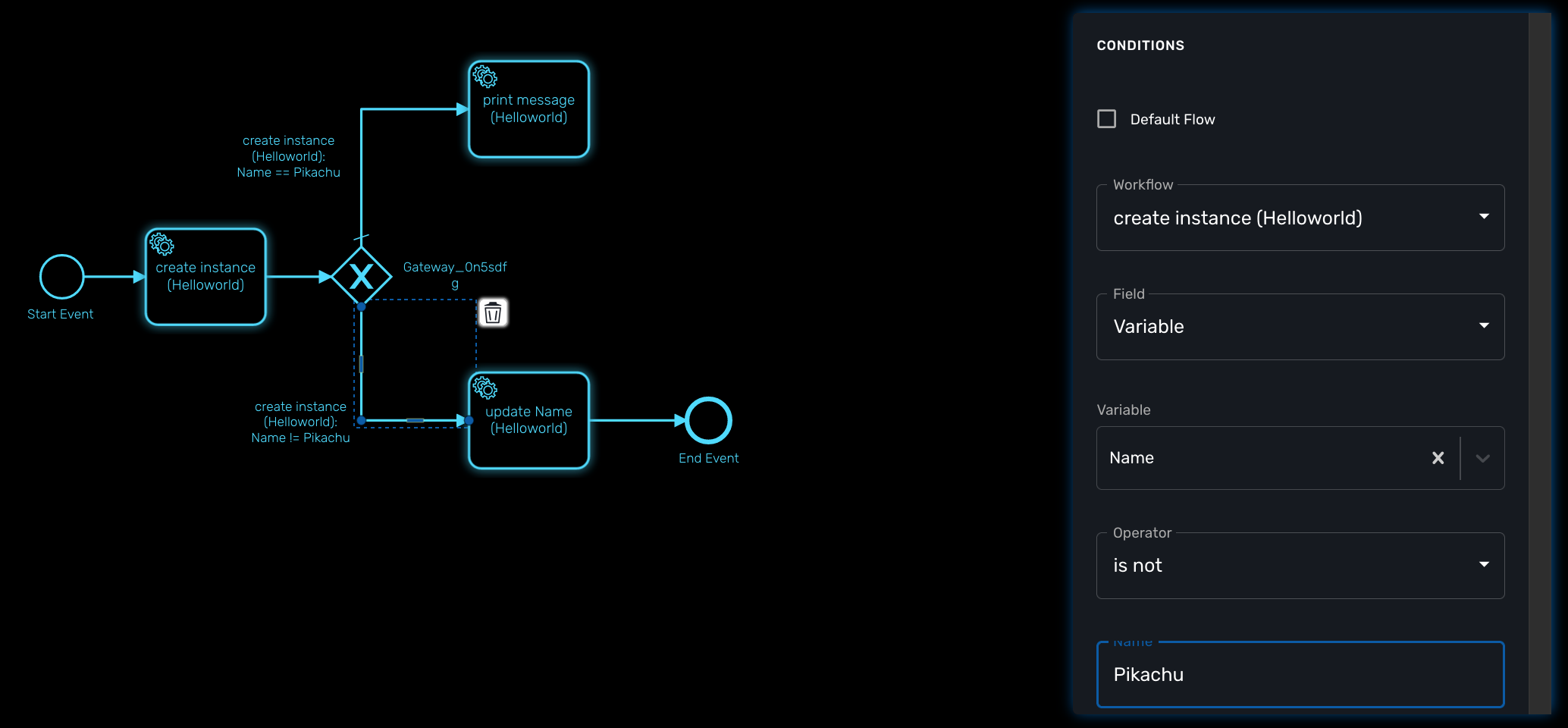

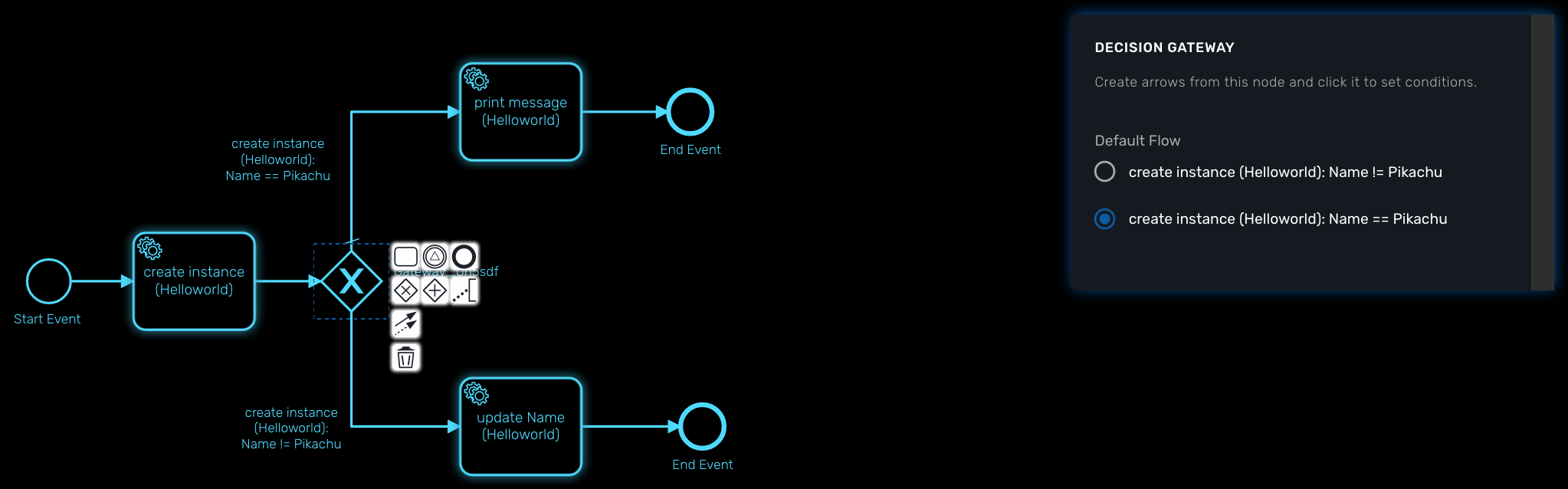

Decision gateway (XOR)

The XOR gateway will let you model a decision in the process.

When the execution arrives at this decision gateway, all outgoing sequence flows are evaluated in the order in which they have been defined. The sequence flow whose condition evaluates to ‘true’ is selected for continuing the process.

To configure the gateway, you need to select the outbound link from the gateway, choose the inbound task and configure the condition to transition to the next BPM task.

Configure a decision gateway

To configure a decision gateway, you need to configure each of its outbound links and select one of the outbound links to be the default flow.

Default flow

Click on the decision gateway and select the default flow, which the process follows when no condition matches the result of the inbound task.

Workflow

For each outbound link, select the inbound workflow that will be used as the condition source for the link.

Field

Select the field to use to test the condition. Field can be "Variable" or "Result Status".

With "Variable", you can choose one of the workflow variables and test its value for the decision.

With "Result Status", the decision will depend on the execution status of the workflow.
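The evaluation order and the default flow can be sketched in a few lines of Python. This is an illustration under assumptions, not the engine's implementation: the `select_outbound_link` function, the dictionary layout of a link, the equality test, and the reuse of the workflow status names as "Result Status" values are all hypothetical choices made for the example.

```python
def select_outbound_link(links, default_target, workflow_result):
    """Return the target of the first link whose condition is true.

    links: list of dicts in definition order (hypothetical structure).
    workflow_result: {"status": ..., "variables": {...}} of the inbound workflow.
    """
    for link in links:
        if link["field"] == "Result Status":
            actual = workflow_result["status"]
        else:  # "Variable": test the value of one workflow variable
            actual = workflow_result["variables"].get(link["variable"])
        if actual == link["expected"]:
            return link["target"]
    # No condition matched: follow the default flow.
    return default_target


links = [
    {"field": "Result Status", "expected": "FAILED", "target": "Rollback"},
    {"field": "Variable", "variable": "protocol", "expected": "ICMP", "target": "Skip port"},
]
result = {"status": "ENDED", "variables": {"protocol": "TCP"}}
chosen = select_outbound_link(links, "Configure port", result)
print(chosen)
```

In this run no condition matches, so the default flow is selected.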

Design a BPM process

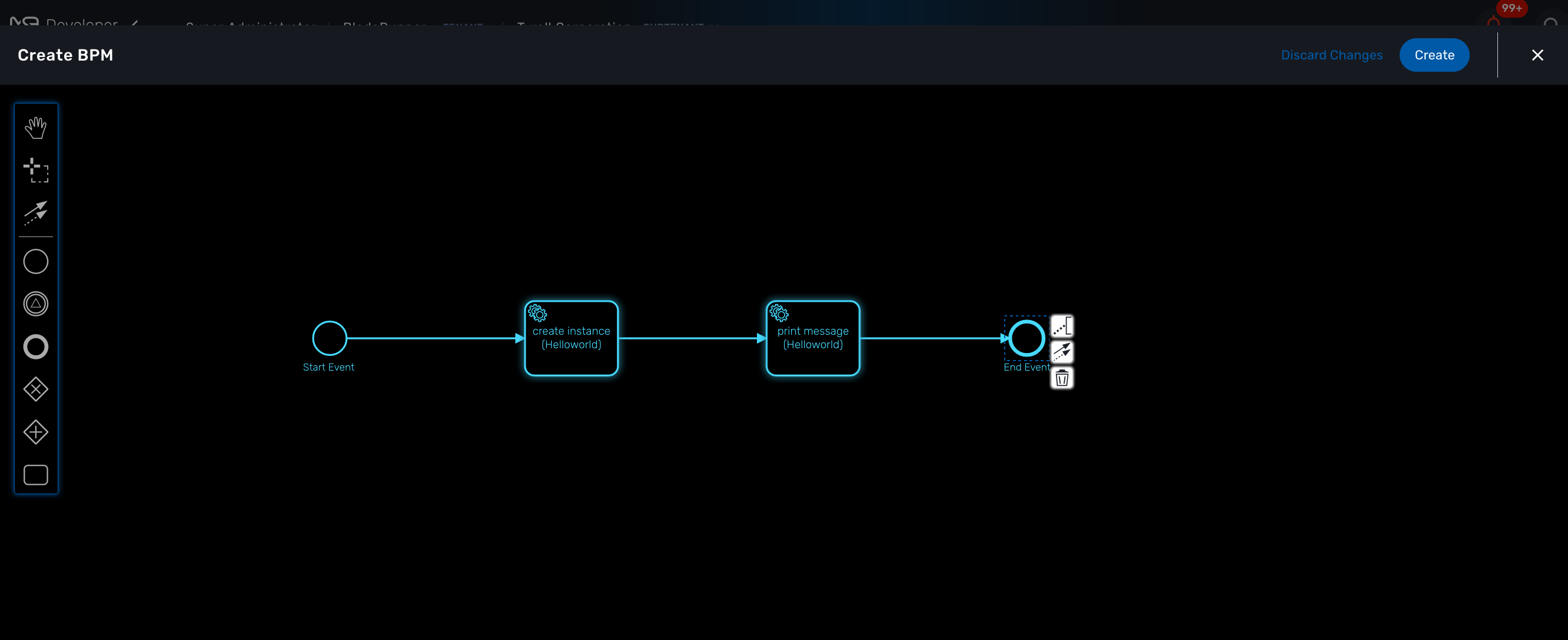

Create a new BPM

Click on "Create" to open a new BPM in the editor.

Use your mouse to add tasks and link them together.

| A BPM process must have a StartEvent and an EndEvent. |

| Only one executable BPM process can be specified in a BPM definition. |

You can save your BPM at any time and edit it later. When you save your BPM, you need to select a sub-tenant.

| A BPM is associated to a single sub-tenant. |

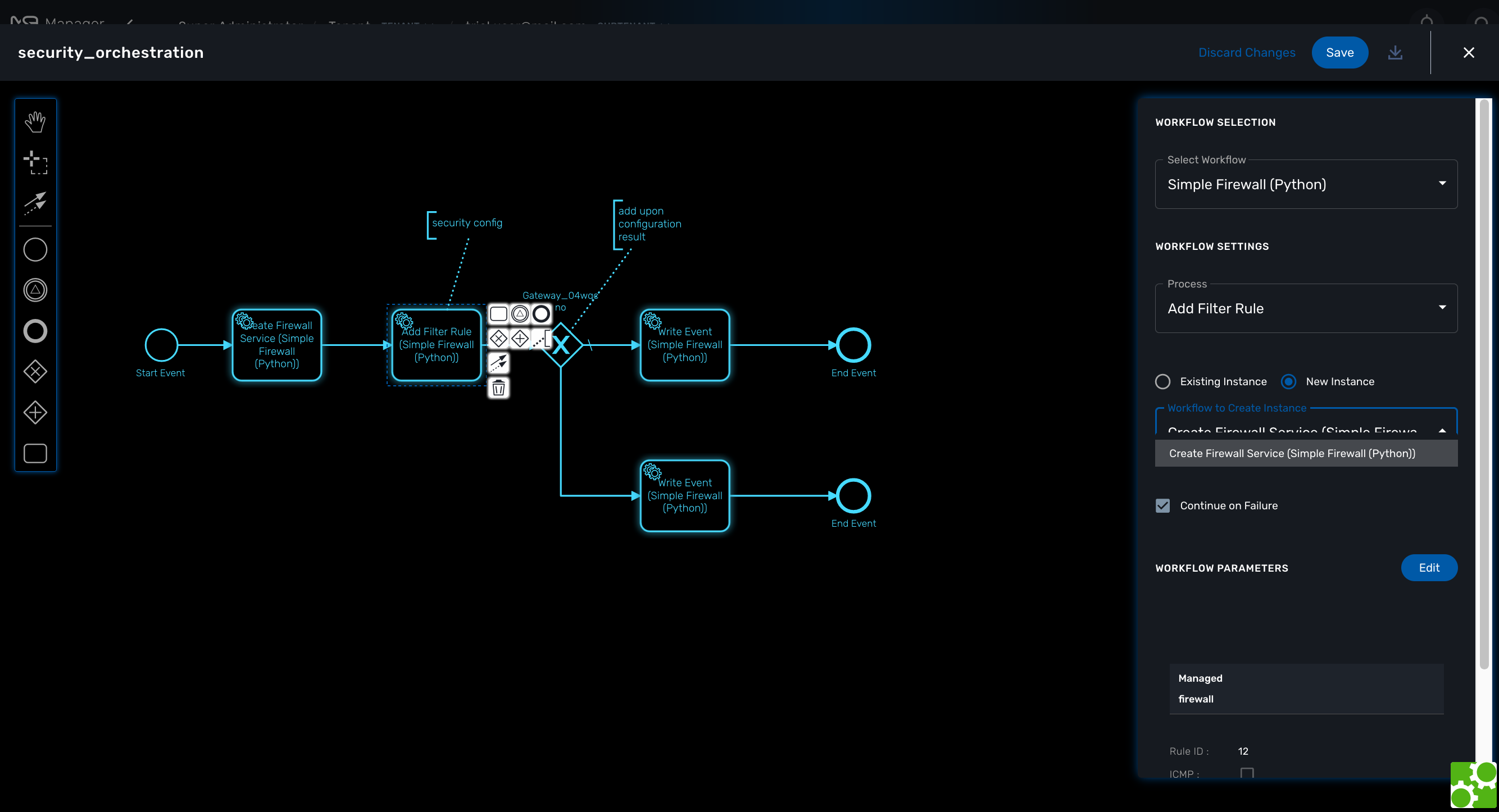

Connecting workflows and processes

Select an executable task to see the list of workflows (based on the sub-tenant selected), then select a process and provide its input parameters.

If the process you select in a BPM task is an "UPDATE" process (see Workflow design for more details), you can either select an existing Workflow instance or use a new instance created by one of the previous BPM tasks.

This is extremely useful for BPM designers for chaining tasks together.

Execution flow control

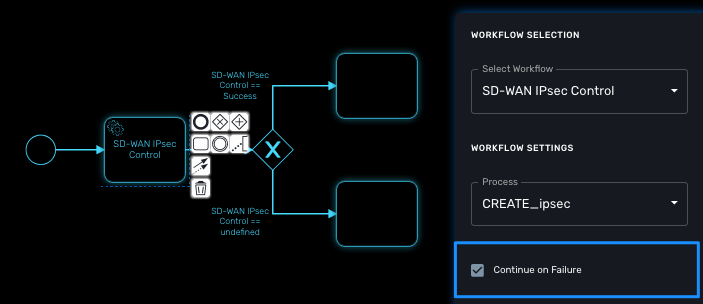

By default, the BPM execution will stop whenever the associated workflow process execution fails but you may need to make sure that the BPM continues to execute despite the failure. This is typically the case when there is a decision gateway where execution is routed based on the status of the process execution.

To allow the BPM process to continue executing after a workflow process execution fails, you need to edit the BPM task and check "Continue on Failure."
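The effect of "Continue on Failure" can be pictured with a short simulation. It is a sketch only: `run_bpm`, the task dictionaries and the `continue_on_failure` key are hypothetical names, and the status strings are borrowed from the workflow execution statuses.

```python
def run_bpm(tasks):
    """Run BPM tasks in order and return the (name, status) of each task run."""
    history = []
    for task in tasks:
        status = task["workflow_process"]()
        history.append((task["name"], status))
        if status == "FAILED" and not task.get("continue_on_failure", False):
            break  # default behaviour: the BPM execution stops here
    return history


def ok():
    return "ENDED"


def ko():
    return "FAILED"


strict = run_bpm([
    {"name": "check", "workflow_process": ko},
    {"name": "notify", "workflow_process": ok},
])
tolerant = run_bpm([
    {"name": "check", "workflow_process": ko, "continue_on_failure": True},
    {"name": "notify", "workflow_process": ok},
])
print(strict)
print(tolerant)
```

Without the flag the second task never runs; with the flag the failure is recorded and the BPM moves on, which is what a downstream decision gateway needs in order to route on the failed status.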

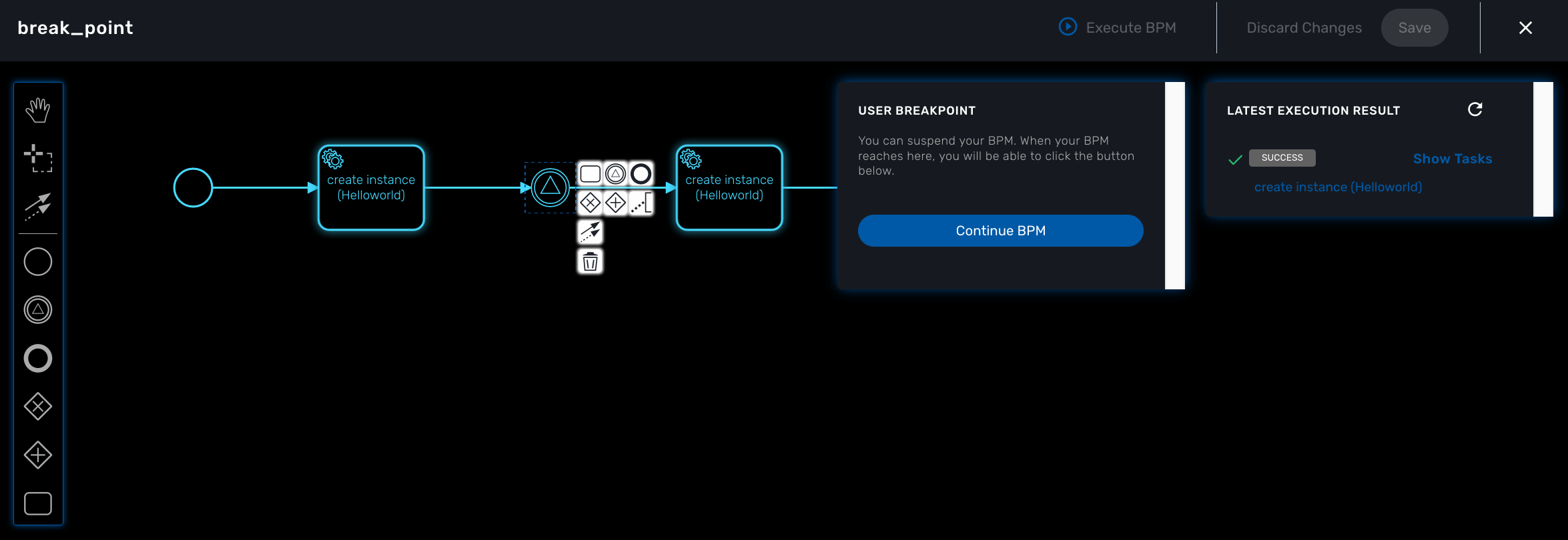

Execution breakpoint

With the execution breakpoint you can create pauses in the BPM flow execution. The BPM process will run, stop and wait for the user to select the breakpoint symbol and click "Continue BPM" to resume its execution.

Breakpoints can be used for debugging a complex BPM process step by step, without triggering all the workflows at once. They can also be used to organise a complex BPM into several parts and allow for manual validation of each intermediate step.

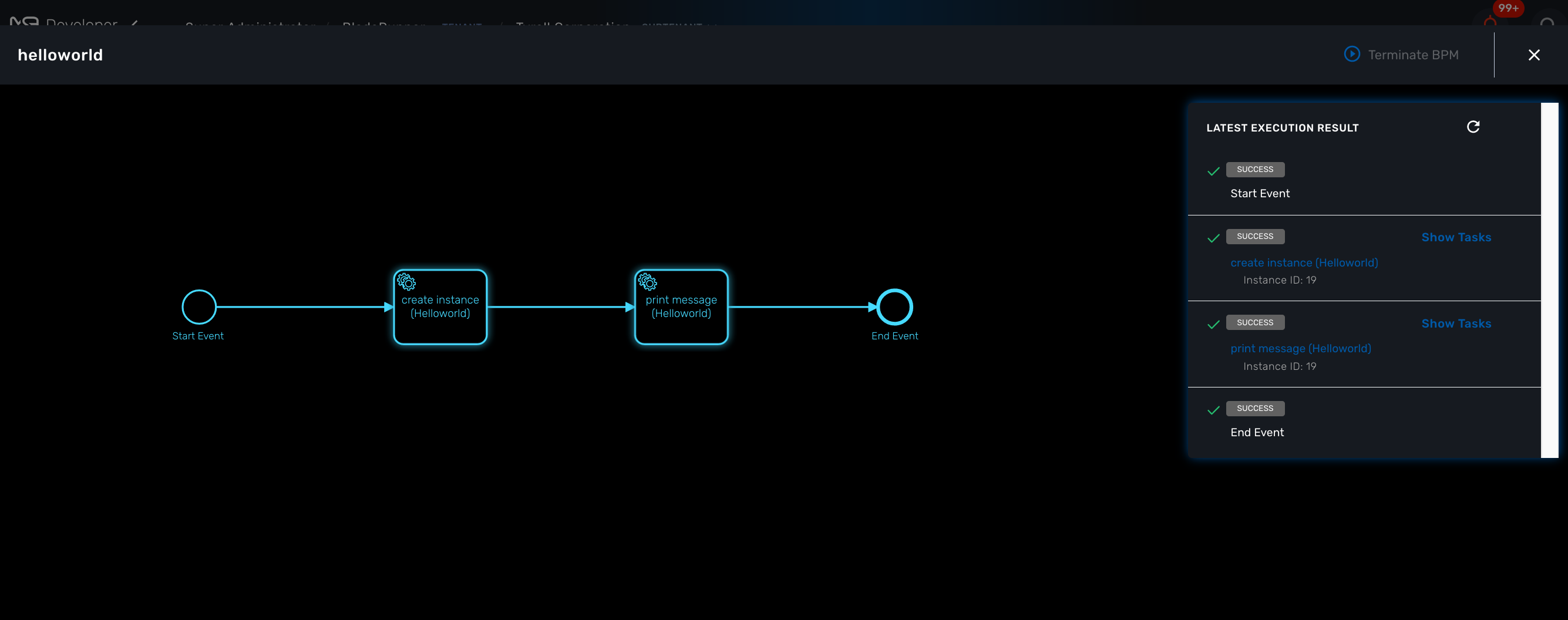

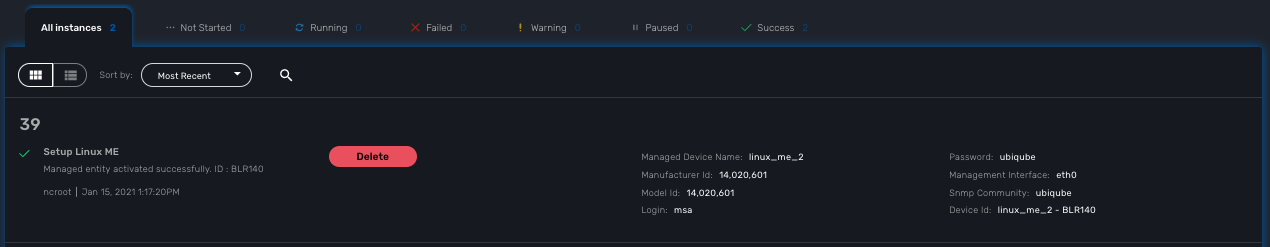

Execution tracking

The BPM engine will start executing the BPM tasks one by one and the status of the current workflow process execution will be updated live in the view "LATEST EXECUTION RESULT" while the detail of the process execution will be displayed.

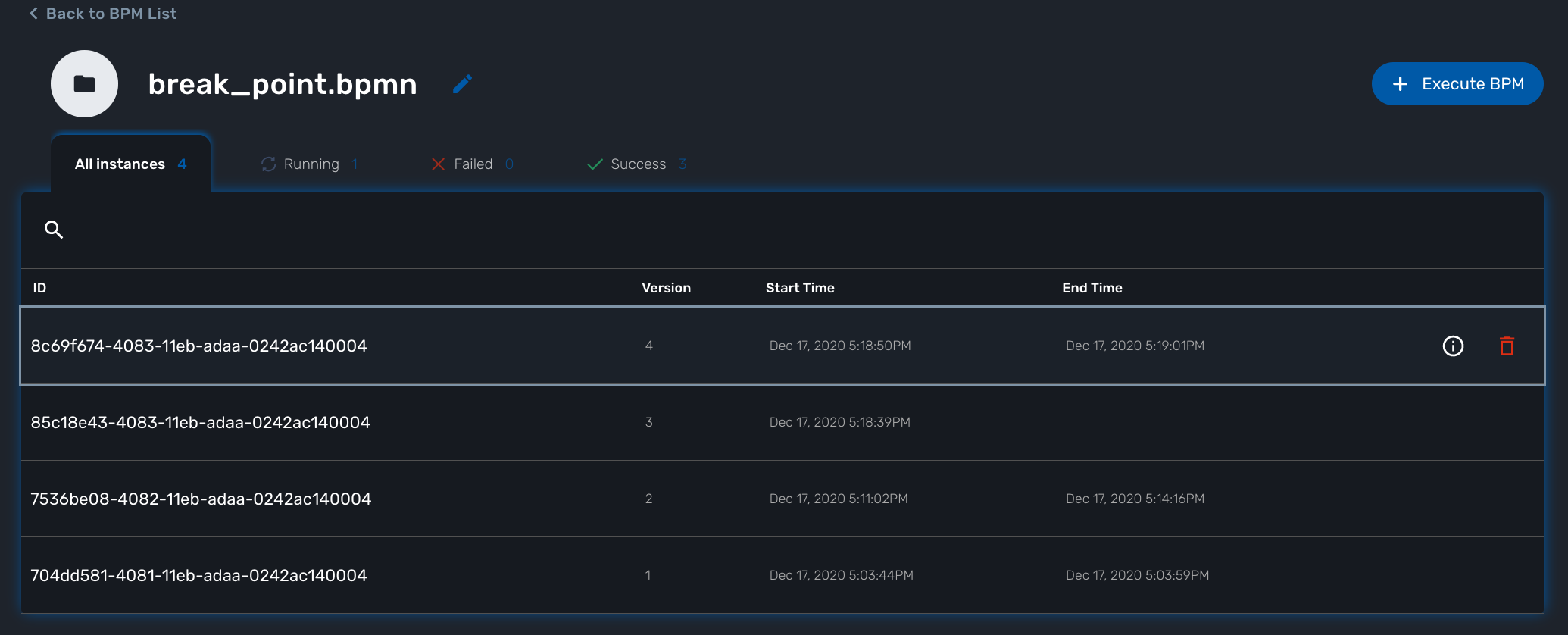

BPM instances management

BPMs are associated with a subtenant and, much like workflows, you can manage the instances of BPM executions.

For instance, if you executed a BPM with a breakpoint, you don’t need to leave the BPM execution screen open. You can trigger the execution, close the screen and later, select the instance and open it.

Getting Started Developing BPM

The BPM (Business Process Modeling) will allow you to design your processes to automate and then execute these processes.

Uses of BPMN

Business Process modeling is used to communicate a wide variety of information to a wide variety of audiences. BPMN is designed to cover many types of modeling and allows the creation of end-to-end Business Processes.

The structural elements of BPMN allow the viewer to be able to easily differentiate between sections of a BPMN Diagram.

The MSactivator™ provides the support for executable BPM Processes.

With the BPM design console, you can design your BPM and connect the BPM elements to Workflows.

The MSactivator™ provides partial support for the BPMN 2.0 specification: you can use Events and Activities.

Events will let you specify the beginning and the end of the process execution.

Integration Tasks will allow you to specify the flow of execution of your BPM.

Use text annotations to add descriptions to your BPM elements.

Not supported yet

Example

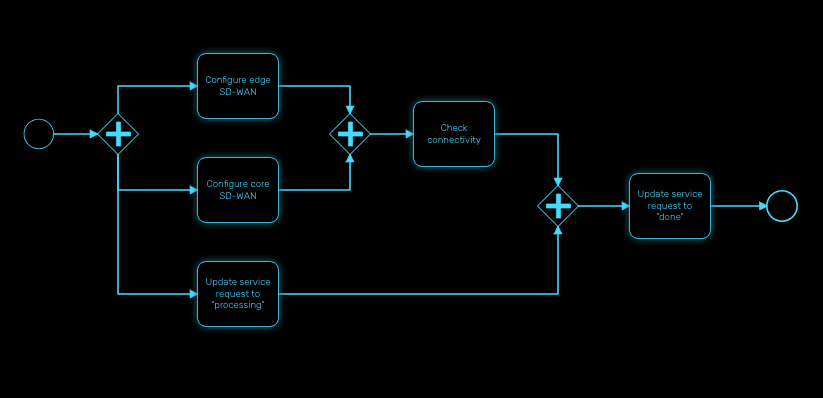

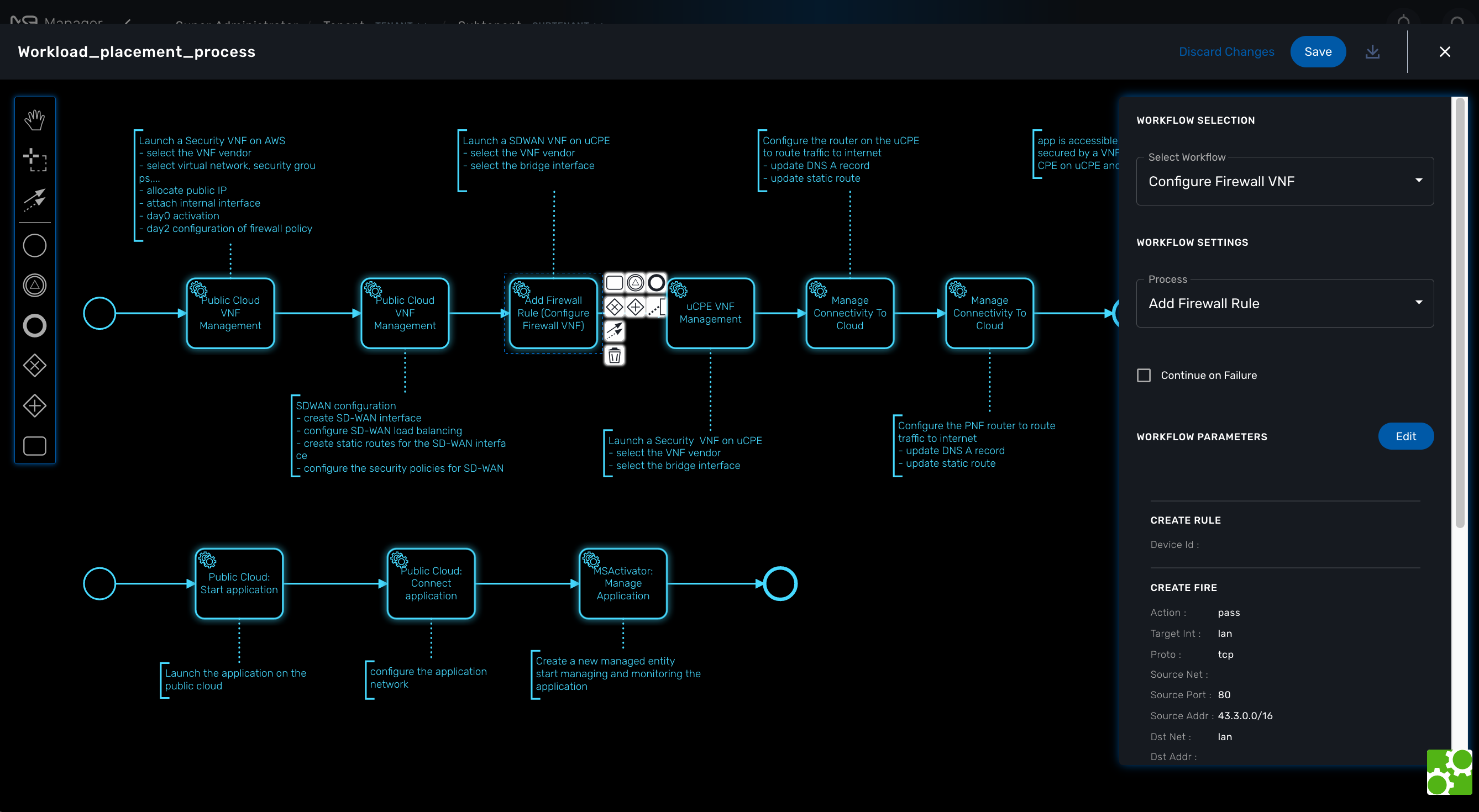

The BPM below is made of 1 start event, 5 integration tasks and an end event.

Each integration task calls a workflow from the workflows that are associated to the current customer.

Create or Edit a BPM

To create a new BPM you need to select "Automation" on the left menu. This will list any BPM available for the current customer.

You can either edit a BPM or create a new one from this screen. Use your mouse to add elements and link them together.

For each task, you need to select a workflow and one of the processes from that workflow.

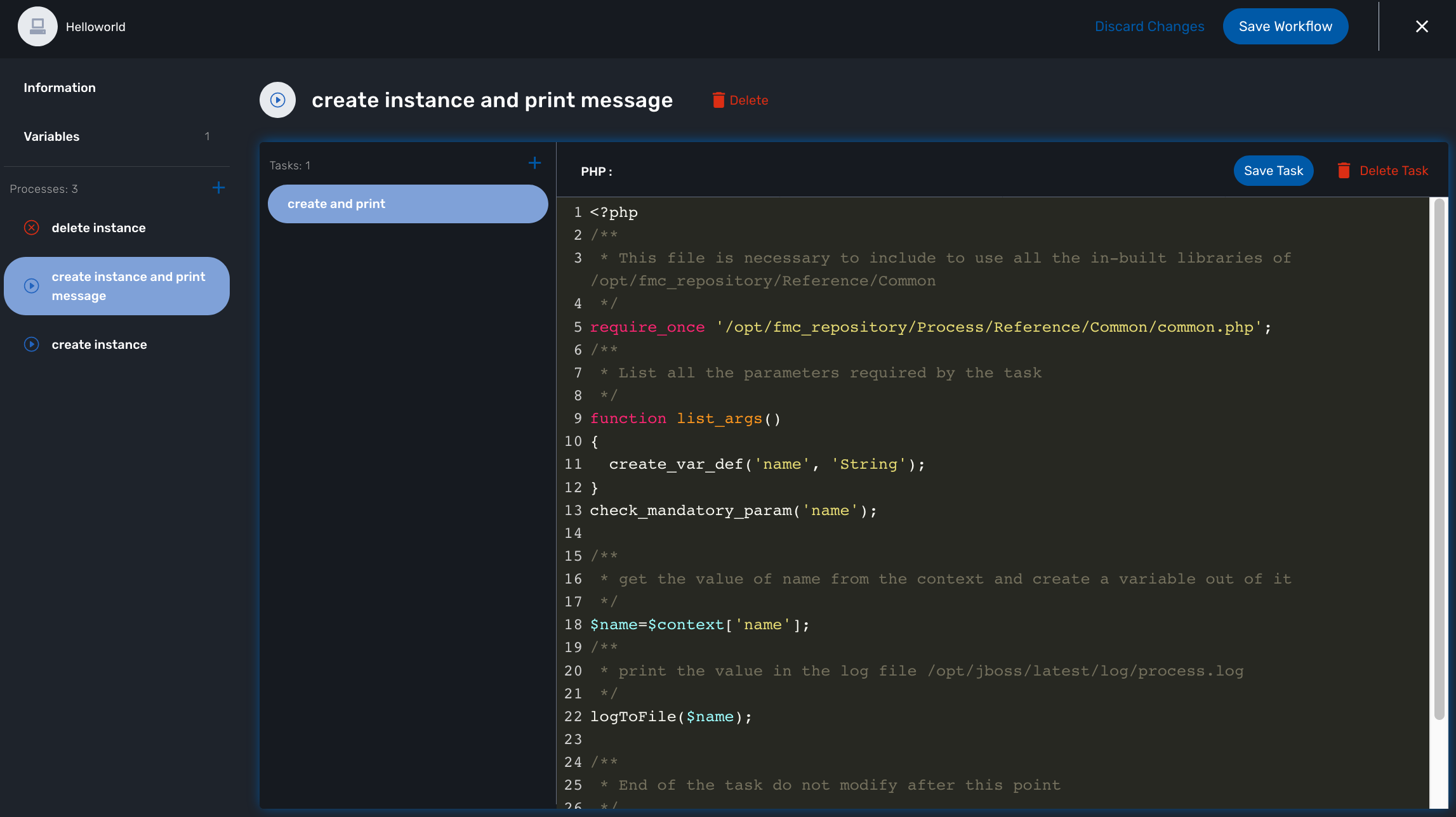

The BPM editor will list all the processes defined in a workflow and when the process is selected it will list the variables that are defined in the tasks by the function list_args.

| you should only select workflow processes with the type CREATE. |

You can save your BPM design anytime and edit it later.

The BPM files are stored in the repository under /opt/fmc_repository/Datafiles/<TENANT ID>/<CUSTOMER ID>/bpmn

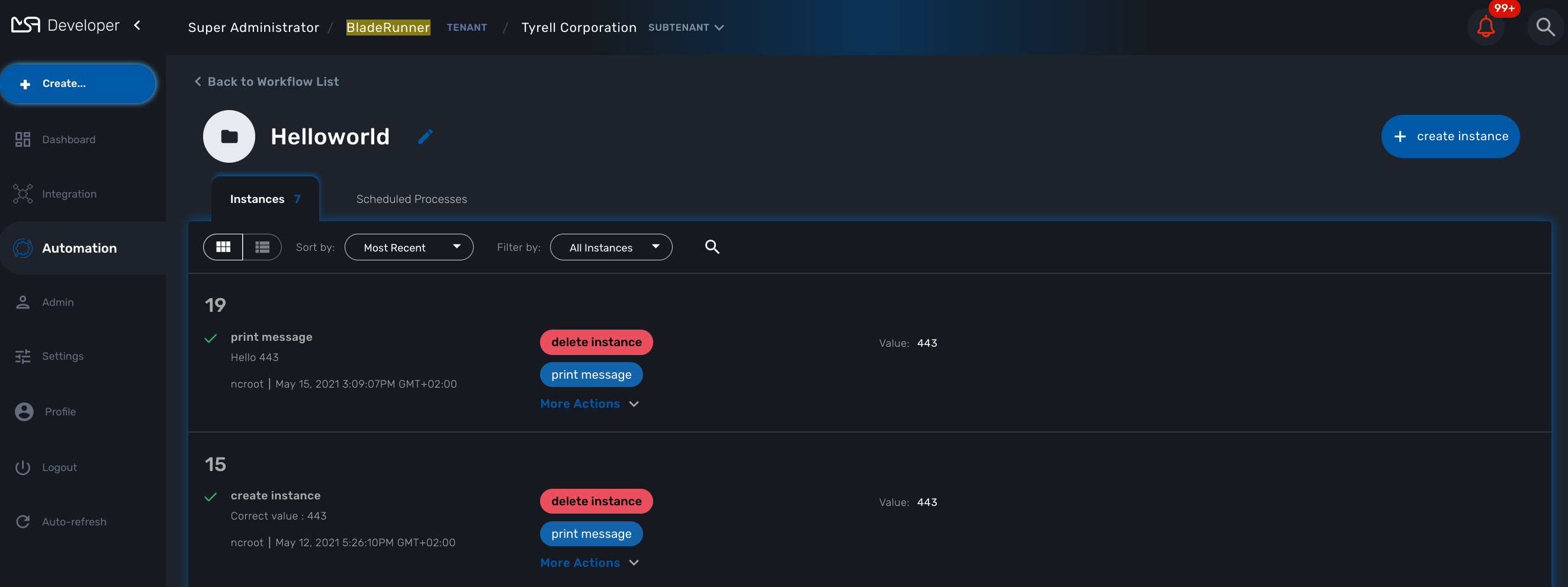

The Helloworld BPM

This tutorial will show you, in a simple way, how to create a new BPM and call a Workflow.

It uses the Helloworld example, which is detailed in this documentation: Getting Started Developing Workflows.

As stated above, the BPM tasks can only call Workflow processes with the type CREATE. Before creating the BPM, you need to add a new process to the Helloworld workflow and make sure its type is CREATE. This process can have a single task and you can reuse the PHP code from the Helloworld tutorial.

Once this is done, go to the "BPM" section under "Automation" and click on "+ Create BPM".

Add a Task Activity and an End Event.

Click on the task, select the Helloworld workflow, then from the list of processes, select the new CREATE process. Provide a value for the parameter name and save the BPM.

To test your BPM, click on its name from the list of BPM and click on "Execute BPM".

You can check that the workflow process was executed and the message is visible in the processes execution status.

Workflow Editor

The workflow editor is a web based UI tool for designing, developing, testing and releasing automation workflows.

Overview

With the workflow editor, you can create new workflows or edit existing workflows.

This document explains how to use the editor to design workflows and implement them in PHP or in Python.

The Workflow designer and execution engine are located in the architecture layer, between the BPM and the Microservice.

Workflow overview

A workflow is an automation entity that can be used to automate all sorts of simple to complex processes.

A workflow is defined by:

- a set of variables that can be used to hold the state of a workflow instance;

- a set of processes made out of tasks. This is where the execution is coded.

The tasks are scripts that can be implemented either in PHP or in Python.

The lifecycle of a workflow

There are 3 main types of workflow processes:

CREATE

execute an automated process and create a new instance of the workflow.

UPDATE

execute an automated process that may (but does not have to) update the state of the workflow instance.

DELETE

execute an automated process that will also remove the instance of the workflow.

| a delete process can be assigned to the trash icon of the workflow instances. By default, the trash icon action will only delete the workflow instance without executing any specific process. |

Execution status

The status of a normal process execution is defined by the implementation of the tasks, and it’s the responsibility of the developer to handle the termination status. As a developer, you have 3 statuses, defined by constants in PHP or Python, that you can use in your task to define the condition for transitioning from a task to the next one.

- ENDED: the execution was successful, the next task will be executed and if it was the last task, the process will be marked as "Success".

- WARNING: the execution was successful but some warnings were raised, the next task will be executed and if it was the last task, the process status will be displayed as "Warning".

- FAILED: the execution failed, the process execution will stop at the current task and the process status will be noted as "Failed".
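The three statuses above can be summarised as a small state machine. The sketch below does not use the SDK: `run_process` and `STATUS_LABELS` are hypothetical names, and it assumes the process status is derived from the last task that was executed, since the text does not say how a warning raised by an earlier task is reported.

```python
STATUS_LABELS = {"ENDED": "Success", "WARNING": "Warning", "FAILED": "Failed"}


def run_process(tasks):
    """Run tasks in order; return (process status label, number of tasks run)."""
    status = "ENDED"
    executed = 0
    for task in tasks:
        status = task()
        if status not in STATUS_LABELS:
            raise ValueError(f"unknown task status: {status}")
        executed += 1
        if status == "FAILED":
            break  # the process execution stops at the current task
    return STATUS_LABELS[status], executed


all_good = run_process([lambda: "ENDED", lambda: "ENDED"])
with_warning = run_process([lambda: "ENDED", lambda: "WARNING"])
failing = run_process([lambda: "FAILED", lambda: "ENDED"])
print(all_good, with_warning, failing)
```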

| See the PHP SDK and Python SDK documentation for more details and code samples on this topic. |

How to persist the state of a workflow instance

The variables define the current state of a workflow instance. This state is maintained in a context which is persisted in the database.

For each workflow instance, the variable and their values are stored in the database in a context. This context is accessible in read-write mode anytime in the process tasks, in order to store a value of a variable or read a value from a variable.

in Python: read a value from the context

context = Variables.task_call()

my_name = context['name']

set a value in the context

context['name'] = my_name

in PHP: read a value from the context

$my_name = $context['name'];

set a value in the context

$context['name'] = $my_name;

The context is persisted in the database and its value is updated after each task execution.

This is how variable values can be passed, during the execution of a process, from one task to another.

| by default, the variables that are declared are persisted in the context but you can also create local variables in the tasks and store them in the context. |
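How values travel from one task to the next can be sketched without the SDK. The example below is a minimal simulation under stated assumptions: `ContextStore` is a hypothetical in-memory stand-in for the database, and `run_task` is a hypothetical helper that loads the context before a task and saves it afterwards, as described above.

```python
import json


class ContextStore:
    """In-memory stand-in for the database that holds workflow contexts."""

    def __init__(self):
        self._rows = {}

    def load(self, instance_id):
        return json.loads(self._rows.get(instance_id, "{}"))

    def save(self, instance_id, context):
        self._rows[instance_id] = json.dumps(context)


def run_task(store, instance_id, task):
    """Load the context, run one task on it, then persist the updated context."""
    context = store.load(instance_id)
    task(context)
    store.save(instance_id, context)


def set_name(context):
    context["name"] = "Alice"


def greet(context):
    # Reads the value written by the previous task.
    context["message"] = "Hello " + context["name"]


store = ContextStore()
run_task(store, "42", set_name)
run_task(store, "42", greet)
final_context = store.load("42")
print(final_context)
```

The second task only sees the name because the first task's context was saved and reloaded between the two executions.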

Editor Overview

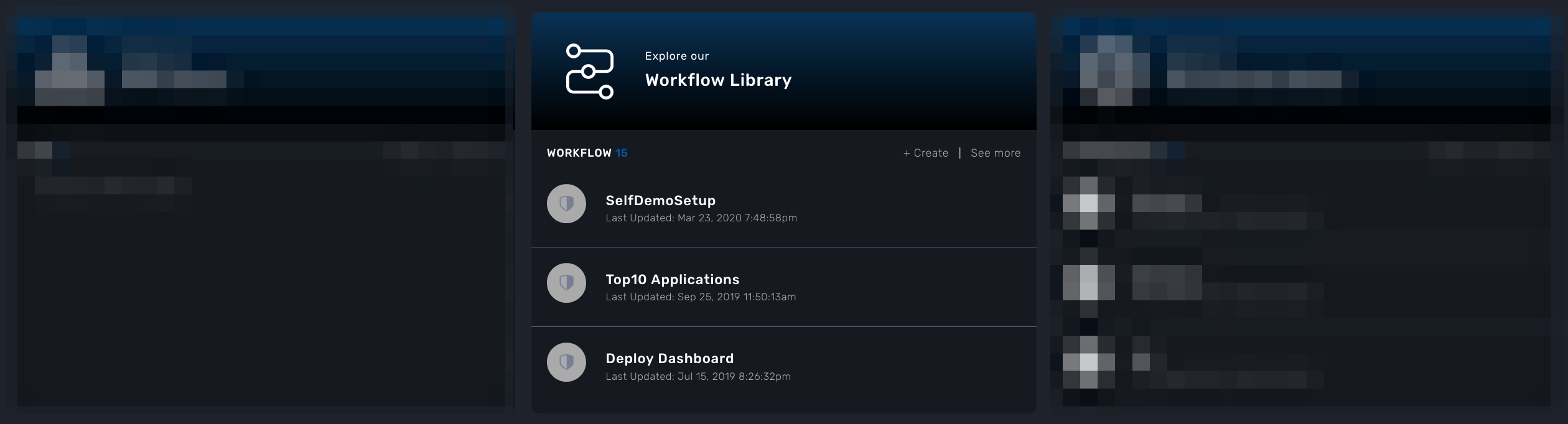

To create a new workflow, connect to the developer portal and click "+ Create" on the workflow library swimlane.

Workflow information

Provide the information related to the workflow:

- Workflow Name: the name of the workflow

- Description: a description of the workflow

- Delete process: the delete process to associate to the workflow instance trash icon.

- Workflow variable name: default to service_id (see below for more detail about this field)

- Workflow language: PHP or PYTHON (this cannot be edited)

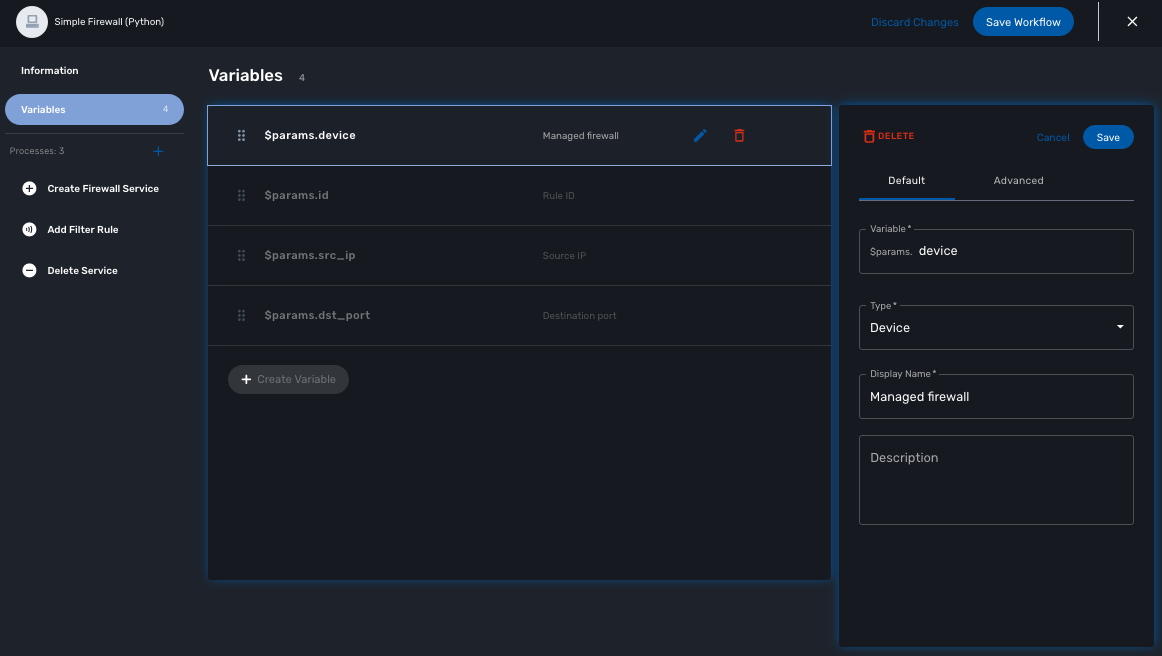

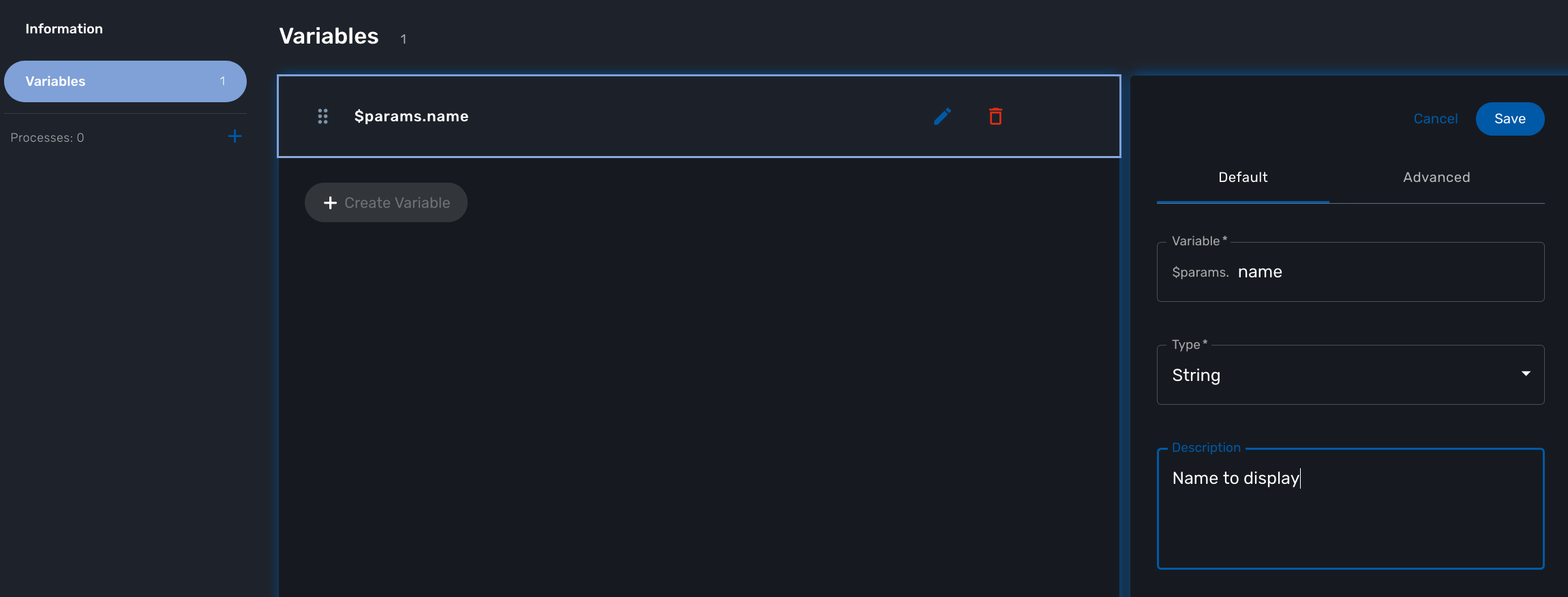

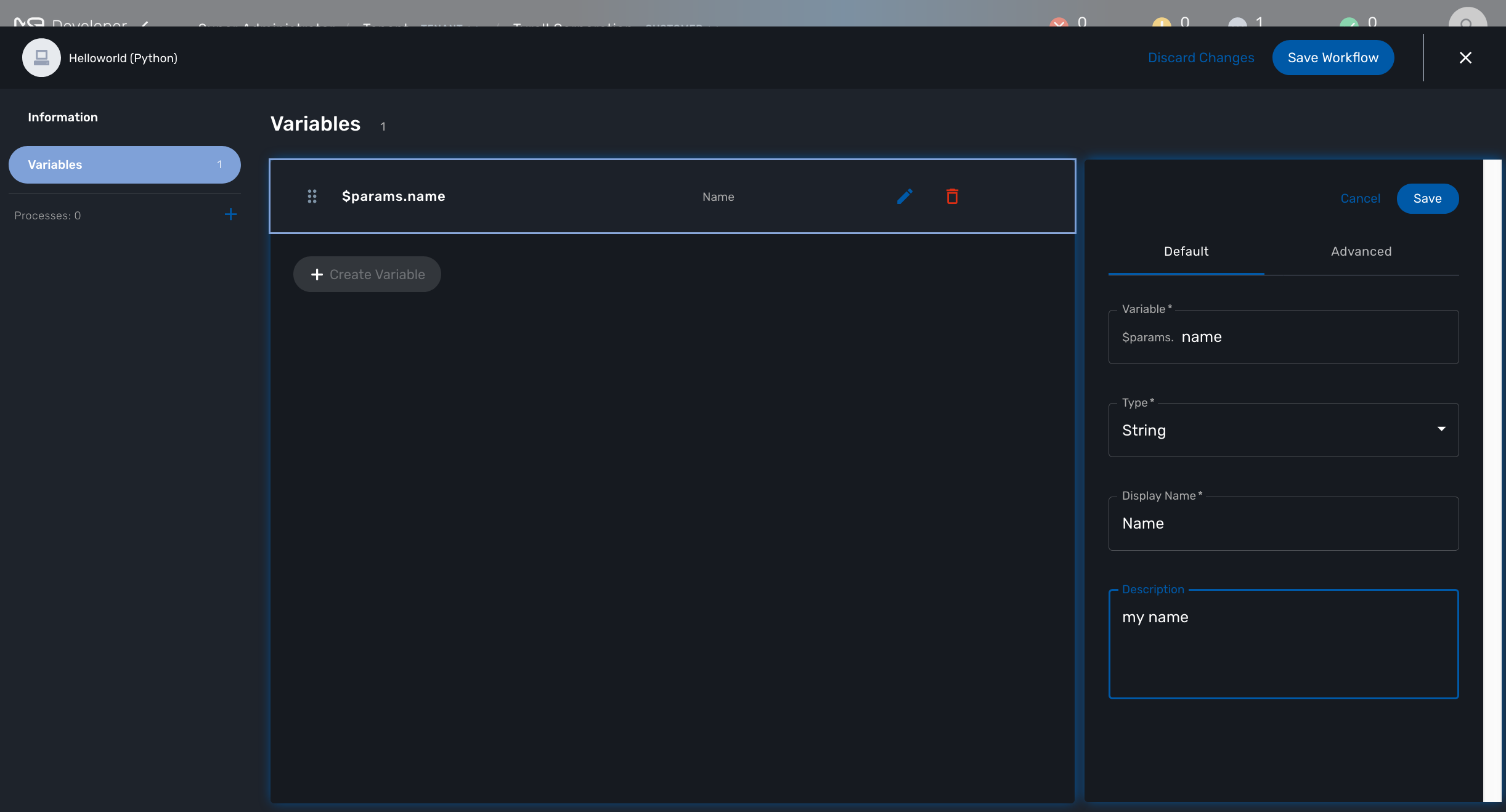

Workflow variables

Use "+ Create Variable" to add a variable to this workflow.

A variable can be used to store data in the context of the workflow instance and it can also be used to generate the user input fields when executing a process from the UI.

It is possible to define a variable for "internal" use and decide to keep it hidden from the end-user.

A variable has a name, a type and a display name.

This documentation will give you more details on the variables and the various types available.

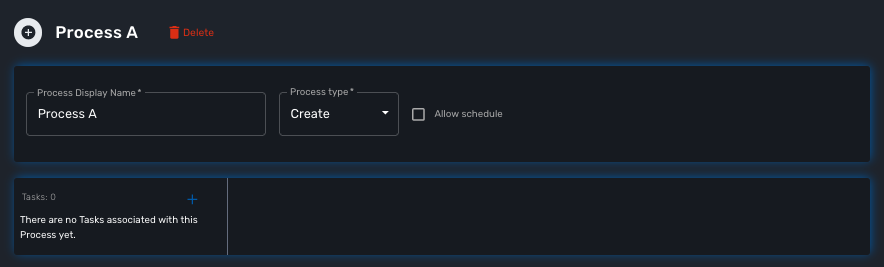

Workflow processes

A workflow can have as many processes as needed. The processes provide the "public" functions exposed by a workflow either with the UI or the REST API.

To create a process, click on the "+" and provide a name and a type (CREATE, UPDATE or DELETE).

| the other types listed in the UI are not supported yet. |

Process scheduling

Scheduling of process execution can be authorized when defining a process by checking "Allow scheduling" on the process definition screen.

When scheduling is allowed, the user can execute the process either the usual way by clicking "Run" or use "Schedule" to configure the process execution scheduling.

Tasks

The tasks are the smallest execution unit of a workflow.

A process can have as many tasks as needed and although it’s possible to implement a process with a single task, splitting the overall process execution into smaller tasks will ease the code maintenance and the execution monitoring.

Depending on the workflow language selected when creating the workflow, the task should be implemented either in Python or in PHP.

When creating a new task, the UI will populate the code editor with a pre-defined code template that you can use to start coding your tasks.

PHP template

<?php

require_once '/opt/fmc_repository/Process/Reference/Common/common.php'; (1)

function list_args() (2)

{

create_var_def('var_name', 'String');

create_var_def('var_name2', 'Integer');

}

check_mandatory_param('var_name'); (3)

/**

* $context => workflow context variable one per Instance

* ENTER YOUR CODE HERE

*/

$context['var_name2'] = $context['var_name2'] + 1; (4)

if ($context['var_name2'] % 2 === 0) { (5)

$ret = prepare_json_response(FAILED, 'Task Failed', $context, true);

echo "$ret\n";

exit;

}

task_success('Task OK'); // or task_error('Task FAILED'); (6)

?>

| 1 | include the PHP SDK libraries. |

| 2 | function to list all the parameters required by the task and that should also be rendered as user input field. |

| 3 | function to check whether all the mandatory parameters are present in user input. |

| 4 | assign a variable with a modified value from another variable. |

| 5 | task execution status will depend on the value of a variable |

| 6 | end of the task. |

Python template

from msa_sdk.variables import Variables (1)

from msa_sdk.msa_api import MSA_API

dev_var = Variables()

dev_var.add('var_name', var_type='String') (2)

dev_var.add('var_name2', var_type='Integer')

context = Variables.task_call(dev_var)

context['var_name2'] = int(context['var_name2']) + 1 (3)

ret = MSA_API.process_content('ENDED', 'Task OK', context, True)

print(ret) (4)

| 1 | include the Python SDK libraries. |

| 2 | list all the parameters required by the task and that should also be rendered as user input field. |

| 3 | update the current context with another value read from the context. |

| 4 | end of the task. |

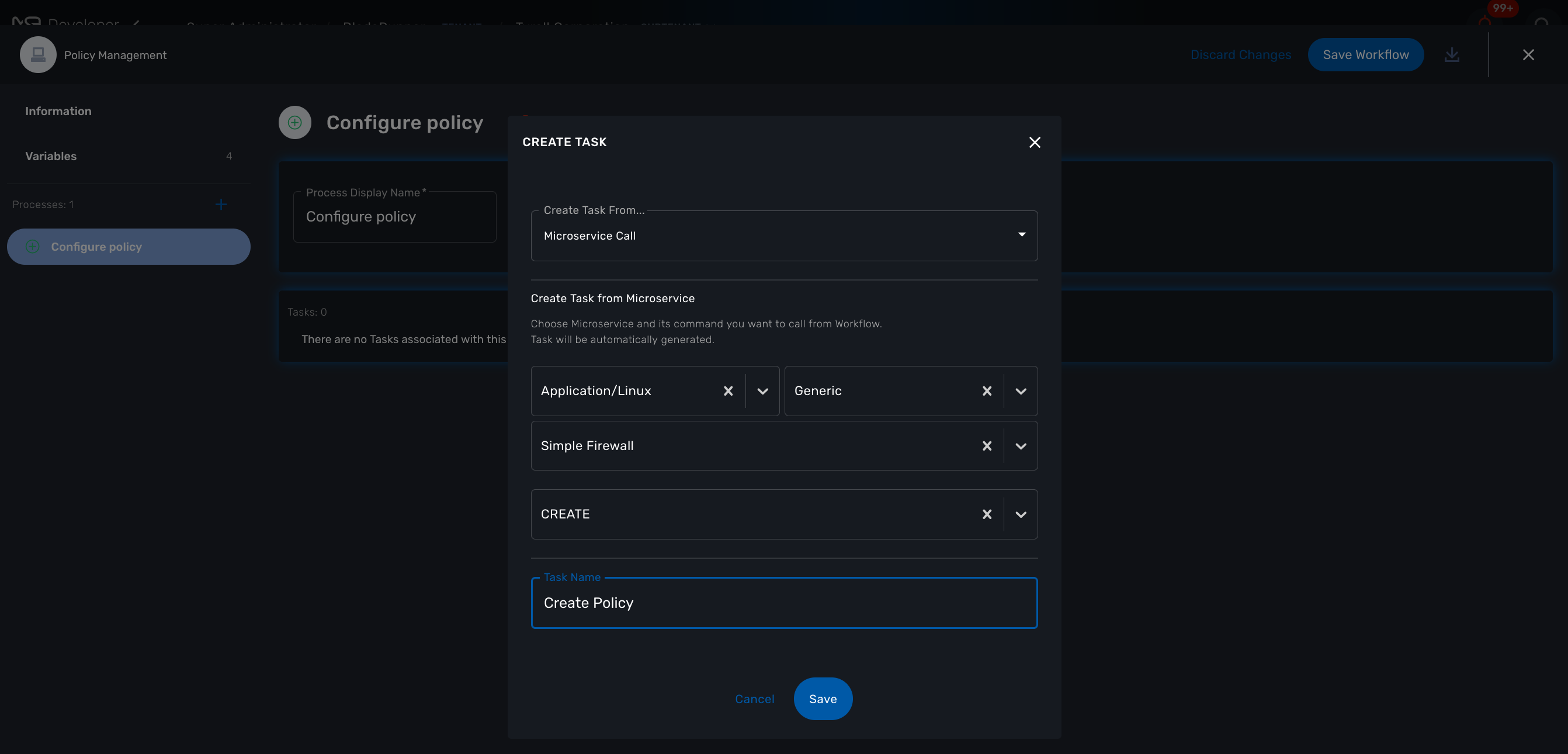

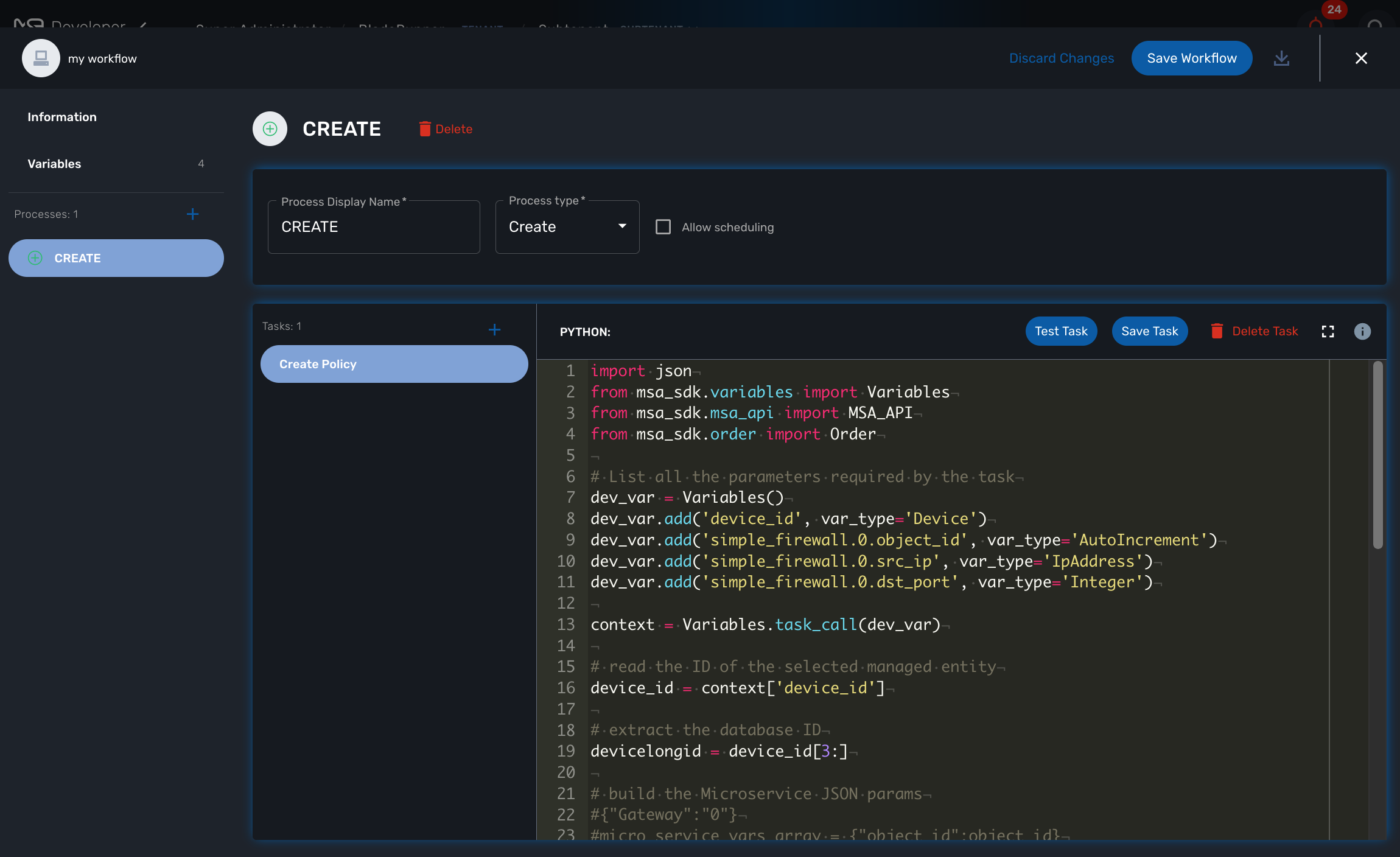

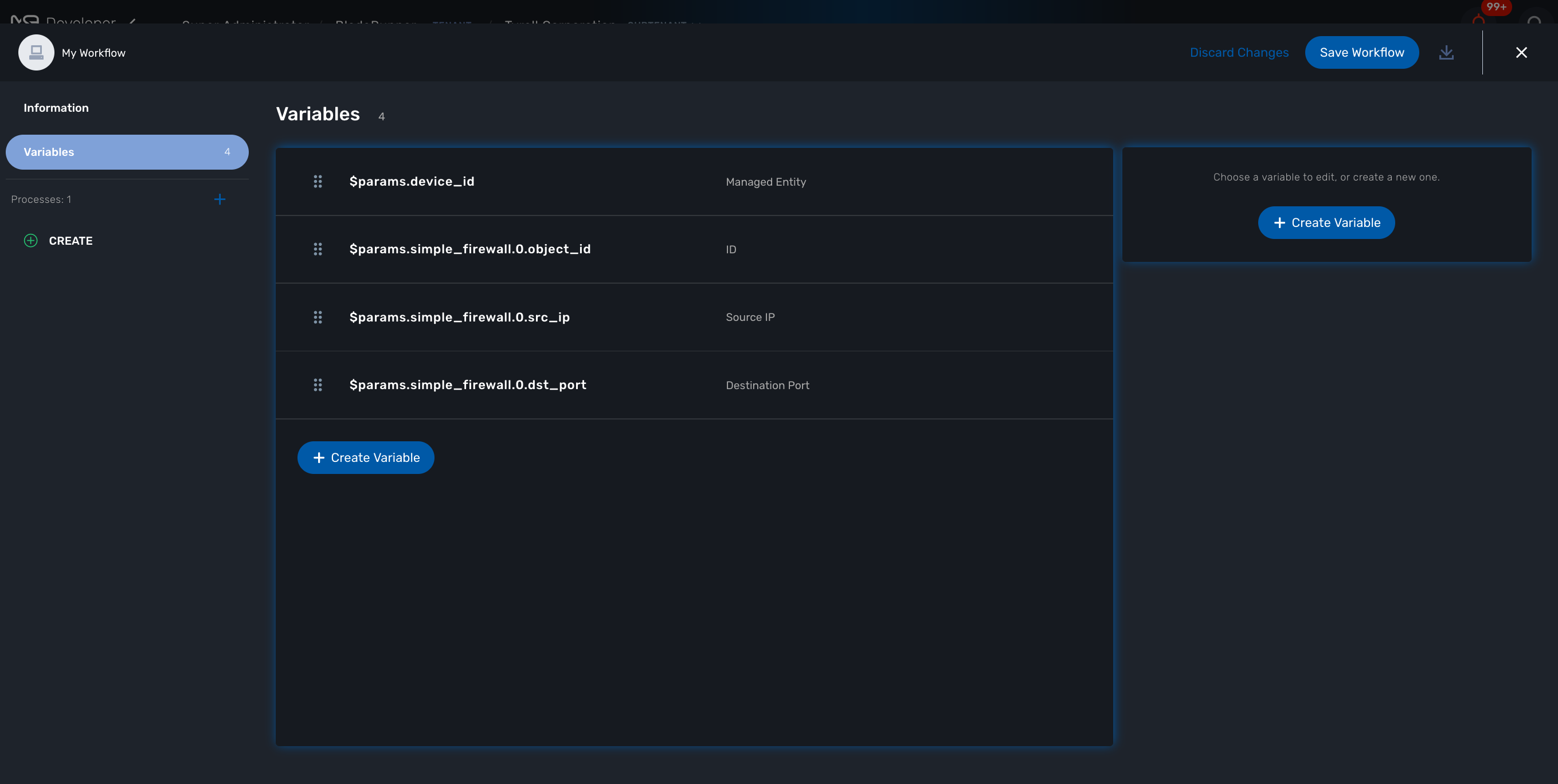

Microservice to Task code generation

When you create a task, you can create a simple task pre-coded with one of the templates above, but you can also choose to create a task from a Microservice call.

If you select the second option, you can select a Microservice and one of its functions to generate a task containing all the auto-generated code to execute this microservice.

The code of the task is automatically generated.

The variables related to the microservice are added.

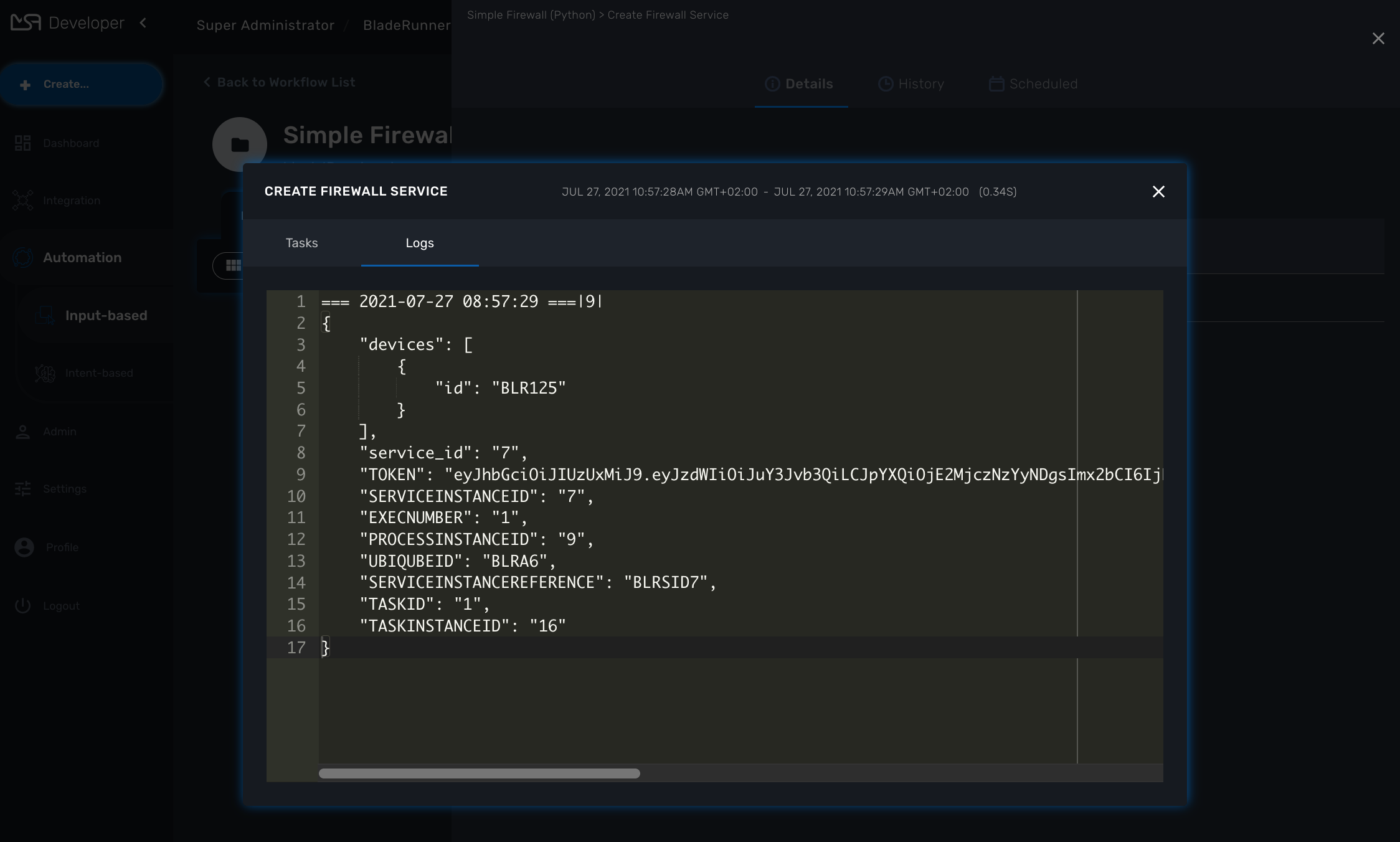

Logging and troubleshooting

You can add debugging information to help you with your development and also provide useful information for troubleshooting tasks in production.

The log files are generated per workflow instance in the container msa_api under /opt/wildfly/logs/processLog/. The log files are formatted as process-XX.log where XX is the workflow instance ID.

You can monitor the logs of a process by opening the logs tab in the process execution view.

You can also monitor the logs of a process with the CLI command below

docker-compose exec msa-api tail -F /opt/wildfly/logs/processLog/process-XX.log

in PHP:

require_once '/opt/fmc_repository/Process/Reference/Common/common.php';

logToFile("a message");

in Python:

from msa_sdk.variables import Variables

from msa_sdk import util

dev_var = Variables()

context = Variables.task_call(dev_var)

process_id = context['SERVICEINSTANCEID']

util.log_to_process_file(process_id, 'a message')

Workflow Variables

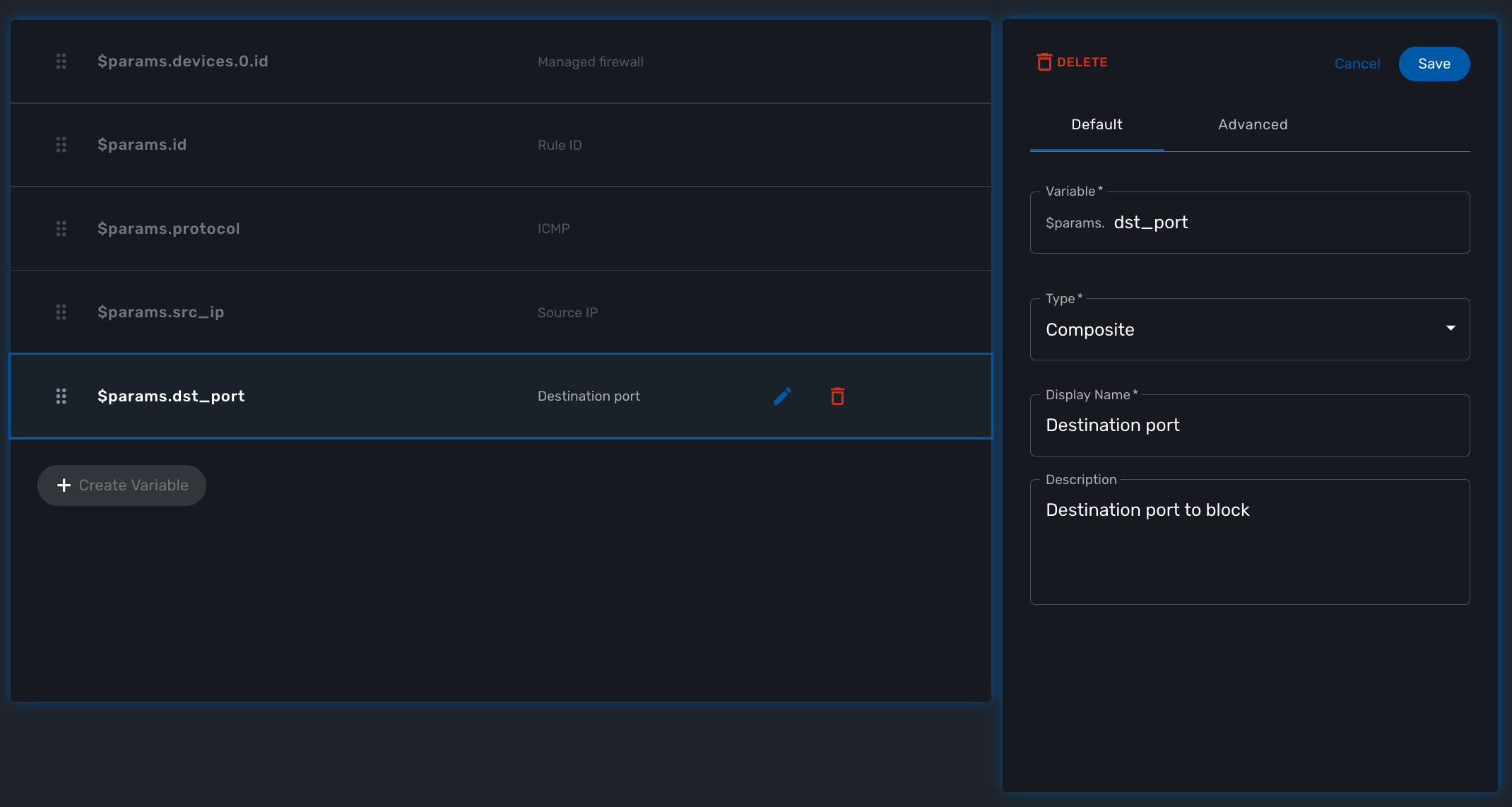

Variables are used to hold the parameters to pass to a workflow process. For instance, the port and IP address to block in a firewall policy.

All variables are referenced with the prefix $params, which is set automatically in the variable editor screen. When a variable has to be referenced in one of the workflow functions, use the syntax {$params.your_variable} (see below for more examples).

By default the type of a variable is String but other types are supported, such as Integer, Boolean, Password, IpAddress and Microservice Reference.

Overview

Variables are used to hold the parameters to pass to a workflow process. For instance, the port and IP address to block in a firewall policy.

Variables can also be used to display user information that is not necessarily meant to be used for configuring the managed entity.

Setting variables is done from the section "Variables" on the workflow editor screen.

The type of a variable will affect the way the workflow end user form will be rendered.

For instance, the type boolean will render the variable with true/false radio buttons.

Default settings

When creating or editing a variable, some information needs to be provided in the "Default" section.

Variable

Name of the variable to use in the implementation of the workflow or when calling the REST API.

Type

The type of the variable should be one from the list below

String

The default type for a variable. It accepts any value and the UI renders it as an input field without any specific validation of the value set.

Boolean

This data type accepts a value of true or false, the UI will render it as a checkbox.

Integer

This data type represents a numerical value, the UI will render it as an input field restricted to integer.

Code

This type renders a variable as a text field with the option to select a code language (default is simple text) for syntax highlighting.

Password

| not supported yet |

This data type represents a multi-character value that is hidden from plain sight (i.e. the value is represented as asterisks instead of clear text).

IPv4 address and mask, IPv6 mask

| not supported yet |

This data type will enforce data validation against IP address formats.

Composite

The variable type composite provides ways to add control over the behavior of the workflow user form.

It can be used, for instance, to show/hide parts of the form based on the value of another component of the form.

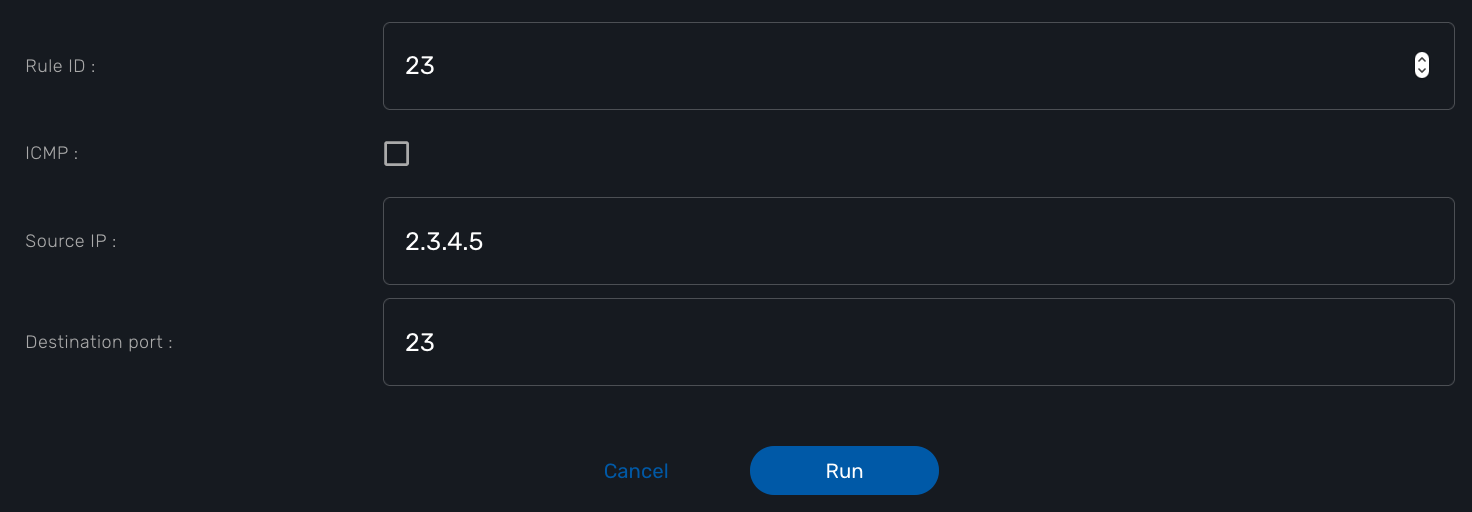

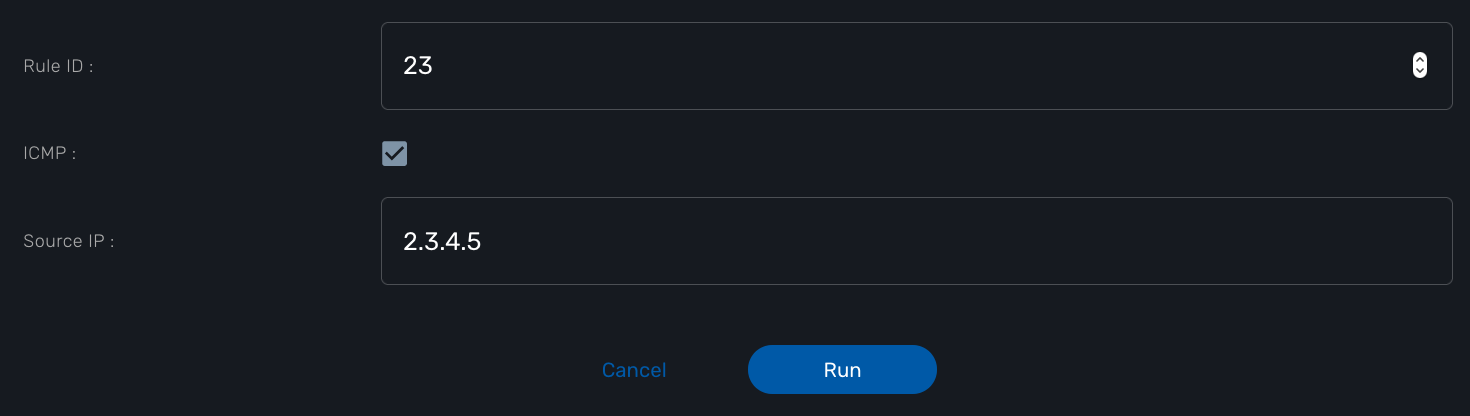

Let’s take a simple example to illustrate the use of the composite type with a simple workflow for managing firewall policy.

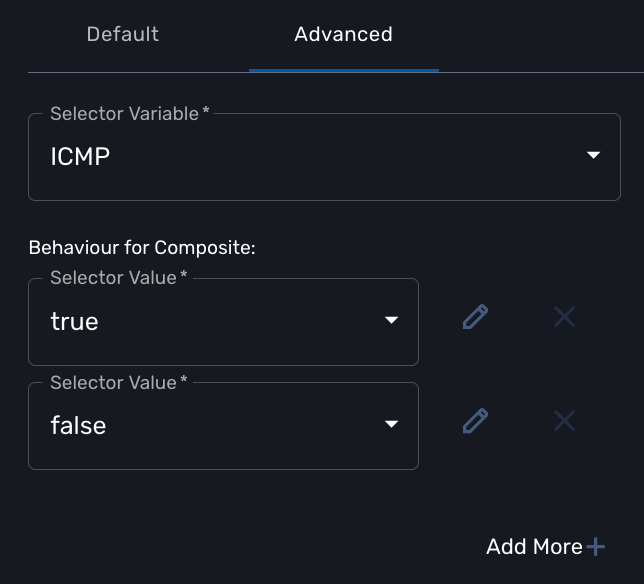

The workflow allows the user to create a firewall policy to block a source IP address and a destination port, but the user may also need to select the protocol (TCP, UDP or ICMP), and in the case of ICMP the destination port is not relevant. We need to build a workflow UI where the user has to provide the source IP and destination port when the protocol is TCP or UDP, and only the source IP when the protocol is ICMP.

In this example, the variable "dst_port" for the destination port should be typed as a composite because its behavior when rendered as a user web form will depend on the other variable "protocol".

To implement this behavior, set the type of "dst_port" variable to "Composite".

In the advanced parameter tab, first choose the "Selector Variable" and select the protocol (note that the list shows the display name, not the actual name of the variable).

Then configure the "Behavior for the Composite". The selector is a boolean so you can only have 2 types of behavior, one for true and one for false.

Each behavior can be configured by editing it with the pencil icon.

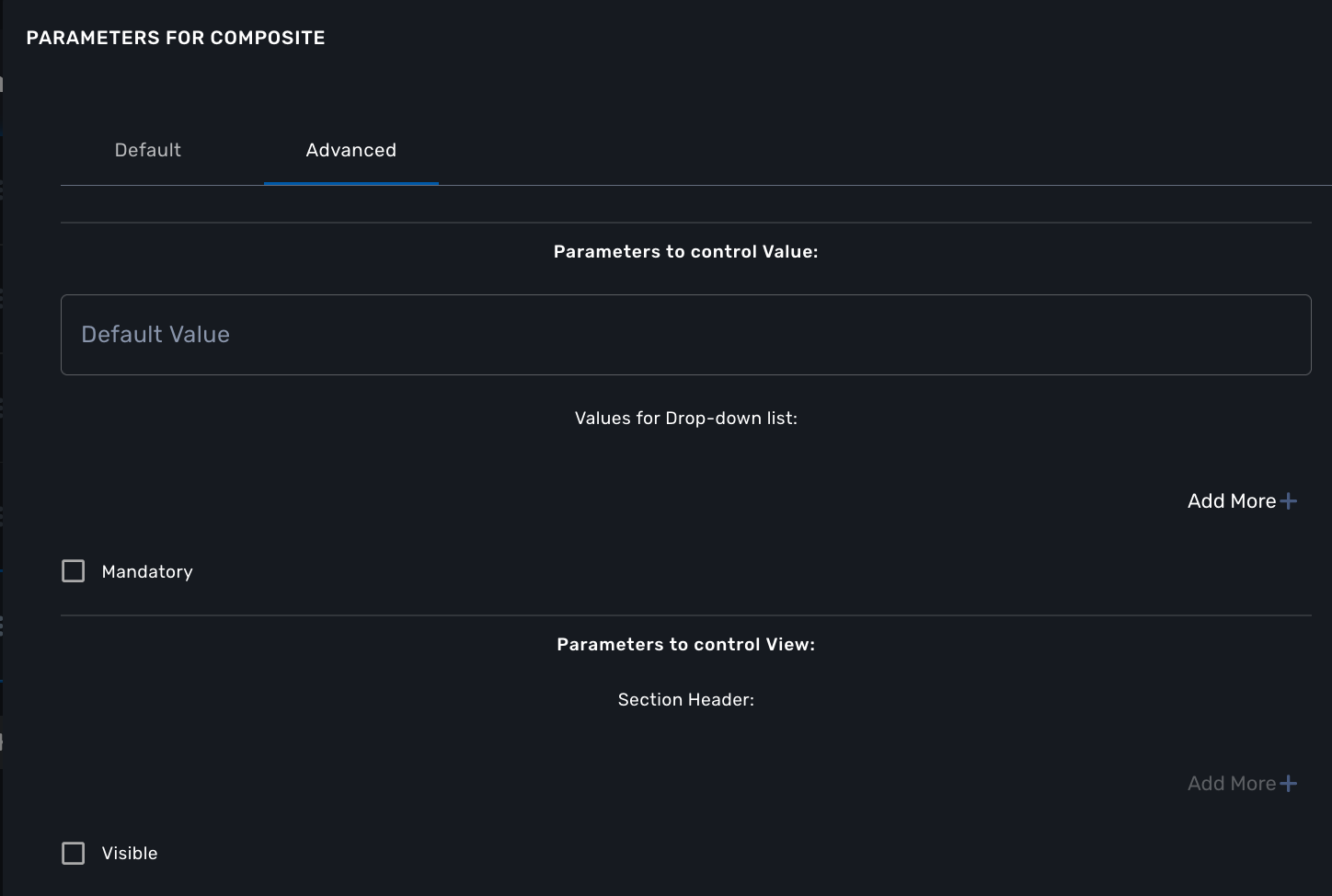

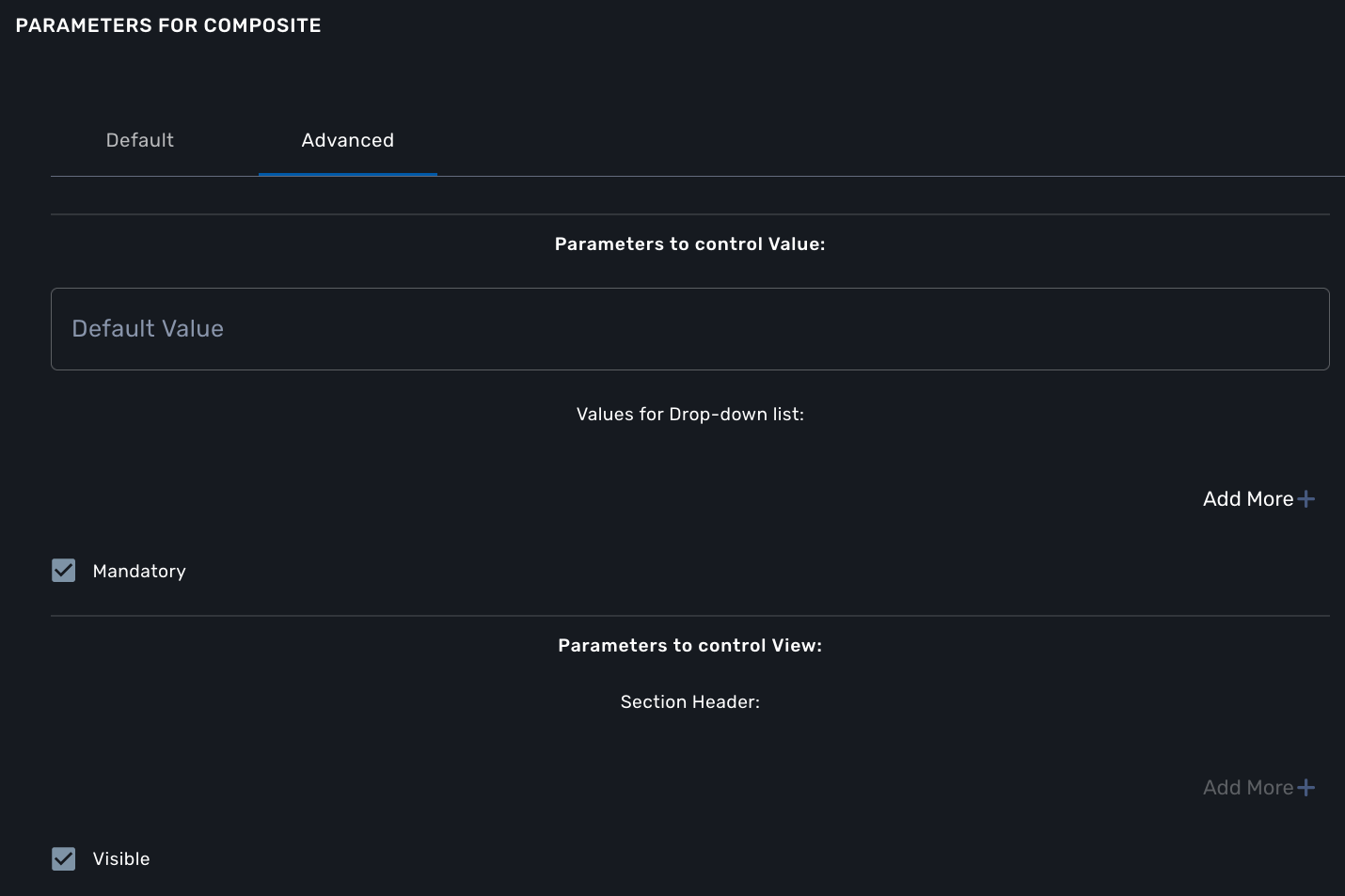

In our case, if the selector is set to true (when the user selects ICMP), the variable "dst_port" should be hidden: uncheck the attribute "Visible" in the advanced parameters for composite.

And when the selector is set to false, the variable "dst_port" should be visible and mandatory.
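The resulting form logic can be written down as a short Python sketch. It only models the behavior described in this example and is not how the UI is implemented: the functions `dst_port_behavior` and `missing_fields` and the variable name `src_ip` are hypothetical, and treating the hidden field as non-mandatory is an assumption.

```python
def dst_port_behavior(protocol):
    """Return the form behavior of "dst_port" for the selected protocol."""
    selector = protocol == "ICMP"  # boolean selector, true when ICMP is selected
    if selector:
        return {"visible": False, "mandatory": False}
    return {"visible": True, "mandatory": True}


def missing_fields(form):
    """List the mandatory fields that the user left empty."""
    missing = []
    if not form.get("src_ip"):
        missing.append("src_ip")
    if dst_port_behavior(form["protocol"])["mandatory"] and not form.get("dst_port"):
        missing.append("dst_port")
    return missing


icmp_form = missing_fields({"protocol": "ICMP", "src_ip": "10.0.0.1"})
tcp_form = missing_fields({"protocol": "TCP", "src_ip": "10.0.0.1"})
print(icmp_form, tcp_form)
```

With ICMP the form is complete with only the source IP, while with TCP the destination port is reported as missing.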

Link

| not supported yet |

This type is useful if you want to display a URL in the user form, for instance to link to some documentation on a web server. It is usually used in read-only mode with the URL set as the default value of the variable.

Auto Increment

This type is used to maintain an incremental counter within the instances of a workflow for a managed entity. This is useful for managing the object_id.

| Setting | Description |
|---|---|
| Increment | an integer to define the increment step |
| Start Increment | the initial value for the variable |
| Workflows sharing the same increment | a list of workflows that are also using the same variable and need to share a common value |
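The three settings can be pictured with a tiny counter. This is an illustrative sketch, not the platform's implementation: the `AutoIncrement` class and the workflow names are hypothetical, and it assumes the first value handed out is the "Start Increment" value.

```python
class AutoIncrement:
    """Counter defined by a start value and an increment step."""

    def __init__(self, start, increment):
        self._next_value = start
        self._increment = increment

    def next(self):
        value = self._next_value
        self._next_value += self._increment
        return value


# Two hypothetical workflows sharing the same increment draw from one counter.
shared = AutoIncrement(start=100, increment=10)
object_ids = [
    ("workflow_a", shared.next()),
    ("workflow_b", shared.next()),
    ("workflow_a", shared.next()),
]
print(object_ids)
```

Because both workflows use the same counter, no two instances receive the same value.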

Device

This type is used to allow the user to select a managed entity and pass its identifier to the implementation of the workflow.

In the task implementation you need to list the variables with "Device" for the type:

in PHP:

function list_args()

{

    create_var_def('my_device');

}

in Python:

from msa_sdk.variables import Variables

TaskVariables = Variables()

TaskVariables.add('my_device')

A very common use of the type Device is for automating configuration (or any other automated action) over a list of managed entities.

You can do that by creating an array variable with the type Device and looping through the array in the task.

from msa_sdk.variables import Variables

from msa_sdk.msa_api import MSA_API

from msa_sdk import util

dev_var = Variables()

dev_var.add('me_list.0.id') (1)

context = Variables.task_call(dev_var)

process_id = context['SERVICEINSTANCEID'] (2)

me_list = context['me_list'] (3)

for me_id in me_list: (4)

    util.log_to_process_file(process_id, me_id['id']) (5)

ret = MSA_API.process_content('ENDED', 'Task OK', context, True)

print(ret)

| 1 | declare the array variable to be displayed in UI |

| 2 | read the current process ID |

| 3 | read the list of managed entities selected by the user on the UI |

| 4 | loop through the list and print each managed entity ID in the process log file |

| 5 | print the managed entity identifier in the process log file |

function list_args()

{

create_var_def('devices.0.id');

}

// read the ID of the selected managed entity

$devices = $context['devices'];

foreach ($devices as $device) {

$device_id = $device['id'];

logToFile("update device $device_id");

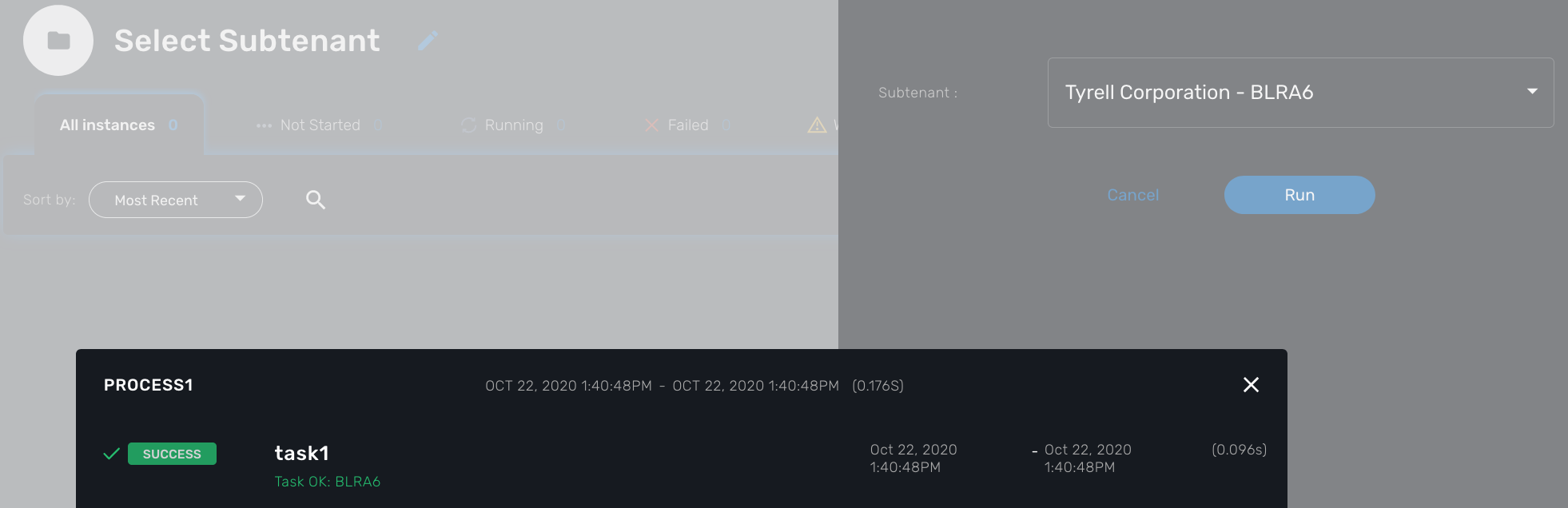

}Subtenant

This type will allow the user to select a subtenant and use the subtenant ID from the workflow instance context in the task.

The source code below will let the user select a subtenant and display the subtenant ID on the execution console

<?php

require_once '/opt/fmc_repository/Process/Reference/Common/common.php';

function list_args()

{

create_var_def('subtenant'); (1)

}

$subtenant = $context['subtenant']; (2)

task_success('Task OK: '.$subtenant); (3)

?>

| 1 | declare the variable subtenant to be displayed in the user form |

| 2 | read the variable value from the context |

| 3 | print the value on the execution console |

If you need to select multiple subtenants, you have to create an array variable with the type Customer.

With the variable $params.subtenants.0.id typed as Customer, the code below will ask the user to select one or more subtenants, print the identifier of each one in the process log file and display the number of subtenants selected on the UI.

<?php

require_once '/opt/fmc_repository/Process/Reference/Common/common.php';

function list_args()

{

create_var_def('subtenants.0.id');

}

$subtenants = $context['subtenants'];

foreach ($subtenants as $subtenant) { (1)

logToFile("subtenant: ".$subtenant['id']); (2)

}

task_success('Task OK: '.sizeof($subtenants)." subtenant selected");

?>

| 1 | loop through the list of subtenants |

| 2 | log the value in the process log file |

| the code for iterating over an array of managed entities is very similar |

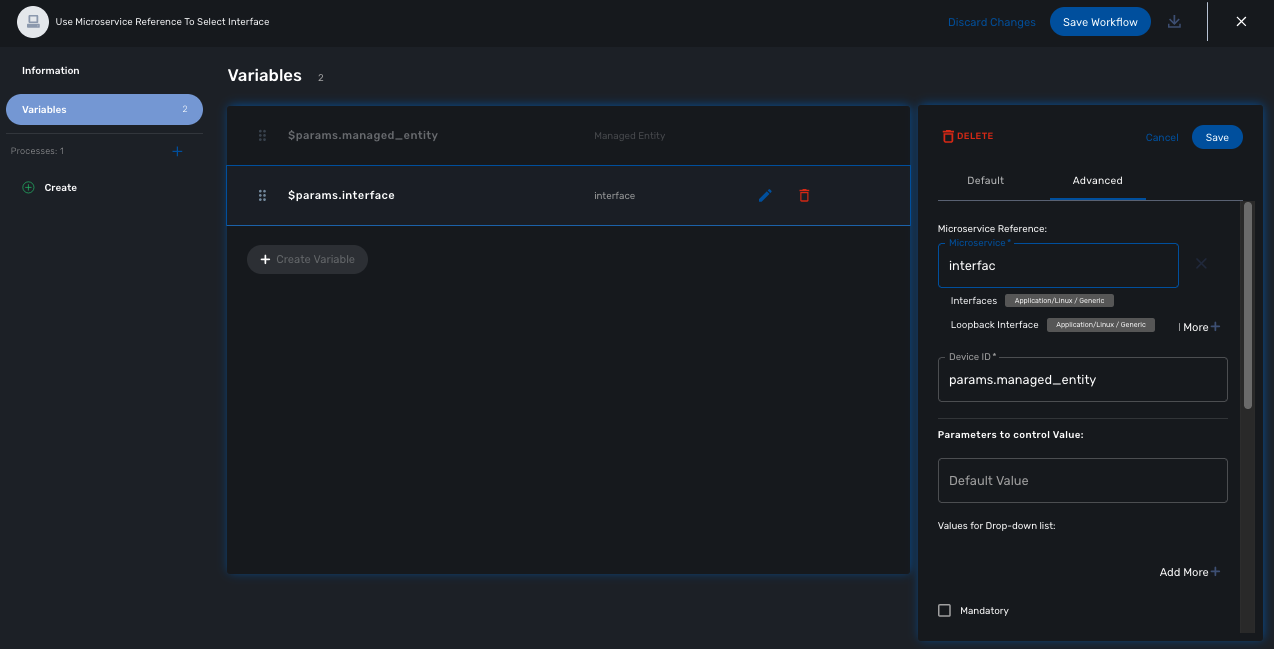

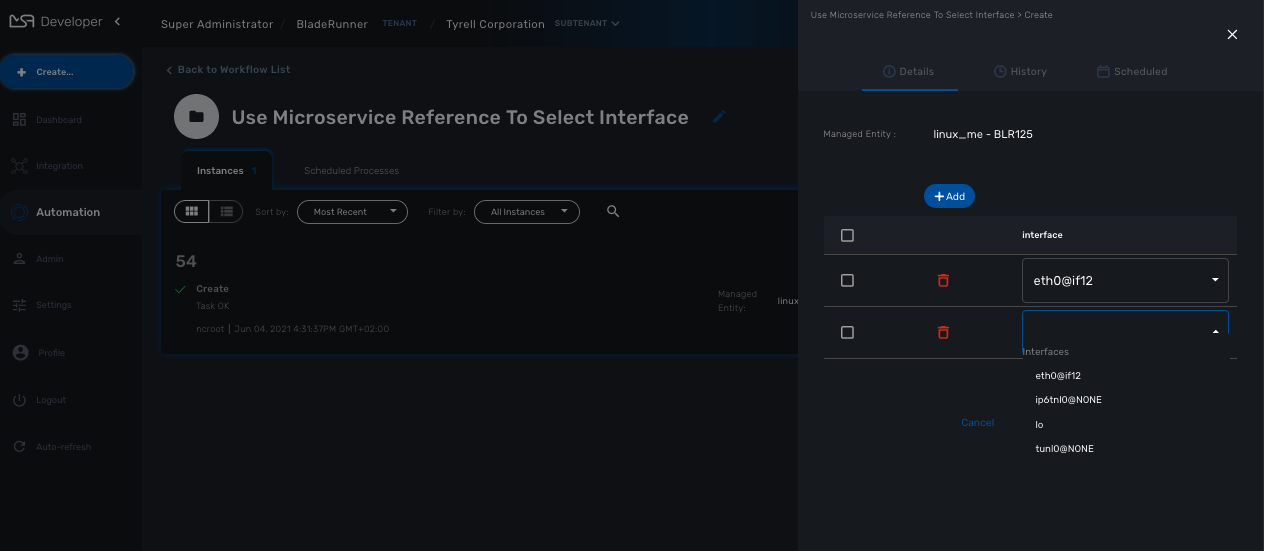

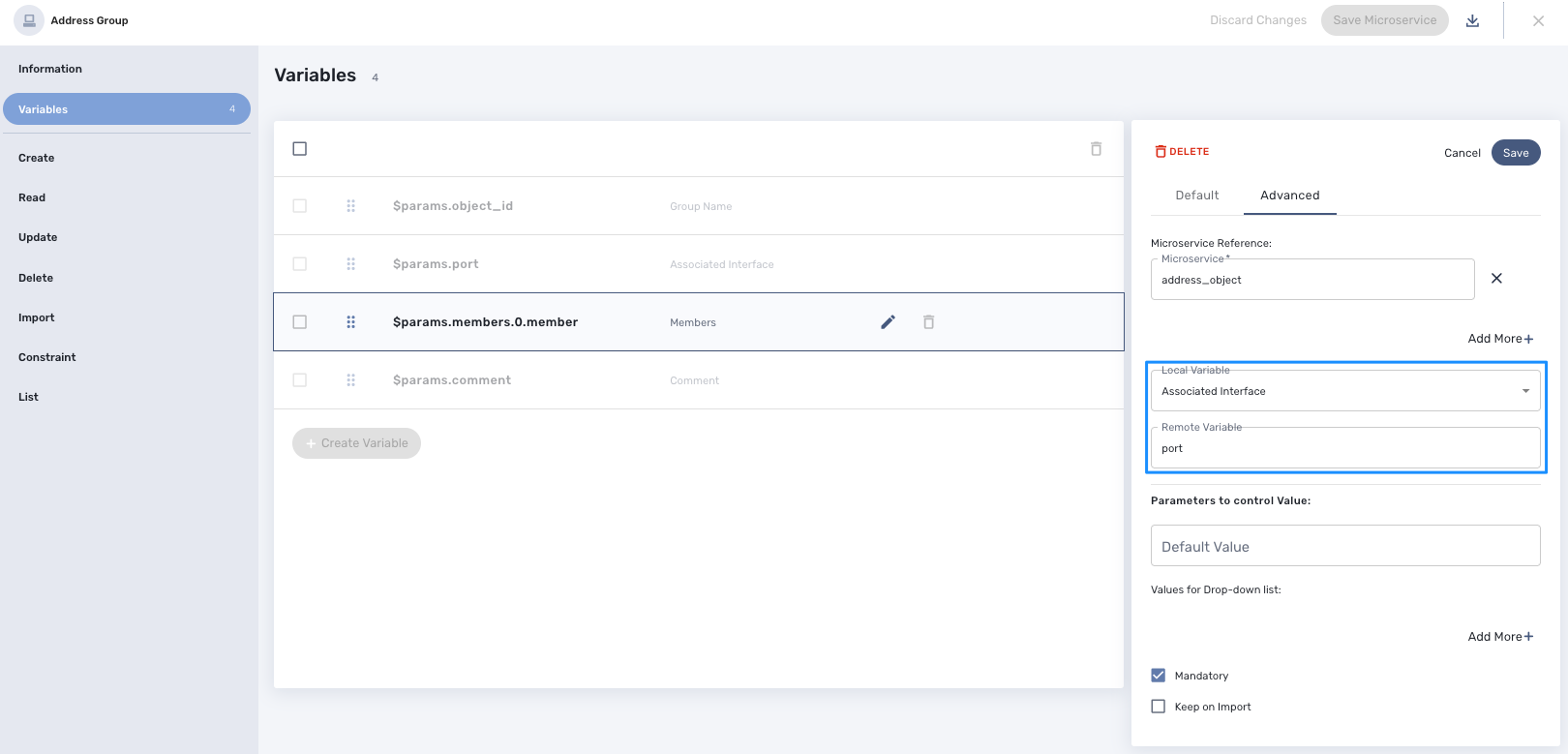

Microservice reference

This type is key when integrating workflows and microservices.

It allows you to import and use the microservice instance data from a managed entity in your automation code.

To use this type you need 2 variables:

- a variable with the type Managed Entity to select the managed entity to get the data from

- a variable with the type Microservice Reference to select the microservice that will pull the data

When creating a variable typed Microservice Reference you need to select the Managed Entity variable to use and the microservice that will act as the data source.

| the microservice must be attached to the managed entity with a deployment setting in order for the microservice reference to work. |

In the example below, the variable $params.interface is typed as Microservice Reference.

In the "Advanced" tab, the field "Microservice Reference" references one or several microservices and the field "Device ID" references a managed entity.

from msa_sdk.variables import Variables

from msa_sdk.msa_api import MSA_API

dev_var = Variables()

dev_var.add('managed_entity')

dev_var.add('interface.0.name')

context = Variables.task_call(dev_var)

ret = MSA_API.process_content('ENDED', 'Task OK', context, True)

print(ret)

It is also possible to use an array to select multiple values from the microservice:

from msa_sdk.variables import Variables

from msa_sdk.msa_api import MSA_API

dev_var = Variables()

dev_var.add('managed_entity', var_type='Device')

dev_var.add('interface.0.name', var_type='OBMFRef') (1)

context = Variables.task_call(dev_var)

ret = MSA_API.process_content('ENDED', 'Task OK', context, True)

print(ret)

| 1 | Use a variable array to allow multiple value selection |

Workflow reference

This type is useful for referencing a workflow from another one.

Display Name

The display value for the variable name.

Description

An optional description of this variable.

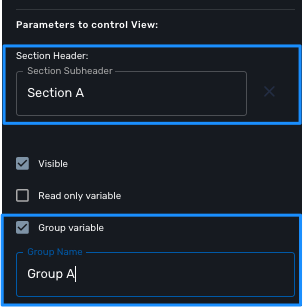

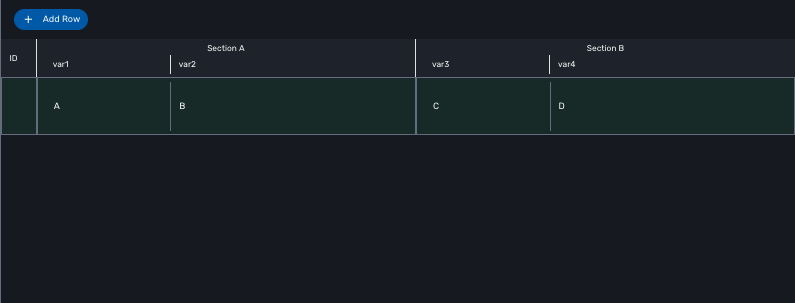

Advanced settings

Depending on the selected type, some advanced parameters may differ.

| Setting | Description |

|---|---|

Default Value |

the default value that will be used when creating a new workflow instance |

Values for Drop-down |

a list of possible value the user can choose from |

Mandatory |

a value has to be provided for this variable |

Read only variable |

the value cannot be edited |

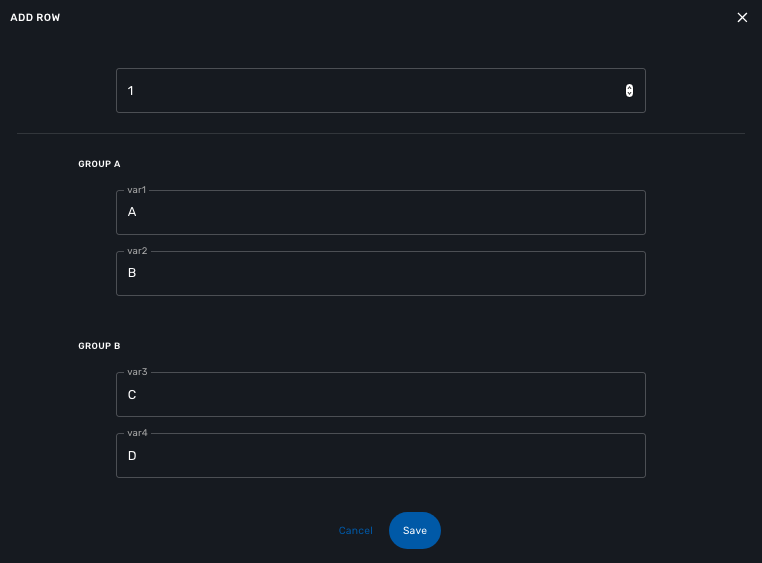

Section Header |

group some variables in the workflow console (see below). |

Show only in edit view |

hide the variable from the workflow console |

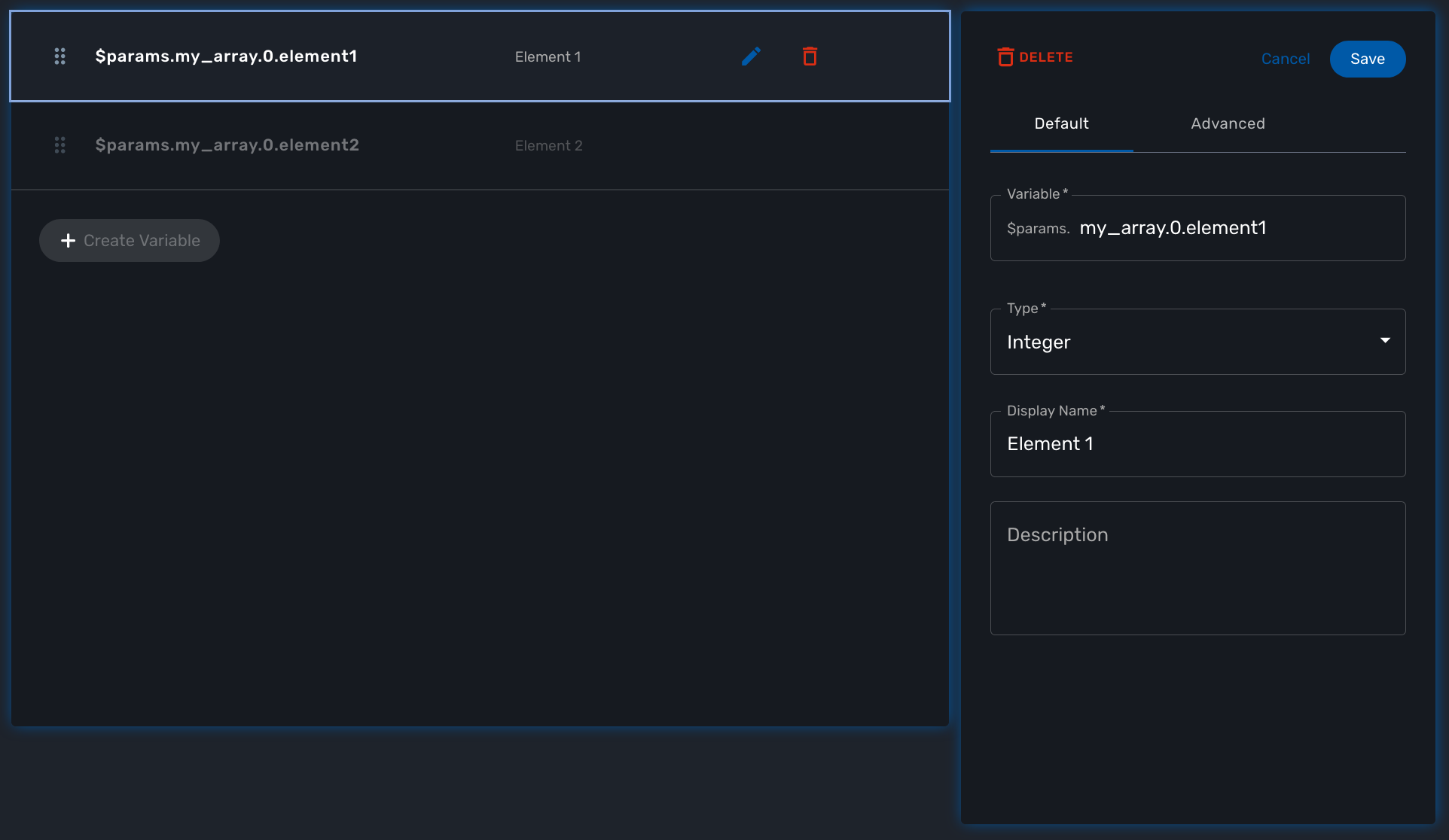

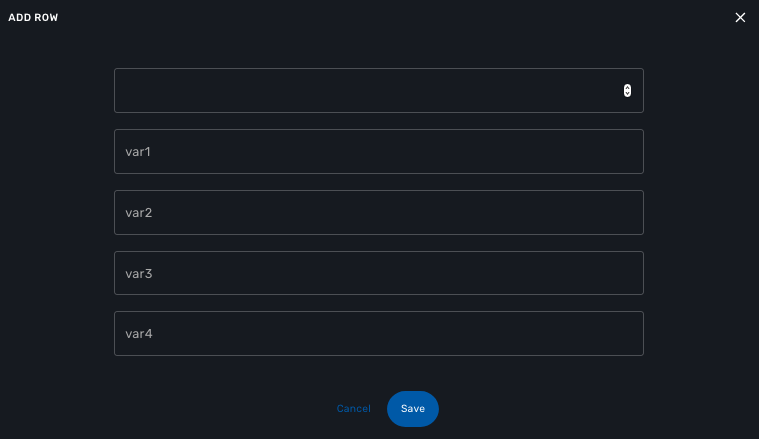

Array settings

When you are dealing with variable arrays, these options will let you control the possible actions a user can have over the array.

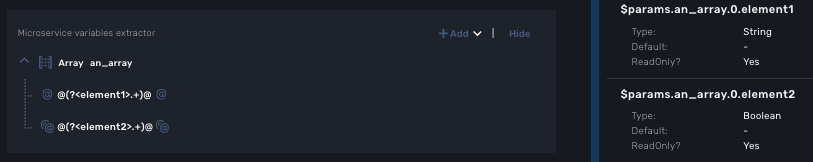

Variable arrays

To create a variable array, you need to follow a precise naming convention: $params.<ARRAY NAME>.0.<ELEMENT NAME>. The 0 is the separator that tells the UI and the configuration engine that this variable is an array.
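To make the naming convention concrete, the sketch below groups flat variable names of the form `<ARRAY NAME>.<INDEX>.<ELEMENT NAME>` into lists of rows, which is the shape the task examples read from the context (for instance `context['me_list']` as a list of entries with an `id`). It is an illustration only: `group_array_values` is a hypothetical helper, and how the UI and the engine actually store array values is not described here.

```python
import re

# <array name>.<index>.<element name>, for example "me_list.0.id"
ARRAY_NAME = re.compile(r"^(?P<array>\w+)\.(?P<index>\d+)\.(?P<element>\w+)$")


def group_array_values(flat_values):
    """Group flat "array.index.element" names into lists of row dictionaries."""
    scalars = {}
    arrays = {}
    for name, value in flat_values.items():
        match = ARRAY_NAME.match(name)
        if match is None:
            scalars[name] = value  # not an array variable
        else:
            rows = arrays.setdefault(match["array"], {})
            rows.setdefault(int(match["index"]), {})[match["element"]] = value
    result = dict(scalars)
    for array, rows in arrays.items():
        result[array] = [rows[index] for index in sorted(rows)]
    return result


grouped = group_array_values({
    "name": "fw",
    "me_list.0.id": "UBI123",
    "me_list.1.id": "UBI124",
})
print(grouped)
```

The identifiers `UBI123` and `UBI124` are made-up sample values.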

Getting Started Developing Workflows in PHP

Overview

This tutorial walks you through the design and development of your first workflow.

Workflows can be used to automate a wide variety of tasks and processes, such as:

- Service configuration chaining

- VNF lifecycle management

- Configuration audit and verification

- Automated customer on-boarding

- …

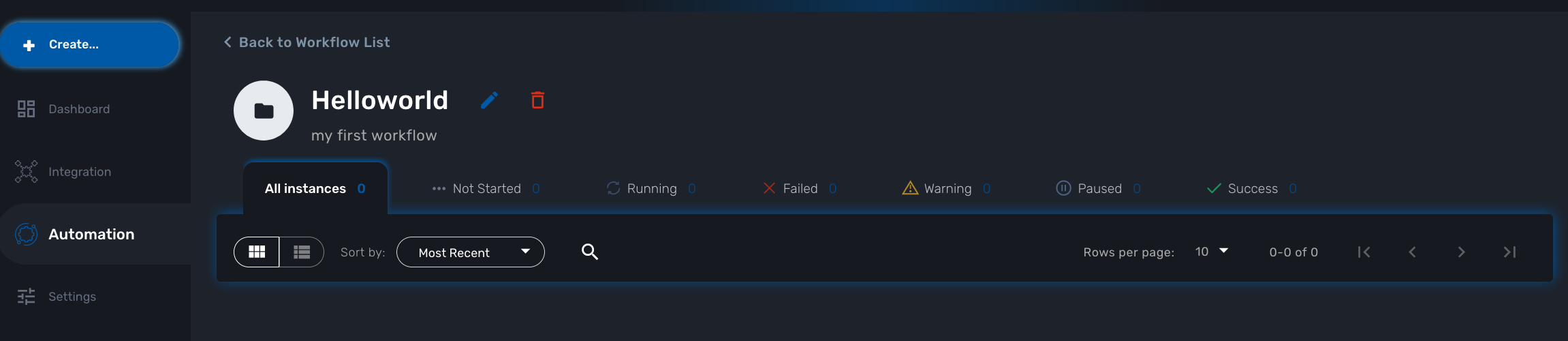

The "Helloworld" Workflow

As an example, we’ll use the "Helloworld" workflow. The "Helloworld" workflow takes a name as an input parameter and displays a message to the user ("Hello NAME").

This workflow is composed of 3 processes: one to create the new instance of the workflow, one to enter the name and print it, and one to delete the instance.

Create a new Workflow

From the Developer dashboard click on "+ Create"

In the tab "Information", set a name, a description and set the Workflow variable name to service_id and save your workflow.

Create a variable "Name" in the tab "Variable".

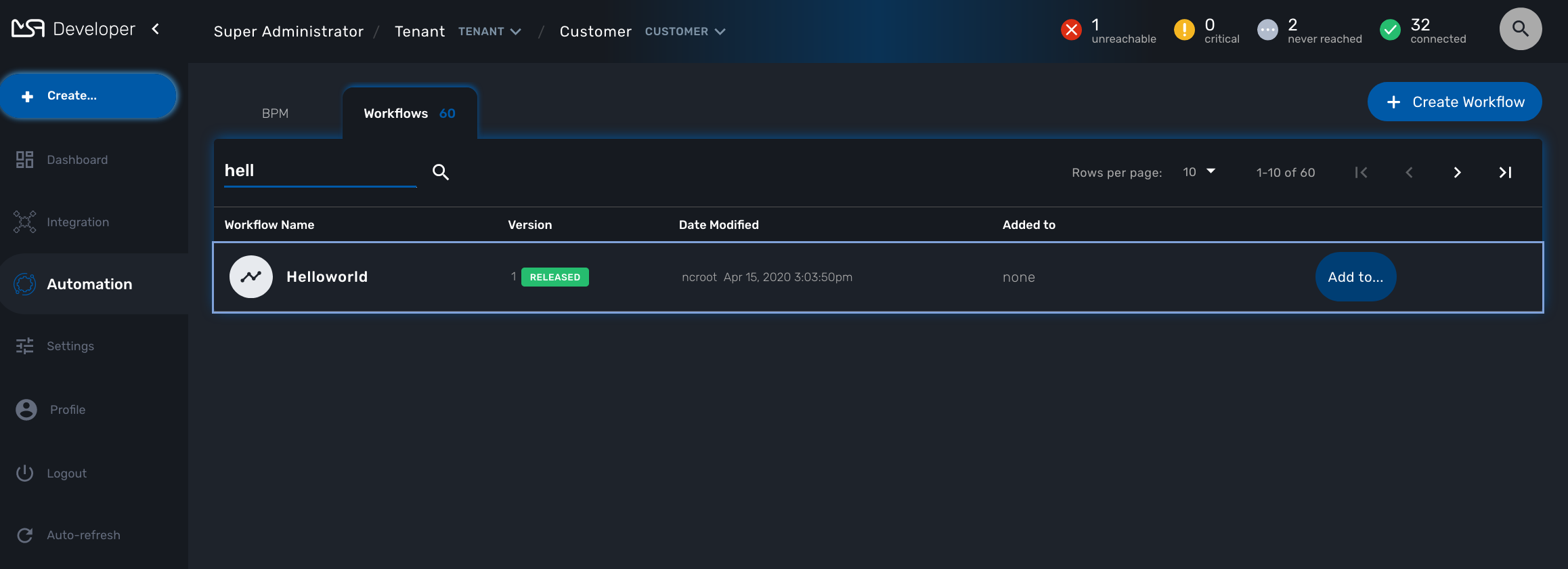

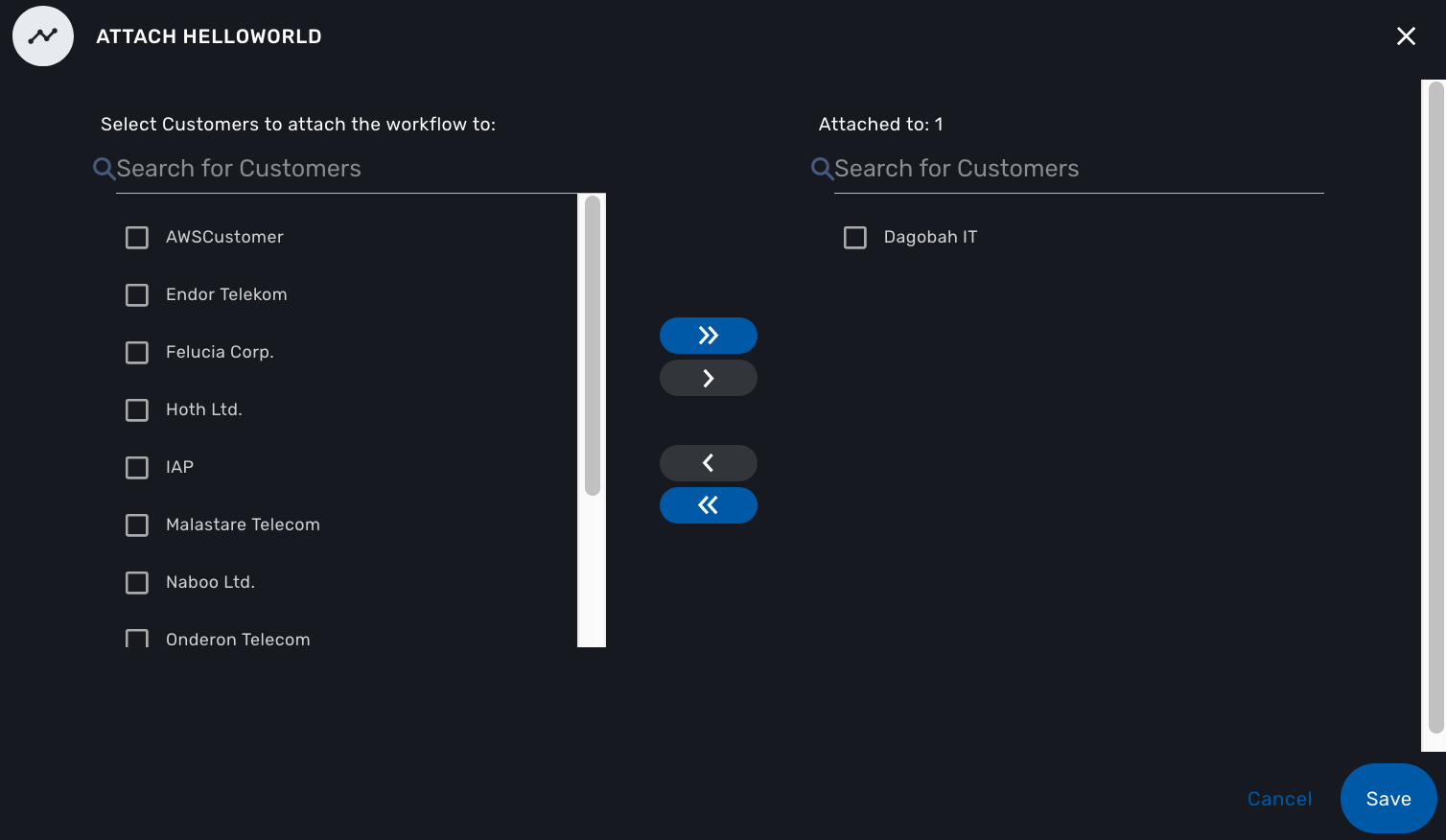

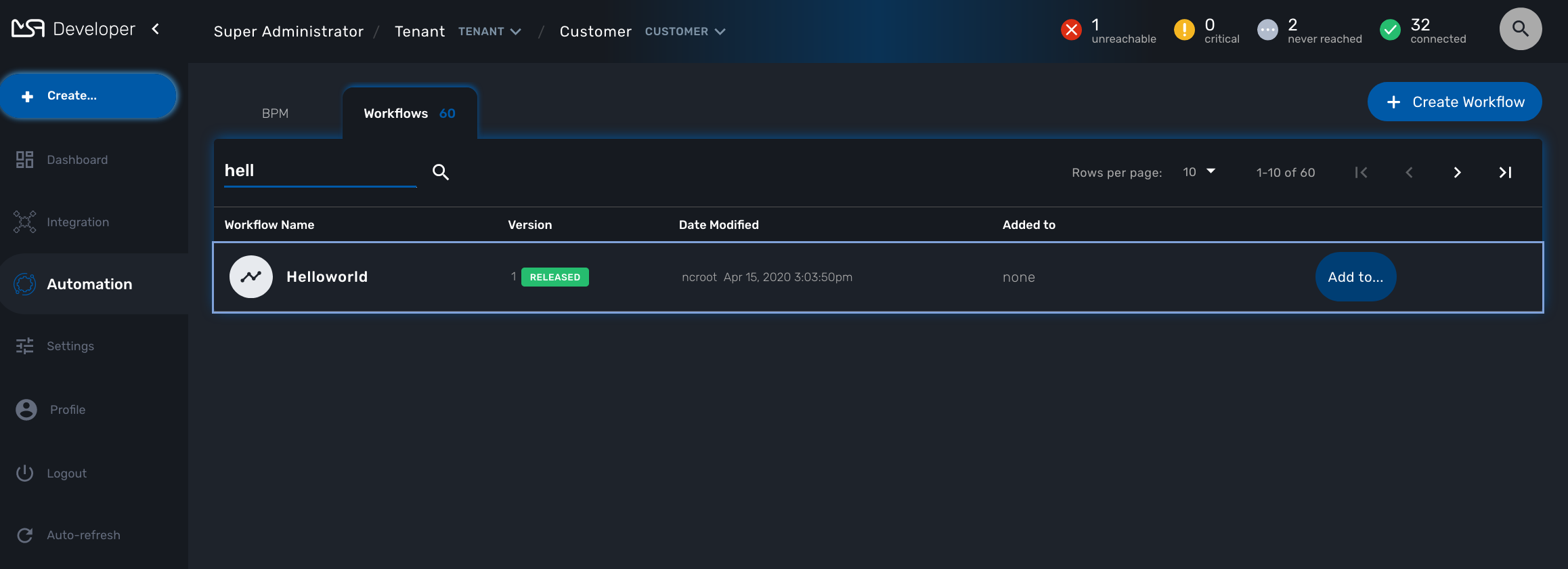

To start testing your workflow, you need to associate it to a customer. Make sure that you have no tenant selected and go to the "Automation" section: you should see your workflow in the list.

You can use the magnifier to search for it.

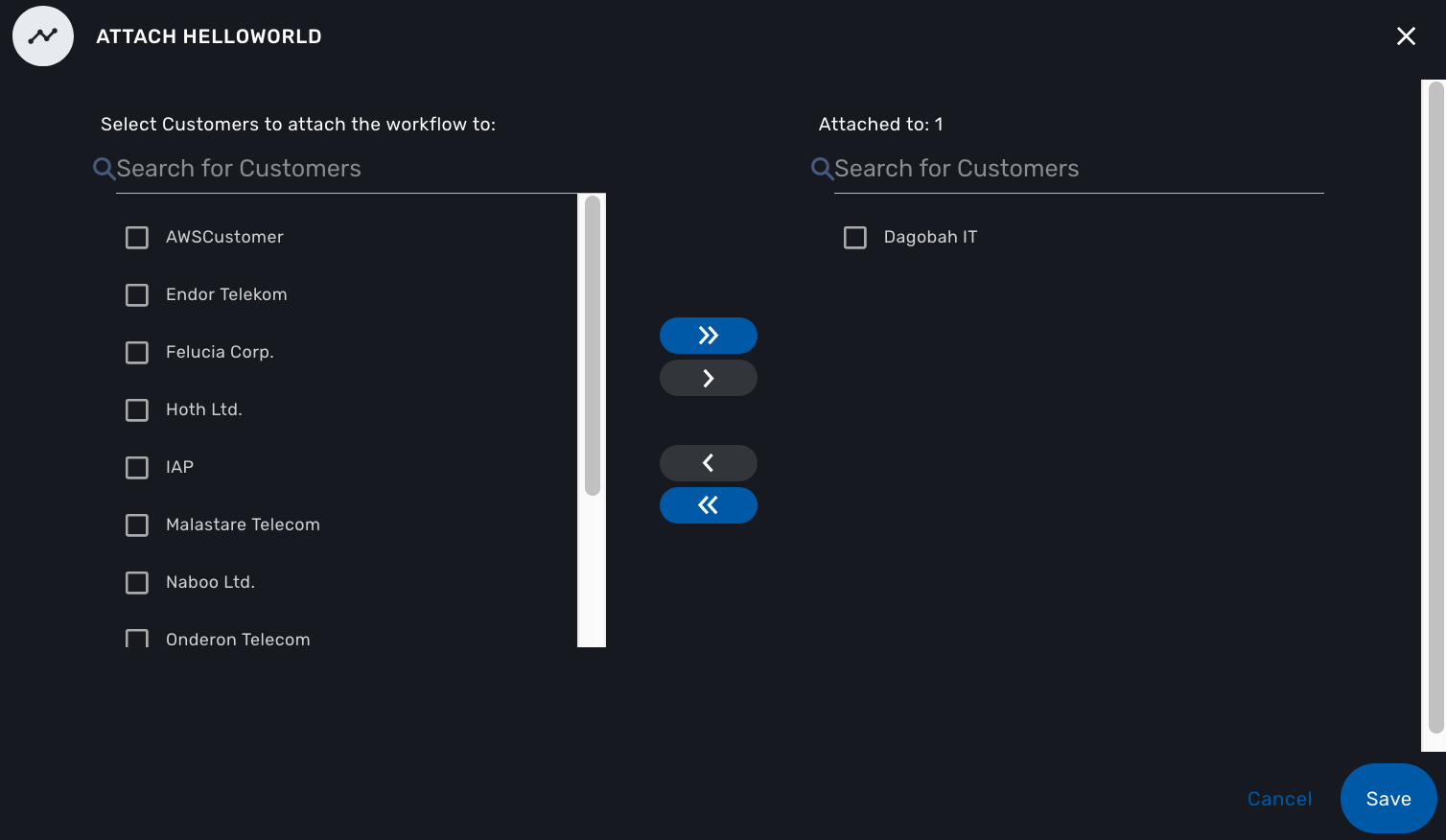

Use the link "Add to.." to associate the workflow to a customer.

Select the customer to use for designing and testing the workflow.

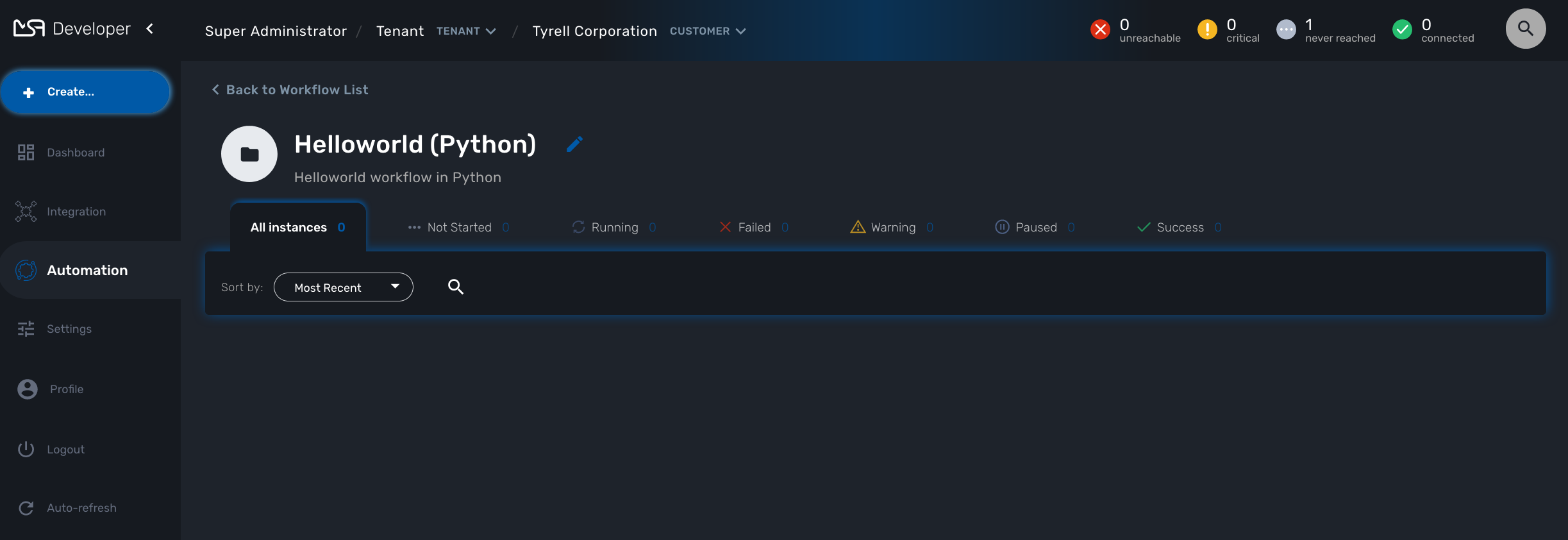

Once done, you can select your customer, list its workflows and edit your workflow with the pencil icon.

Create the Processes

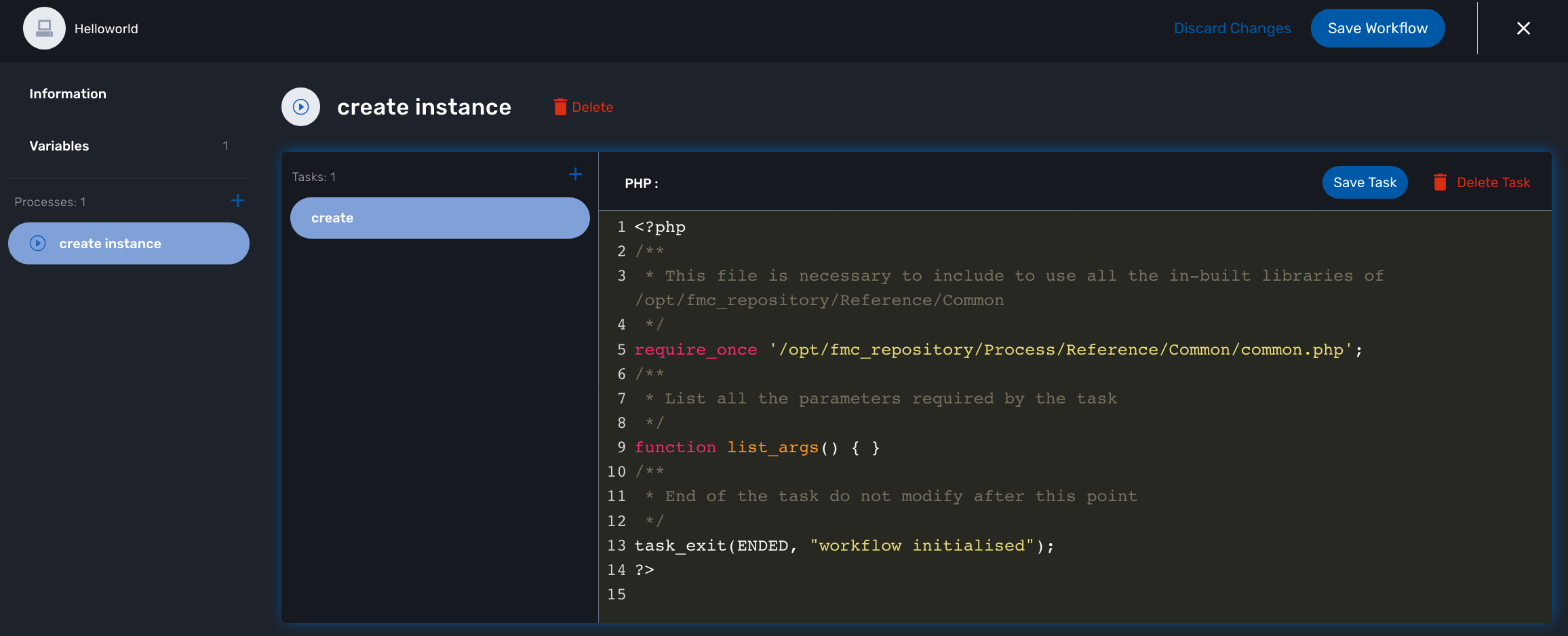

The "create instance" Process

In order to be used, every Workflow should be instantiated first. This is the role of the process with the type "Create".

| Even though a single "CREATE" process is sufficient for most use cases, it is possible to have several "CREATE" processes to support various ways of creating the Workflow instance (you can relate that to having several object constructors in an OOP language such as Java). |

For this tutorial you will create one process named "create instance" and add one task to this process. This task will simply display a message to the process execution console.

<?php

/**

* This file must be included to use the built-in libraries of /opt/fmc_repository/Process/Reference/Common

*/

require_once '/opt/fmc_repository/Process/Reference/Common/common.php';

/**

* List all the parameters required by the task

*/

function list_args() { }

/**

* End of the task do not modify after this point

*/

task_exit(ENDED, "workflow initialised");

?>

Once done, save the Workflow.

The "delete instance" Process

Follow the same steps as in the "create instance" process, but make sure that the type of the process is set to "DELETE", instead of "CREATE".

| In our case we only need the instance to be deleted, so no Task needs to be added to this Process. In a real-world use case, your DELETE process will probably take care of removing or cleaning up some parts of your managed system. |

The "print message" Process

For the print process, use the process type "UPDATE". It will take one parameter that will be used to print your message. Use the code below to create a task that will read the name from the user form and print it in the live console.

<?php

/**

* This file must be included to use the built-in libraries of /opt/fmc_repository/Process/Reference/Common

*/

require_once '/opt/fmc_repository/Process/Reference/Common/common.php';

/**

* List all the parameters required by the task

*/

function list_args()

{

create_var_def('name', 'String');

}

check_mandatory_param('name');

/**

* get the value of name from the context and create a variable out of it

*/

$name=$context['name'];

/**

* print the value in the log file /opt/jboss/latest/log/process.log

*/

logToFile($name);

/**

* End of the task do not modify after this point

*/

task_exit(ENDED, "Hello " . $name);

?>

Test the Workflow

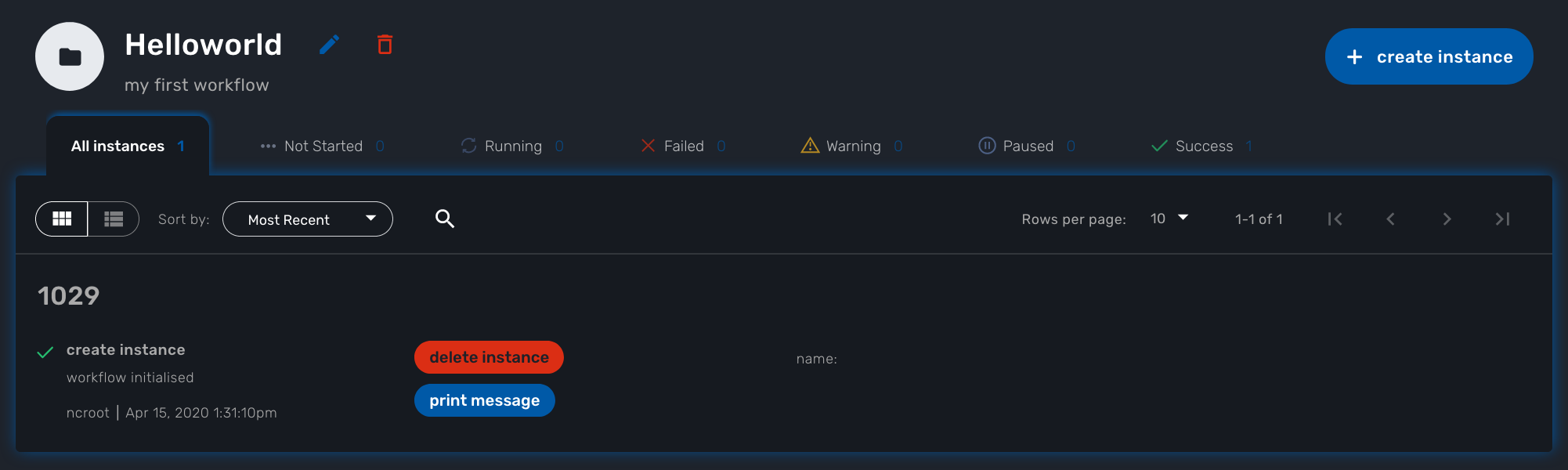

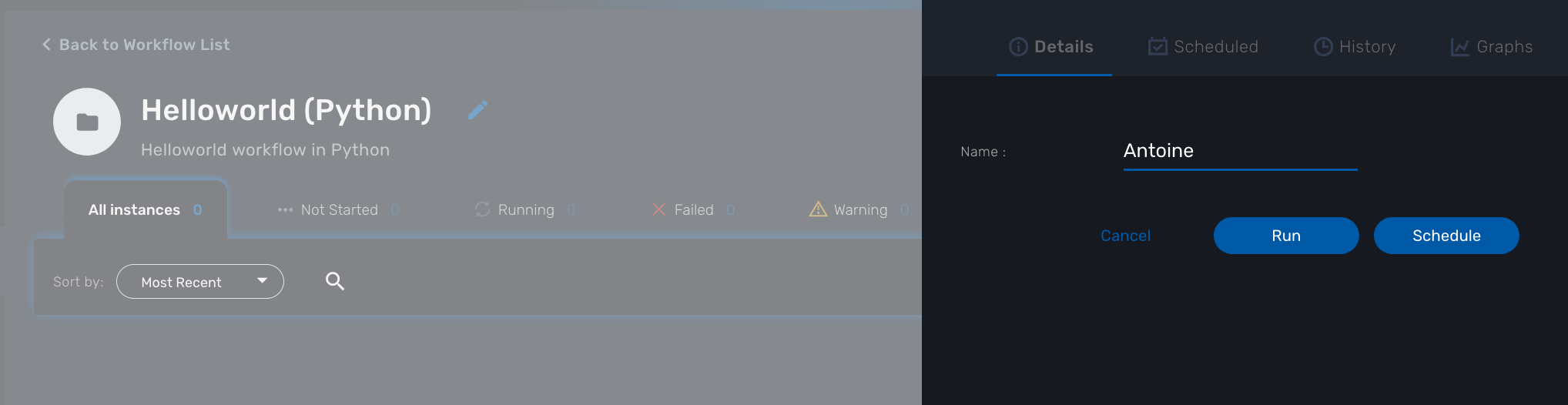

Before you can test the workflow and execute some processes, you need to attach the workflow to your current subtenant.

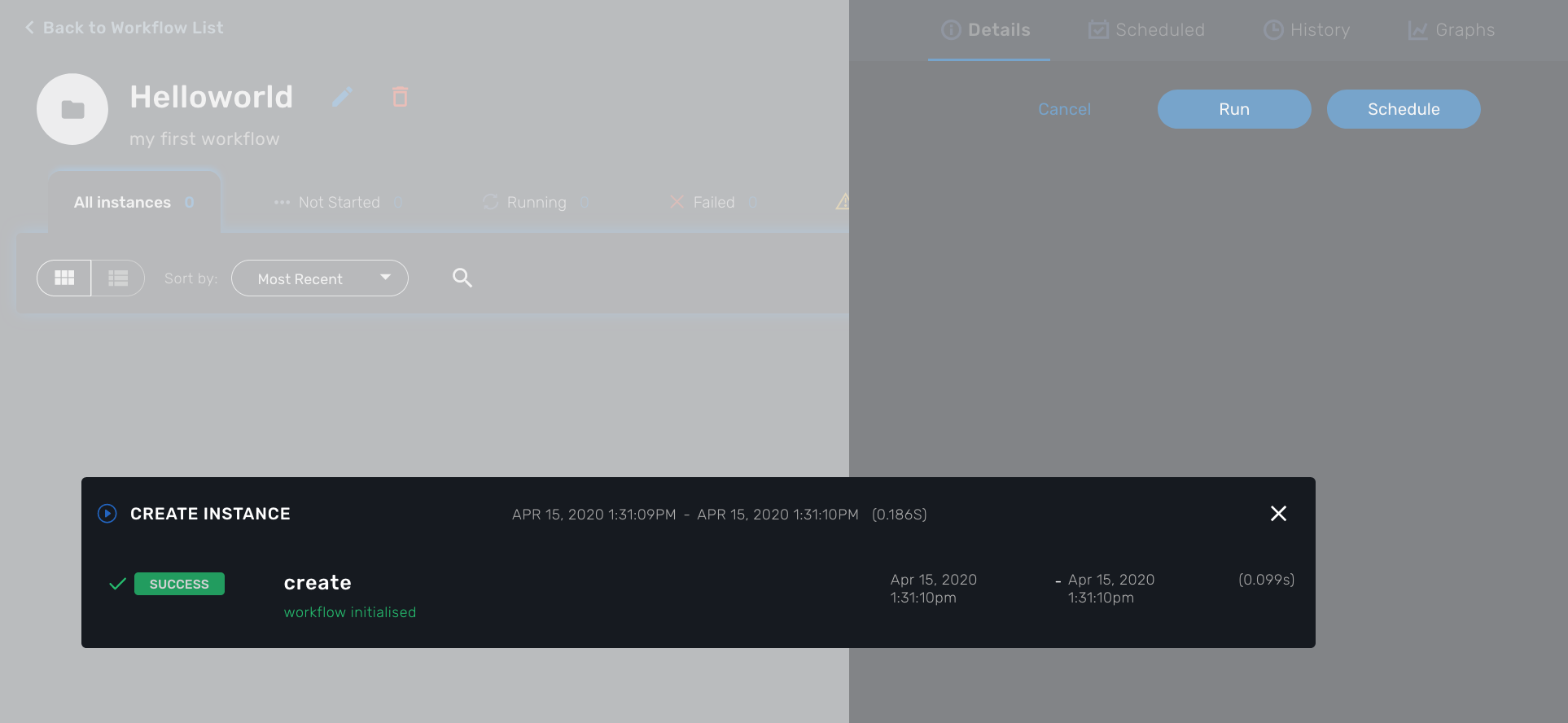

Use the "+ create instance" action to execute the "create instance" process and create a new instance of your workflow.

A new instance is available and you can execute the process "print message".

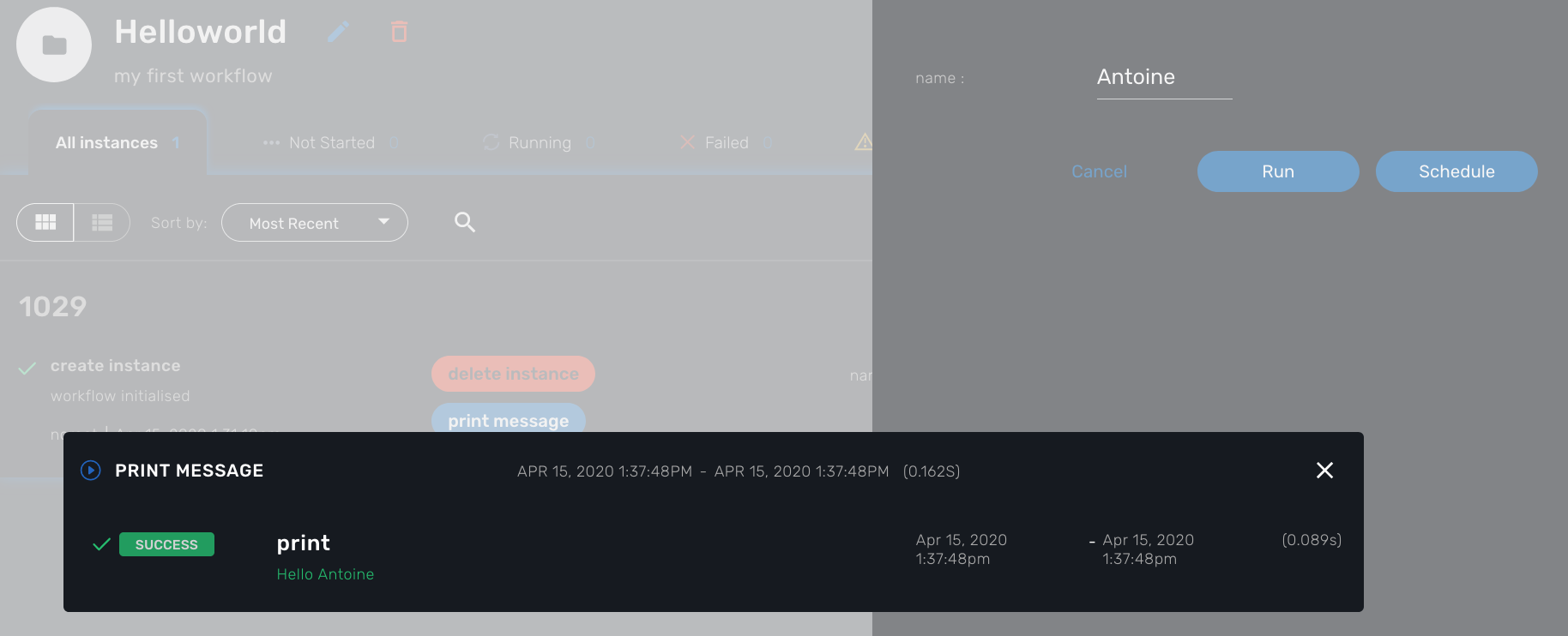

The process "print message" will start and execute its tasks sequentially.

The name will be displayed in the task execution status popup, below the name of the task.

Getting Started Developing Workflows in Python

Overview

This tutorial walks you through the design and development of your first workflow in Python. It is similar to the Helloworld example in PHP.

Workflows can be used to automate a wide variety of tasks and processes, such as:

-

Service configuration chaining

-

VNF lifecycle management

-

Configuration audit and verification

-

Automated customer on-boarding

-

…

The "Helloworld" Workflow

As an example, we’ll use the "Helloworld" workflow. It takes a name as an input parameter and displays a message to the user ("Hello NAME").

This workflow is composed of 3 processes: one to create the new instance of the workflow, one to enter the name and print it, and one to delete the instance.

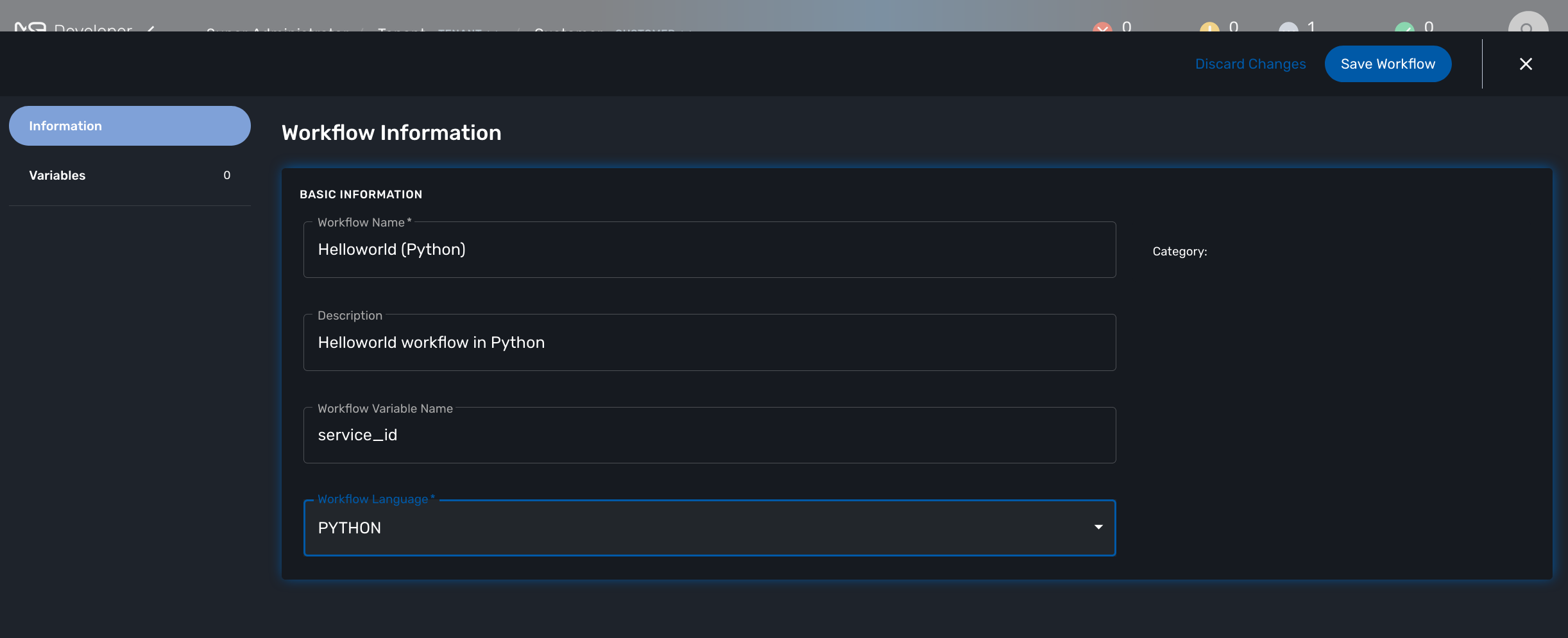

Create a New Workflow

From the Developer dashboard click on "+ Create"

In the tab "Information", set a name, a description and set the Workflow variable name to service_id and save your workflow.

Create a variable "Name" in the tab "Variable".

To start testing your workflow, you need to associate it with a customer. Make sure that no tenant is selected, then go to the "Automation" section. Your workflow should appear in the list.

You can use the magnifier to search for it.

Use the link "Add to.." to associate the workflow to a customer.

Select the customer to use for designing and testing the workflow.

Once done, you can select your customer, list its workflows and edit it with the pencil icon.

Create the Processes

The "create instance" Process

In order to be used, every Workflow should be instantiated first. This is the role of the process with the type "Create".

| Even though a single "CREATE" process is sufficient for most use cases, it is possible to have several "CREATE" processes to support various ways of creating the Workflow instance (you can relate that to having several object constructors in an OOP language such as Java). |

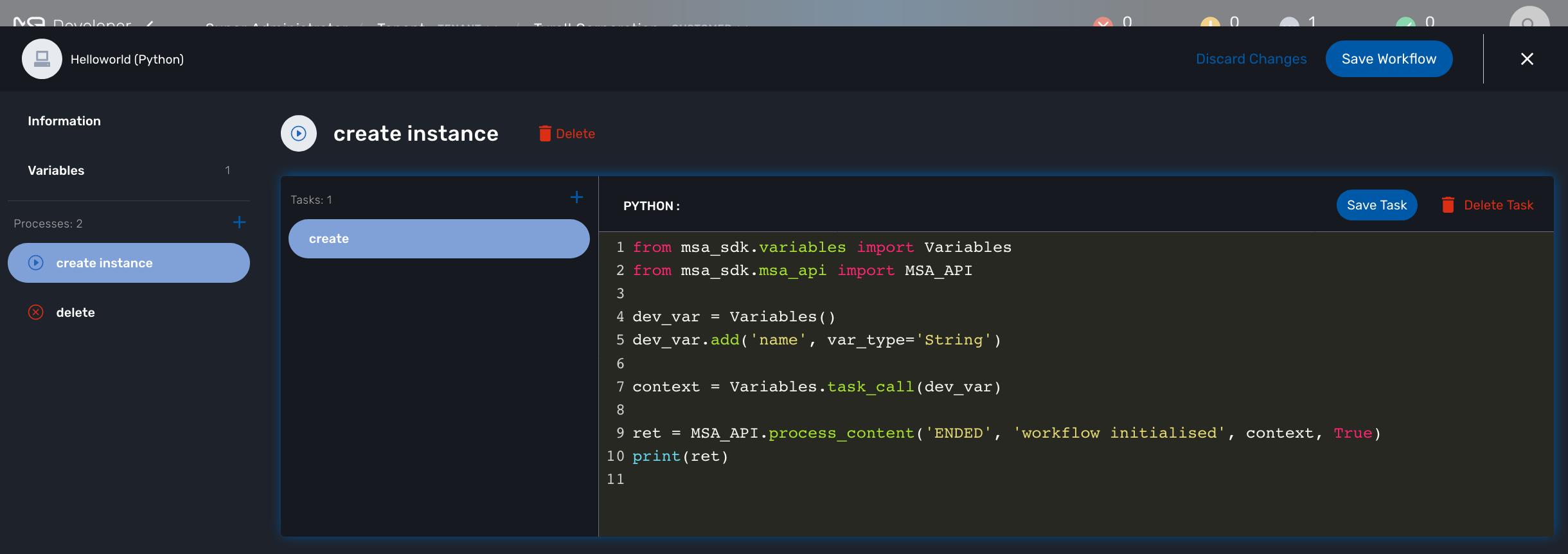

For this tutorial you will create one process named "create instance" and add one task to this process. This task will simply display a message to the process execution console.

from msa_sdk.variables import Variables

from msa_sdk.msa_api import MSA_API

dev_var = Variables()

dev_var.add('name', var_type='String')

context = Variables.task_call(dev_var)

ret = MSA_API.process_content('ENDED', 'workflow initialized', context, True)

print(ret)

Once done, save the Workflow.

The "delete instance" Process

Follow the same steps as in the "create instance" process, but make sure that the type of the process is set to "DELETE", instead of "CREATE".

| In our case we only need the instance to be deleted, so no Task needs to be added to this Process. In a real-world use case, your DELETE process will probably take care of removing or cleaning up some parts of your managed system. |

The "print message" Process

For the print process, use the process type "UPDATE". It will take one parameter that will be used to print your message. Use the code below to create a task that will read the name from the user form and print it in the live console.

from msa_sdk.variables import Variables

from msa_sdk.msa_api import MSA_API

context = Variables.task_call()

my_name = context['name']

ret = MSA_API.process_content('ENDED', f'Hello {my_name}', context, True)

print(ret)

Test the Workflow

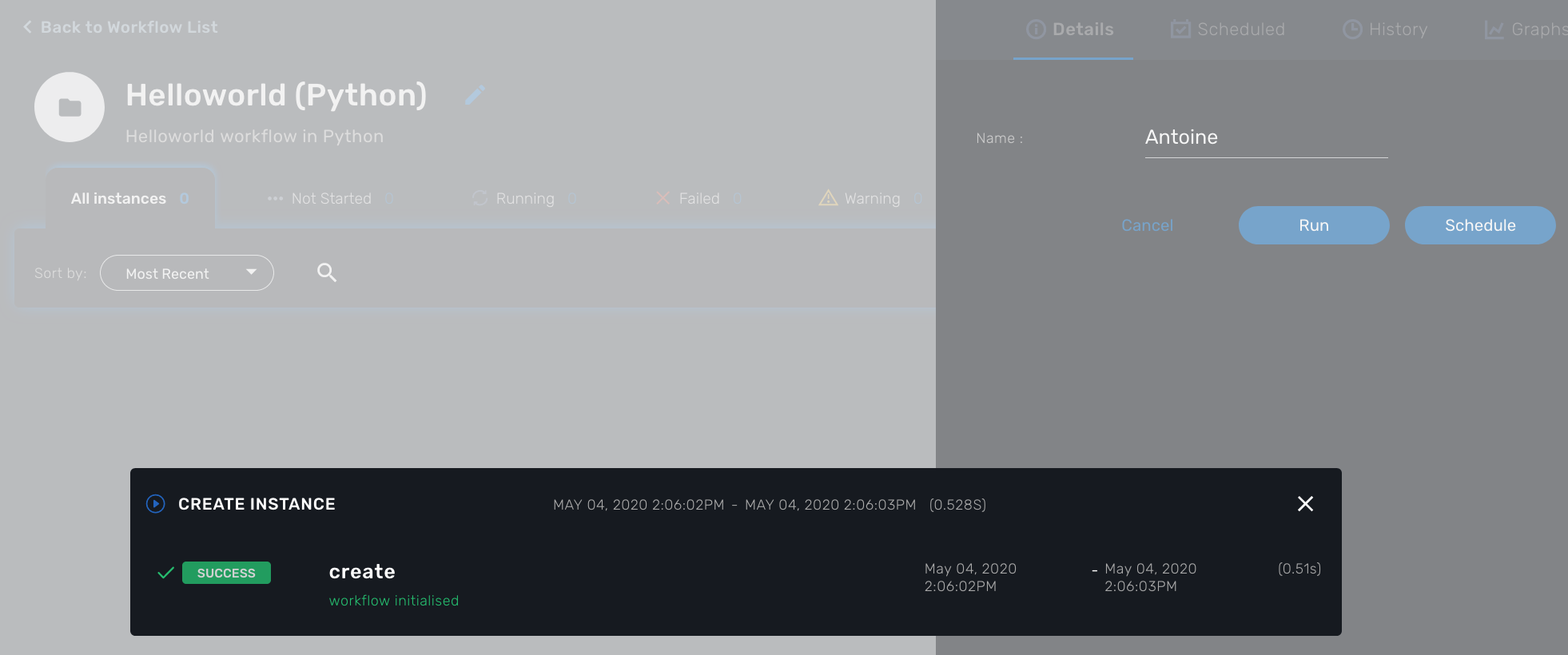

Use the "+ create instance" action to execute the "create instance" process and create a new instance of your workflow.

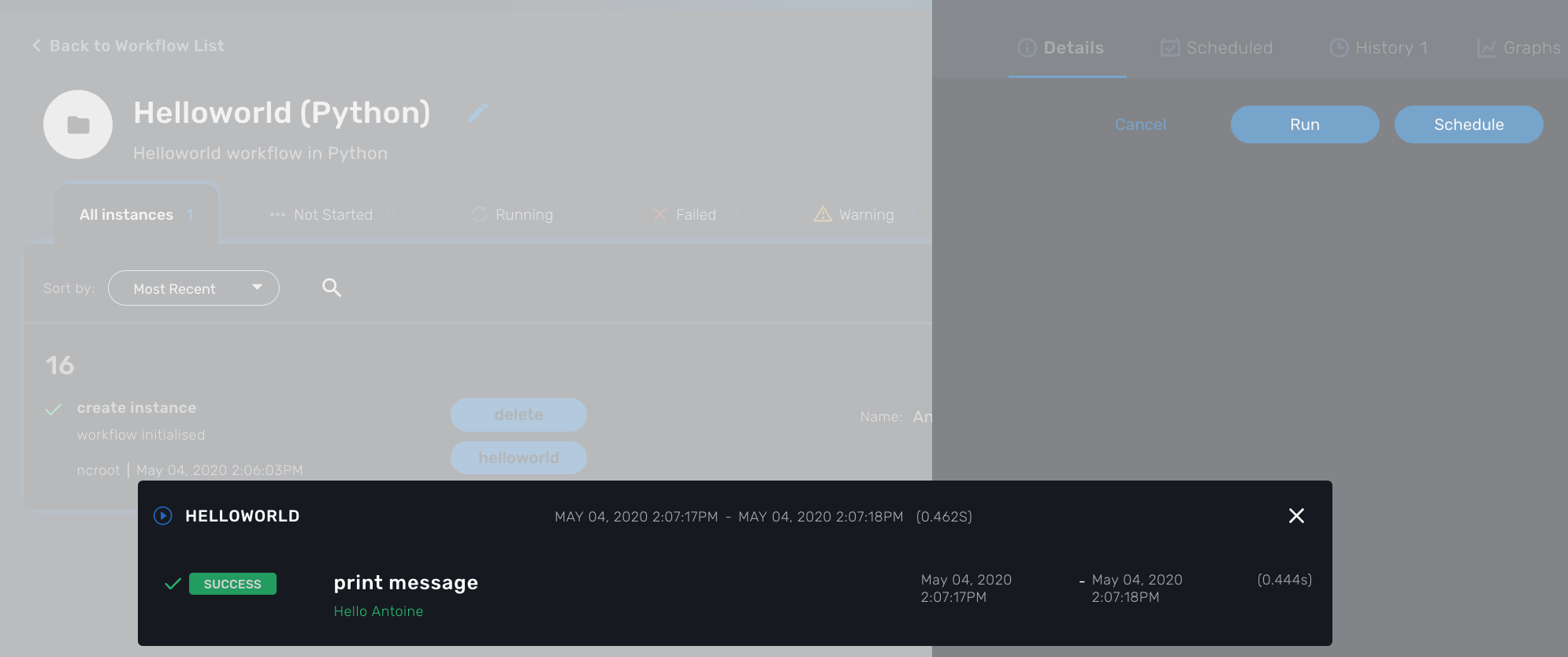

A new instance is available and you can execute the process "print message".

The process "print message" will start and execute its tasks sequentially.

The name will be displayed in the task execution status popup, below the name of the task.

Python SDK

The MSactivator™ provides a Python SDK dedicated to developing automation workflows.

Overview

This SDK provides a set of functions to call the MSactivator™ REST API and automate actions on the MSactivator™, such as creating and activating managed entities, calling microservice functions, or calling processes from other workflows.

The SDK API documentation is available online on your MSactivator™ instance at: https://localhost/msa_sdk

Contribution

The source code of the SDK is available on GitHub.

Code samples

Sample implementation of a SDK function.

This code sample is an example of the implementation of a Python SDK function to create a new managed entity.

import json

from msa_sdk.msa_api import MSA_API


class Device(MSA_API):
    # Excerpt: the constructor, which sets the attributes used below
    # (including self.api_path = "/device"), is omitted.

    def create(self):
        self.action = 'Create device'
        self.path = '{}/v2/{}'.format(self.api_path, self.customer_id)  # (1)

        data = {"manufacturerId": self.manufacturer_id,
                "modelId": self.model_id,
                "managementAddress": self.management_address,
                "reporting": self.reporting,
                "useNat": self.use_nat,
                "logEnabled": self.log_enabled,
                "logMoreEnabled": self.log_more_enabled,
                "managementInterface": self.management_interface,
                "mailAlerting": self.mail_alerting,
                "passwordAdmin": self.password_admin,
                "externalReference": self.device_external,
                "login": self.login,
                "name": self.name,
                "password": self.password,
                "id": 0,
                "snmpCommunity": self.snmp_community}

        # The management port is only sent when it is set
        if self.management_port:
            data["managementPort"] = self.management_port

        self.call_post(data)  # (2)
        self.fail = not self.response.ok
        if self.response.ok:
            self.device_id = json.loads(self.content)['id']

        return json.loads(self.content)

| 1 | the REST API to call |

| 2 | post the data to the REST API |

Sample call of a function in a workflow task.

from msa_sdk.device import Device
from msa_sdk.variables import Variables
import json

dev_var = Variables()  # (1)
dev_var.add('customer_id')
dev_var.add('managed_device_name')
dev_var.add('manufacturer_id')
dev_var.add('model_id')
dev_var.add('device_ip_address')
dev_var.add('login')
dev_var.add('password')
dev_var.add('password_admin')

context = Variables.task_call(dev_var)

new_device = Device(context['customer_id'], context['managed_device_name'],
                    context['manufacturer_id'], context['model_id'],
                    context['login'], context['password'],
                    context['password_admin'], context['device_ip_address'])
new_device.create()  # (2)
context['device_id'] = new_device.device_id  # (3)

print(new_device.process_content('ENDED', 'Task OK', context, False))

| 1 | define the parameters to pass to the API |

| 2 | create the new managed entity |

| 3 | store the ID of the new managed entity in the workflow instance context |

Microservice functions

Call a microservice CREATE/UPDATE/DELETE function

from msa_sdk.variables import Variables
from msa_sdk.msa_api import MSA_API
from msa_sdk.order import Order
from msa_sdk.orchestration import Orchestration
from msa_sdk import util
import json

# Declare the task variable and load the workflow instance context
dev_var = Variables()
dev_var.add('me', var_type='Device')
context = Variables.task_call(dev_var)

me_id = context['me'][3:]  # (1)

micro_service_vars_array = {  # (2)
    "object_id": "12.1.1.1",
    "mask": "255.255.255.0",
    "gateway": "10.10.1.254"
}
object_id = "null"
route = {"routing": {object_id: micro_service_vars_array}}  # (3)

try:
    ms_order = Order(me_id)
    ms_order.command_execute('CREATE', route)  # (4)
except Exception as e:
    ret = MSA_API.process_content('FAILED', f'CREATE ERROR: {str(e)}', context, True)
    print(ret)

| 1 | Read the ID of the managed entity from the context, assuming the variable 'me' type is Device |

| 2 | Build the Microservice JSON params for the CREATE operation of the microservice. |

| 3 | The value of the key should match the Microservice file name (stripped of the .xml file extension) |

| 4 | Call the CREATE function of the routing microservice on the managed entity (use UPDATE or DELETE for the other operations) |

The function command_execute is defined in order.py
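The dictionary passed to command_execute has the same shape in every sample: the microservice name, then the object ID, then the variables. The sketch below shows one way to build it. build_ms_payload is a hypothetical helper name and is not part of the SDK; it only illustrates the structure.

```python
import json


def build_ms_payload(ms_name, object_id, variables):
    """Build the {microservice: {object_id: variables}} structure.

    Hypothetical helper, not part of msa_sdk. ms_name is expected to be
    the microservice file name; a trailing .xml extension is stripped.
    """
    if ms_name.endswith(".xml"):
        ms_name = ms_name[: -len(".xml")]
    return {ms_name: {object_id: dict(variables)}}


route_vars = {
    "object_id": "12.1.1.1",
    "mask": "255.255.255.0",
    "gateway": "10.10.1.254",
}
payload = build_ms_payload("routing.xml", "12.1.1.1", route_vars)
print(json.dumps(payload, indent=2))
```

The resulting dictionary can then be handed to command_execute in place of a hand-written literal.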

Call a microservice CREATE on multiple managed entities

from msa_sdk.variables import Variables
from msa_sdk.msa_api import MSA_API
from msa_sdk.order import Order
from msa_sdk import util

dev_var = Variables()
dev_var.add('object_id')
dev_var.add('service')
dev_var.add('src_ip')
dev_var.add('src_itf')
dev_var.add('dst_ip')
dev_var.add('dst_itf')
dev_var.add('firewalls.0.id')  # (1)

context = Variables.task_call(dev_var)

object_id = context['object_id']
micro_service_vars_array = {"object_id": context['object_id'],
                            "src_ip": context['src_ip'],
                            "src_mask": '255.255.255.255',
                            "dst_ip": context['dst_ip'],
                            "dst_mask": '255.255.255.255',
                            "src_itf": context['src_itf'],
                            "dst_itf": context['dst_itf'],
                            "action": 'deny',
                            "service": context['service']
                            }
simple_firewall = {"simple_firewall": {object_id: micro_service_vars_array}}

firewalls = context['firewalls']
for firewall in firewalls:
    # strip the 3-letter prefix of the managed entity reference
    devicelongid = firewall['id'][3:]
    try:
        order = Order(devicelongid)
        order.command_execute('CREATE', simple_firewall)
    except Exception as e:
        ret = MSA_API.process_content('FAILED', f'CREATE ERROR: {str(e)}', context, True)
        print(ret)

ret = MSA_API.process_content('ENDED',
                              'IPTABLES RULE INITIALIZED',
                              context, True)
print(ret)

| 1 | use a variable array typed as a Managed Entity |
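Both microservice samples obtain the managed entity ID by slicing the reference stored in the context. The sketch below isolates that step. It assumes references made of a three-letter prefix followed by digits (UBI123 is an illustrative value), and entity_db_id is a hypothetical helper name. Dropping the prefix, rather than keeping a fixed number of trailing characters, keeps the result correct for IDs of any length.

```python
def entity_db_id(reference, prefix_length=3):
    """Return the numeric part of a managed entity reference.

    Assumes the reference is a fixed-length prefix followed by digits,
    e.g. 'UBI123' -> '123' (illustrative value). Raises ValueError otherwise.
    """
    numeric_part = reference[prefix_length:]
    if not numeric_part.isdigit():
        raise ValueError(f"unexpected managed entity reference: {reference!r}")
    return numeric_part


firewalls = [{"id": "UBI123"}, {"id": "UBI4567"}]
ids = [entity_db_id(firewall["id"]) for firewall in firewalls]
print(ids)
```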

Call a microservice IMPORT function

from msa_sdk.variables import Variables
from msa_sdk.msa_api import MSA_API
from msa_sdk.order import Order
from msa_sdk.orchestration import Orchestration
from msa_sdk import util
import json

# 'context' and 'me_id' are assumed to be set as in the CREATE sample above
try:
    order = Order(me_id)  # (1)
    order.command_execute('IMPORT', {"routing": "0"})  # (2)
    order.command_objects_instances("routing")  # (3)
    ms_instances = json.loads(order.content)  # (4)
except Exception as e:
    ret = MSA_API.process_content('FAILED', f'IMPORT ERROR: {str(e)}', context, True)
    print(ret)

| 1 | initialize an Order object |

| 2 | execute the IMPORT of a microservice defined in a file routing.xml |

| 3 | get the microservice instances |

| 4 | store the instance in a variable to further reuse |

How to create your libraries of functions

When developing a workflow you will probably have to define some functions that will be used in multiple tasks.

To avoid code duplication and ease the maintenance of your workflow, one option is to create a folder named common at the same level as your task folders, containing a Python file common.py where the shared functions are defined.

my_workflow
  |- my_workflow.xml
  |- common
  |    |- common.py
  |- process_1
       |- task1.py

common.py contains the shared Python code and can import the Python SDK as well as other Python modules.

from msa_sdk.variables import Variables

from msa_sdk.msa_api import MSA_API

from msa_sdk import util

from msa_sdk.order import Order

from datetime import datetime

import time

import json

import typing

import copy

import requests

import ipaddress

import re

import pandas as Pandas

dev_var = Variables()

context = Variables.task_call(dev_var)

# Function: convert a list of records (dicts) into a printable text table
def printTable(myDict):  # (1)
    df = Pandas.DataFrame.from_records(myDict)
    return df.to_string()

| 1 | a function that can be used in a task |

In the task implementation you need to add the following lines to import this common library

import os
import os.path
import sys
from pathlib import Path
from msa_sdk.variables import Variables
from msa_sdk.msa_api import MSA_API

currentdir = os.path.dirname(os.path.realpath(__file__))  # (1)
parentdir = os.path.dirname(currentdir)
sys.path.append(parentdir)
from common.common import *

dev_var = Variables()
context = Variables.task_call(dev_var)

# 'data' is assumed to hold the records built earlier in the task
if data:
    result = printTable(data)  # (2)
    context['result'] = result

ret = MSA_API.process_content('ENDED', 'DONE', context, True)
print(ret)

| 1 | include the path of common in the modules |

| 2 | call the method defined in the common library |
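The import works because the task adds its parent directory (the workflow folder) to sys.path, which makes the shared folder importable as a package. The sketch below reproduces the technique outside the MSactivator™ in a temporary directory. The folder name common_demo and the function greet are illustrative choices, made to avoid clashing with any module already installed on the machine running the sketch.

```python
import importlib
import os
import sys
import tempfile

# Build a throwaway layout: <workflow>/common_demo/common.py and <workflow>/process_1/
workflow_dir = tempfile.mkdtemp(prefix="my_workflow_")
common_dir = os.path.join(workflow_dir, "common_demo")
task_dir = os.path.join(workflow_dir, "process_1")
os.makedirs(common_dir)
os.makedirs(task_dir)

with open(os.path.join(common_dir, "common.py"), "w") as handle:
    handle.write("def greet(name):\n    return 'Hello ' + name\n")

# A task located in process_1 appends its parent directory (the workflow
# folder) to sys.path, so the shared folder can be imported by name.
parentdir = os.path.dirname(task_dir)
sys.path.append(parentdir)
importlib.invalidate_caches()

shared = importlib.import_module("common_demo.common")
message = shared.greet("NAME")
print(message)
```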

How to extend the SDK

Create a custom library of scripts

You can extend the SDK by adding your own scripts in the MSactivator™. The scripts have to be added in the container msa_dev, under the directory /opt/fmc_repository/Process/PythonReference/custom

In a workflow task, you can use the code below to import your custom scripts

import custom.myfile

or

from custom.myfile import SOME_METHOD

| You can create a git repository under /opt/fmc_repository/Process/PythonReference/custom with git init and set a remote to a remote repository to ease the management and versioning of your custom SDK library. |

Install additional Python modules

To install an additional Python package you need to log into the msa_dev container and execute

python3 -m pip install \

--install-option="--install-lib=/opt/fmc_repository/Process/PythonReference" PACKAGE (1)

| 1 | PACKAGE is the name of the Python package to install |

To list the packages that are installed

python3 -m pip list

Miscellaneous

Output messages to the process execution UI

When a task runs, it is often useful to provide real-time message updates in the UI.

The code sample below shows how to do it.

from msa_sdk.orchestration import Orchestration
from msa_sdk.variables import Variables
from msa_sdk.msa_api import MSA_API
import time

# Load the workflow instance context
context = Variables.task_call()

# Use a distinct name so that the Orchestration class is not shadowed
orch = Orchestration(context['UBIQUBEID'])
async_update_list = (context['PROCESSINSTANCEID'],
                     context['TASKID'],
                     context['EXECNUMBER'])  # (1)

orch.update_asynchronous_task_details(*async_update_list,
                                      'going to sleep')  # (2)
time.sleep(2)  # (3)
orch.update_asynchronous_task_details(*async_update_list,
                                      'wake up')  # (4)

| 1 | creates a tuple with the information about the current process and task |

| 2 | update the UI with a message |

| 3 | execute some code |

| 4 | update the UI with another message |

Write debug messages to the process log file

To write debugging messages to the process execution log file (msa-api container, under /opt/wildfly/logs), you can use the function log_to_process_file.

from msa_sdk.variables import Variables
from msa_sdk import util

dev_var = Variables()
context = Variables.task_call(dev_var)

process_id = context['SERVICEINSTANCEID']  # (1)
util.log_to_process_file(process_id, 'a debug message')

| 1 | read the current process ID from the context |

PHP SDK

The MSactivator™ provides a PHP SDK dedicated to developing automation workflows.

Overview

This SDK provides a set of functions that can be used to call the MSactivator™ REST API and automate actions on the MSactivator™, such as creating and activating managed entities, calling microservice functions, or calling processes from other workflows.

Where to find the source code of the SDK?

The SDK functions are implemented in a set of PHP files stored in the MSactivator™ container msa_dev under /opt/fmc_repository/Process/Reference/Common/Library

The PHP files are organised by API topic and contain the functions that call the REST API.

These functions can be called directly when implementing the tasks of the workflow processes.

Code samples

Sample implementation of a SDK PHP function

The source code below shows the implementation of one of the functions provided by the SDK.

/**

* Create Subtenant (1)

* curl -u ncroot:ubiqube -H "Content-Type: application/json" \

* -XPOST 'http://ip_address/ubi-api-rest/customer/{prefix}?name={name}&reference={reference}' -d '

{

"name": "contactName",

"firstName": "contactFirstName",

"address": {

"streetName1": "sn1",

"streetName2": "sn2",

"streetName3": "sn3",

"city": "city123",

"zipCode": "zip123",

"country": "Country098",

"fax": "1233",

"email": "contact @ company.com",

"phone": "123"

}

}

*/

function _customer_create ($operator_prefix,

$customer_name,

$external_reference = "",

$contact_details = "{}") { (2)

$msa_rest_api = "customer/{$operator_prefix}?name={$customer_name}&reference={$external_reference}";

$curl_cmd = create_msa_operation_request(OP_POST, $msa_rest_api, $contact_details); (3)

$response = perform_curl_operation($curl_cmd, "CREATE CUSTOMER"); (4)

$response = json_decode($response, true);

if ($response['wo_status'] !== ENDED) {

$response = json_encode($response);

return $response;

}

$response = prepare_json_response(ENDED, ENDED_SUCCESSFULLY, $response['wo_newparams']['response_body']); (5)

return $response;

}

| 1 | A description of the function and a sample call of the matching REST API |

| 2 | The function of the SDK always starts with _ |

| 3 | Call a SDK helper function to build the curl request |

| 4 | Call a SDK helper function to execute the curl request |

| 5 | Call a SDK helper function (defined in utility.php) to format a response with proper status, comment and response payload |

Sample call of a SDK function in a workflow task.

// Create subtenant

logToFile("Creating subtenant:\n");

$customer_contact_details_array = array(); (1)

$customer_contact_details_array['firstName'] = $customer_contact_first_name;

$customer_contact_details_array['name'] = $customer_contact_name;

if (isset($context['email_recipient']) && $context['email_recipient']){

$address = array('email' => $context['email_recipient']);

$customer_contact_details_array['address'] = $address;

}

$customer_contact_details_json = json_encode($customer_contact_details_array); (2)

// Call function to create customer

$response = _customer_create ($operator_prefix, $customer_name, $customer_ext_reference,

$customer_contact_details_json); (3)

$response = json_decode($response, true); (4)

if ($response['wo_status'] !== ENDED) { (5)

$response = json_encode($response);

echo $response;

exit;

}

logToFile(debug_dump($response['wo_newparams'], "RESPONSE\n")); (6)

| 1 | Build the array with the customer contact details. This parameter defaults to an empty array |

| 2 | Encode the array into its JSON representation |

| 3 | Call the SDK function |

| 4 | Get the JSON response as an array |

| 5 | If the call to the function failed, echo the response and exit the task |

| 6 | If the call was successful continue the task execution |
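The same check-then-continue pattern applies whenever a response carries a status field. The sketch below expresses it in Python for illustration only. The field names wo_status and wo_newparams come from the samples above; the status string "ENDED" is an assumption based on the value passed to process_content in the Python samples, and unwrap_response is a hypothetical helper name.

```python
import json

# Assumption: the success status is the string "ENDED"
ENDED = "ENDED"


def unwrap_response(raw_response):
    """Return (ok, payload) for a JSON response carrying 'wo_status'.

    Hypothetical helper: on success the payload is 'wo_newparams',
    otherwise the whole decoded response is returned for reporting.
    """
    response = json.loads(raw_response)
    if response.get("wo_status") != ENDED:
        return False, response
    return True, response.get("wo_newparams", {})


sample = json.dumps({"wo_status": "ENDED", "wo_newparams": {"id": 42}})
ok, payload = unwrap_response(sample)
print(ok, payload)
```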

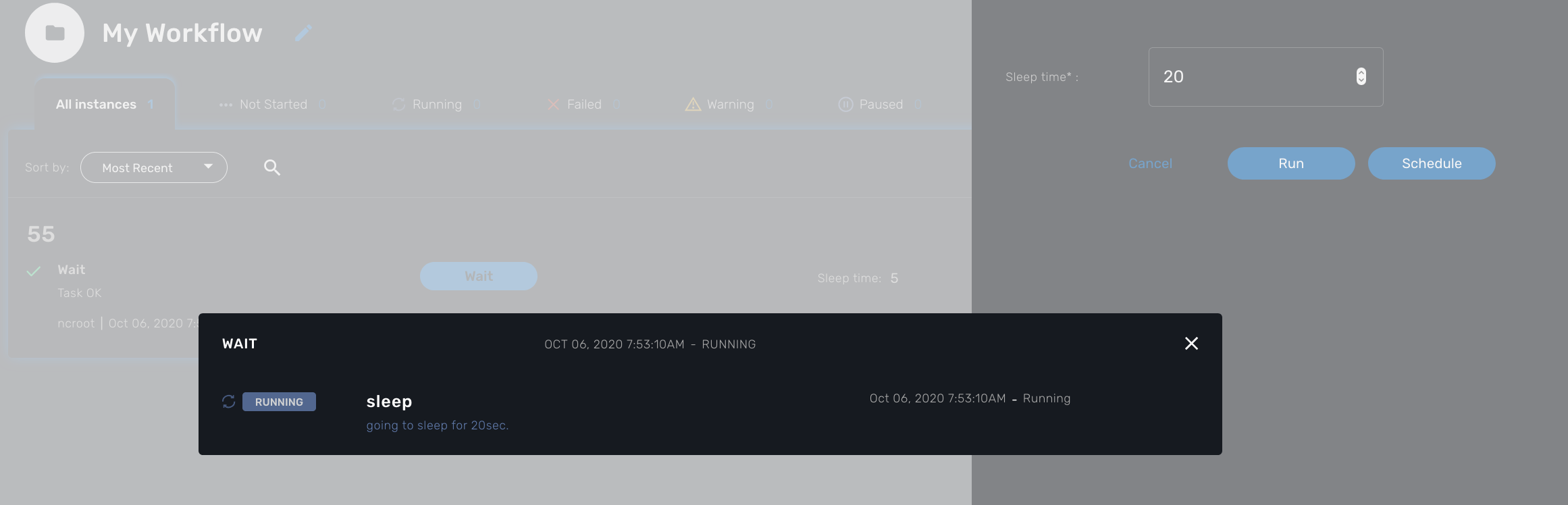

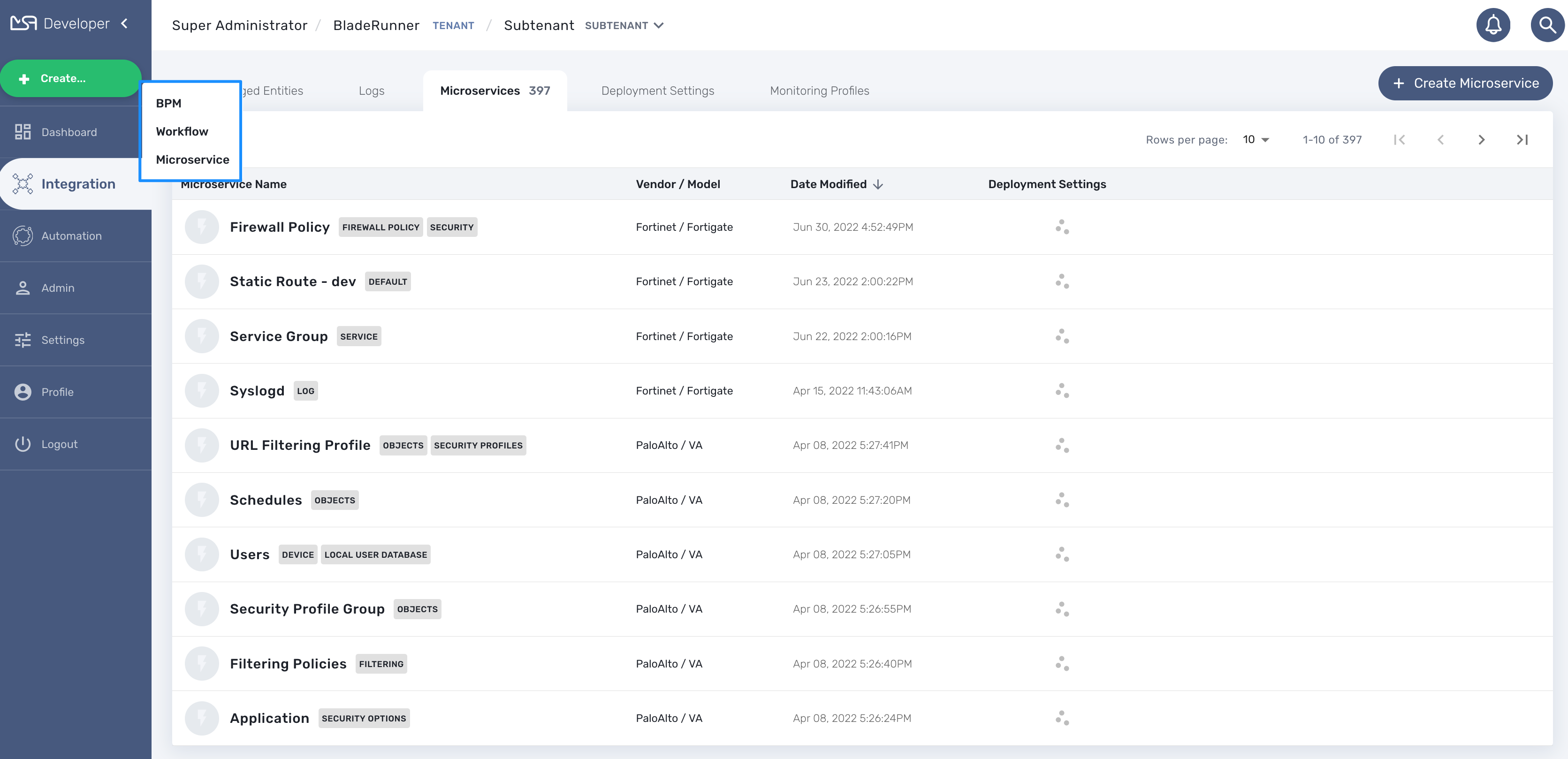

Output messages to the process execution UI

When a task runs, it is often useful to provide real-time message updates in the UI.

The code sample below shows how to do it.

$PROCESSINSTANCEID = $context['PROCESSINSTANCEID'];

$EXECNUMBER = $context['EXECNUMBER'];

$TASKID = $context['TASKID'];

$process_params = array('PROCESSINSTANCEID' => $PROCESSINSTANCEID, (1)

'EXECNUMBER' => $EXECNUMBER,

'TASKID' => $TASKID);

update_asynchronous_task_details($process_params,

"going to sleep for ".$context['sleep']. "sec."); (2)

sleep($context['sleep']); (3)

update_asynchronous_task_details($process_params, "wakeup"); (4)

| 1 | creates an array with the information about the current process and task |

| 2 | update the UI with a message |

| 3 | execute some code |

| 4 | update the UI with another message |

Microservice functions

Call a microservice CREATE/UPDATE/DELETE function

$micro_service_vars_array = array (); (1)

$micro_service_vars_array ['object_id'] = $context ['id']; (2)

$micro_service_vars_array ['src_ip'] = $context ['src_ip'];

$micro_service_vars_array ['src_mask'] = $context ['src_mask'];

$micro_service_vars_array ['dst_ip'] = $context ['dst_ip'];

$micro_service_vars_array ['dst_mask'] = $context ['dst_mask'];

$micro_service_vars_array ['service'] = $context ['service'];

$micro_service_vars_array ['action'] = $context ['action'];

$object_id = $context ['id'];

$simple_firewall = array (

'simple_firewall' => array ( (3)

$object_id => $micro_service_vars_array

)

);

$response = execute_command_and_verify_response ( $managed_entity_id, CMD_CREATE, $simple_firewall, "CREATE simple_firewall" ); (4)

| 1 | Build the Microservice JSON params for the CREATE operation of the microservice. |

| 2 | Assign the values passed to the workflow process to the array of parameters of the Microservice. |

| 3 | The value of the key should match the Microservice file name (stripped of the .xml file extension) |

| 4 | Call the CREATE for simple_firewall MS for each device (use CMD_UPDATE or CMD_DELETE for the other operations) |

The function execute_command_and_verify_response is defined in msa_common.php

Synchronize the managed entity configuration

The code sample below uses a PHP function from the SDK to trigger the synchronization by calling the IMPORT function of a microservice.

$response = synchronize_objects_and_verify_response($managed_entity_id); (1)

| 1 | The variable $managed_entity_id is the database ID of the managed entity |

Useful functions

Here is a list of some of the most commonly used functions.

Managed entities

function _device_create ($customer_id, $device_name, $manufacturer_id,

$model_id, $login, $password, $password_admin,

$management_address, $device_external_reference = "",

$log_enabled = "true", $log_more_enabled = "true",

$mail_alerting = "true", $reporting = "false", $snmp_community = SNMP_COMMUNITY_DEFAULT, $managementInterface = "")

location: device_rest.php

| if you need to set the hostname or update the credentials you can use some dedicated functions from device_rest.php |

function _device_do_initial_provisioning_by_id ($device_id)

location: device_rest.php

function _device_delete ($device_id)

location: device_rest.php

Tenant and Subtenant

function _operator_create ($operator_prefix, $name)

location: operator_rest.php

function _customer_create ($operator_prefix, $customer_name, $external_reference = "", $contact_details = "{}")

location: customer_rest.php

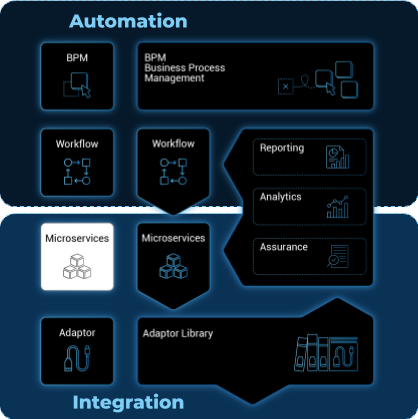

Microservice Editor

The microservice editor is a web based UI tool for designing, developing, testing and releasing microservices.

Microservice editor

Overview

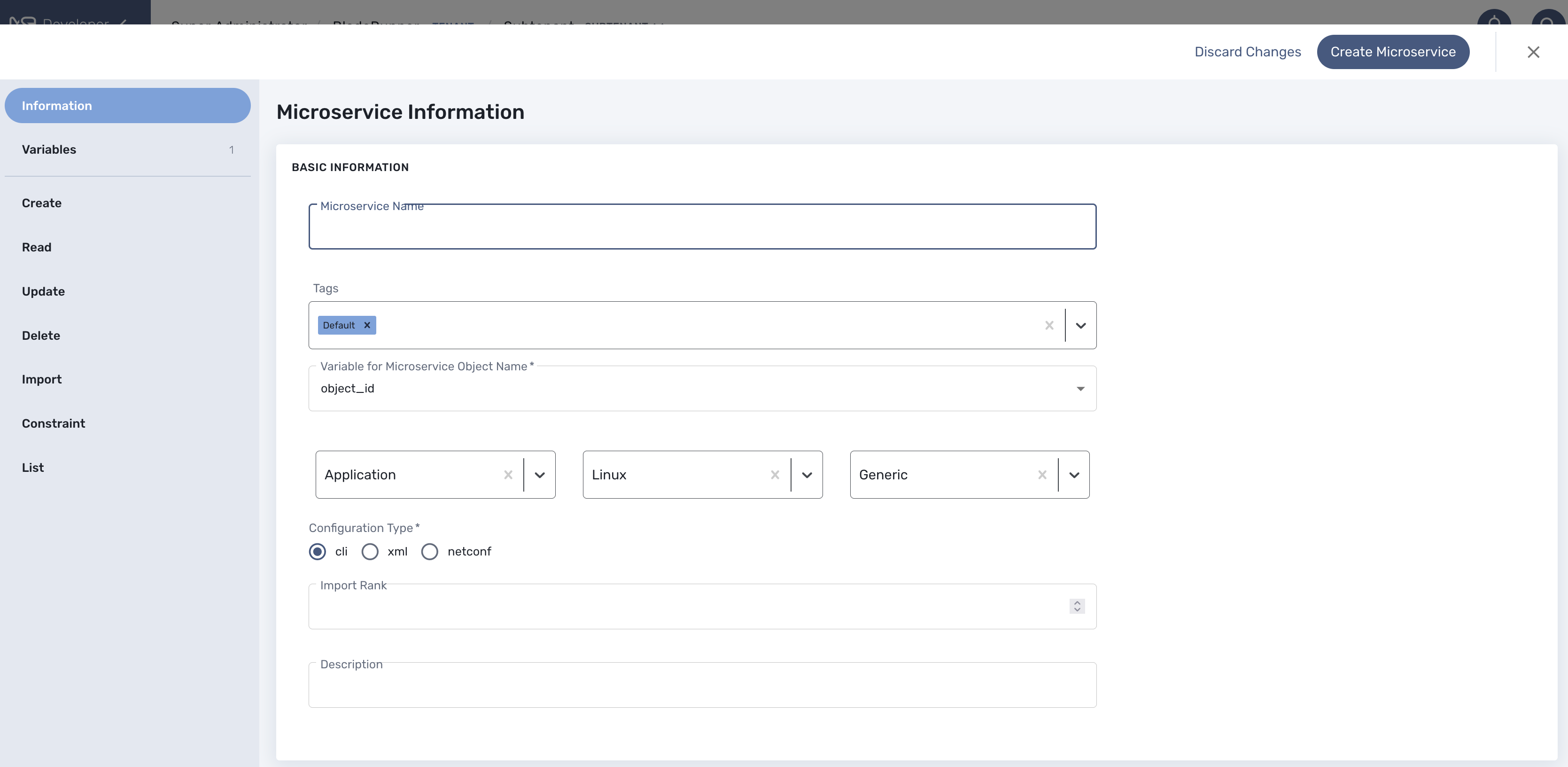

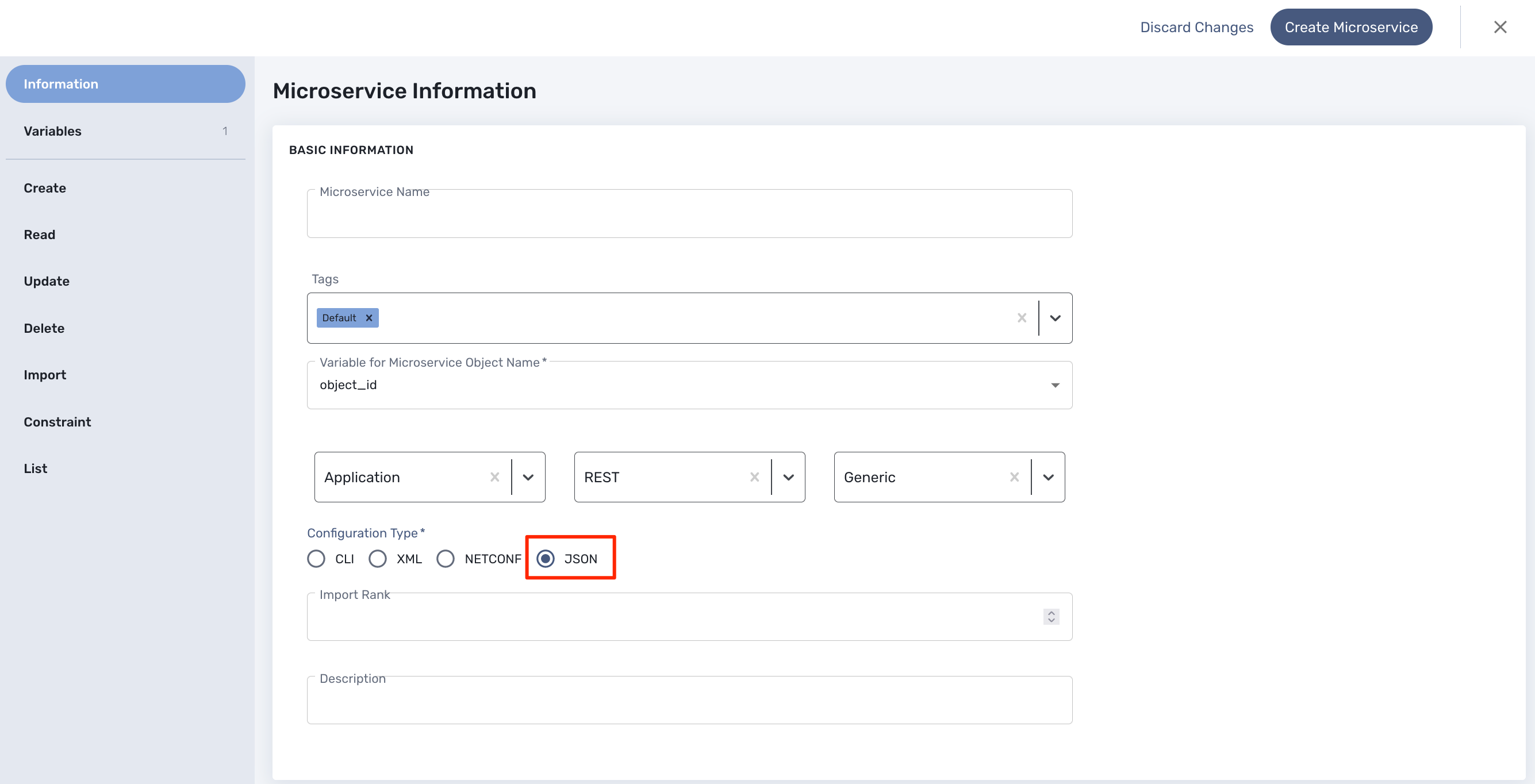

From the developer portal, click on "Create Microservice" to create the microservice.

The microservice console is composed of a vertical menu on the left and a main screen

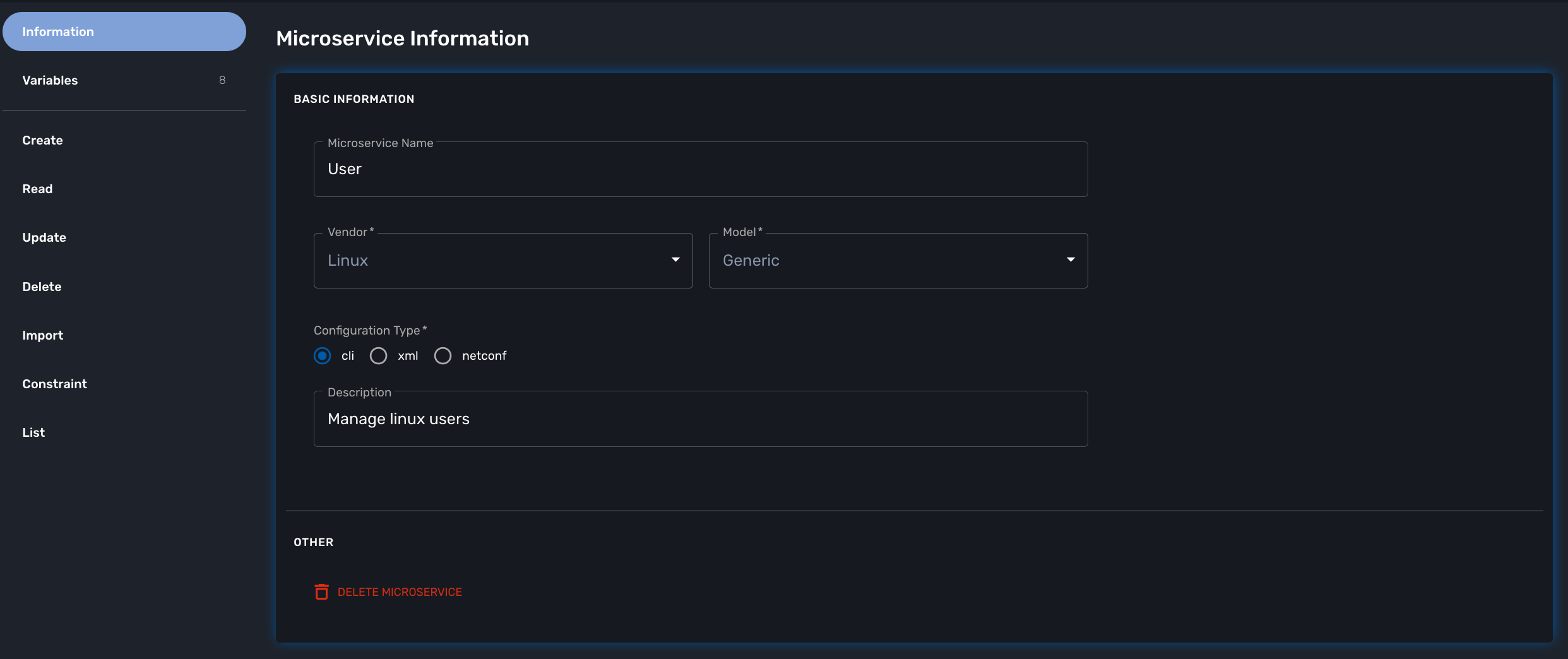

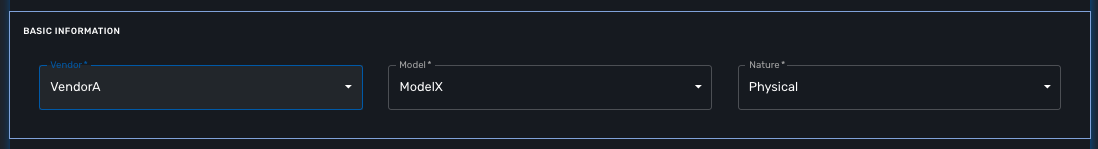

Information

Microservices are vendor specific: the implementation of the functions to create, update, delete or import a managed entity configuration depends on the managed entity, the type of remote management interface, and the format and structure of the configuration.

Vendor and model

Select the correct vendor/model information. This will be used by the deployment settings to filter the microservices that are eligible for selection.

Configuration Type

It is also important to select the proper configuration type:

-

cli: for managed entities such as Linux, Cisco IOS, Fortigate,…

-

xml: for managed entities with a REST management API (both XML and JSON API are supported)

-

netconf: for managed entities that support Netconf as the management API

The editor UI will adjust based on the configuration type and this setting cannot be changed once the microservice is created.

Import rank

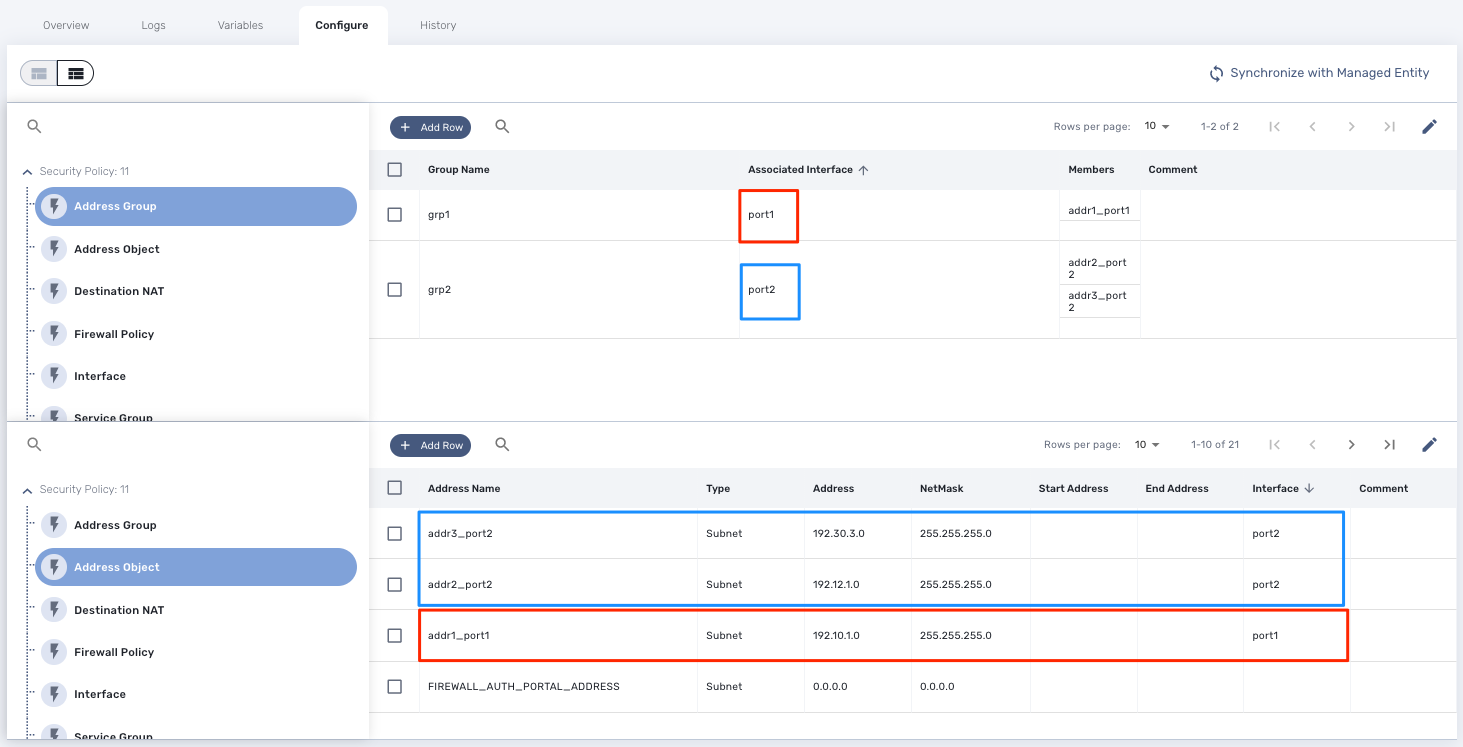

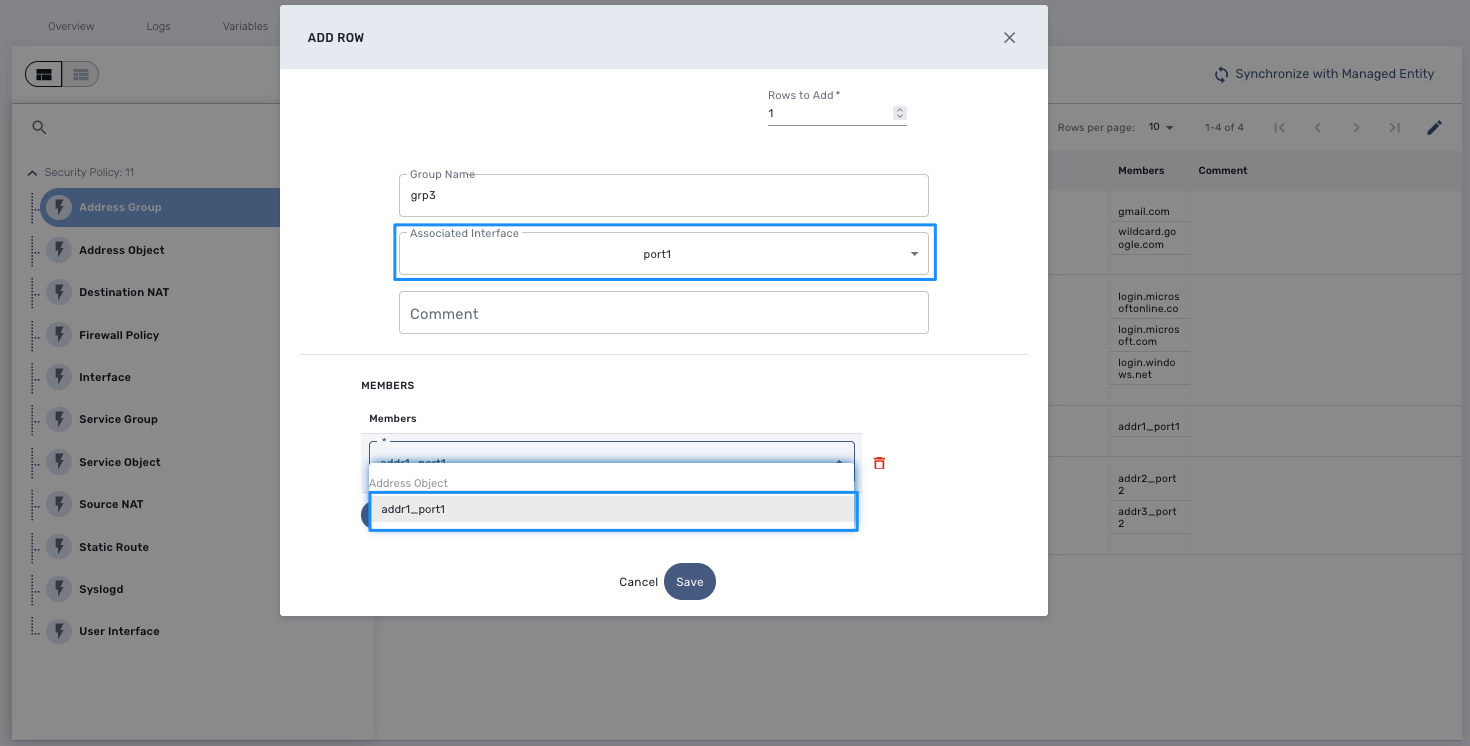

The import rank controls the order in which the import functions of your microservices are executed when you click on "Synchronize with Managed Entity" from the managed entity tab "configure".

Setting the correct import rank is important when you have dependencies between your microservices. Microservice dependencies are created by leveraging the variable type "Microservice Reference" (see documentation about microservice variables).
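As a rough illustration of the ordering, the sketch below sorts a few microservices by import rank. It assumes that lower ranks are imported first, which is how the Web_Filter/URL_Filter post-import example in this documentation describes it. The rank values and the Address.xml entry are hypothetical, and how the MSactivator™ orders microservices with equal ranks is not specified here.

```python
# Hypothetical microservice descriptors: (file name, import rank)
microservices = [
    ("Web_Filter.xml", 20),
    ("URL_Filter.xml", 10),
    ("Address.xml", 10),
]

# Lower rank first; sorted() is stable, so equal ranks keep their listed order.
import_order = [name for name, rank in sorted(microservices, key=lambda ms: ms[1])]
print(import_order)
```

With these values, URL_Filter.xml comes before Web_Filter.xml, so the instances that Web_Filter depends on are already available when it is processed.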

Once created, you can search for your microservice in the list and attach it to a deployment setting. You can also edit it or delete it.

| The easiest way to design a microservice is to use a managed entity dedicated to testing and follow a code-test-fix development cycle. This documentation uses the Linux Managed Entity provided by the mini lab. |

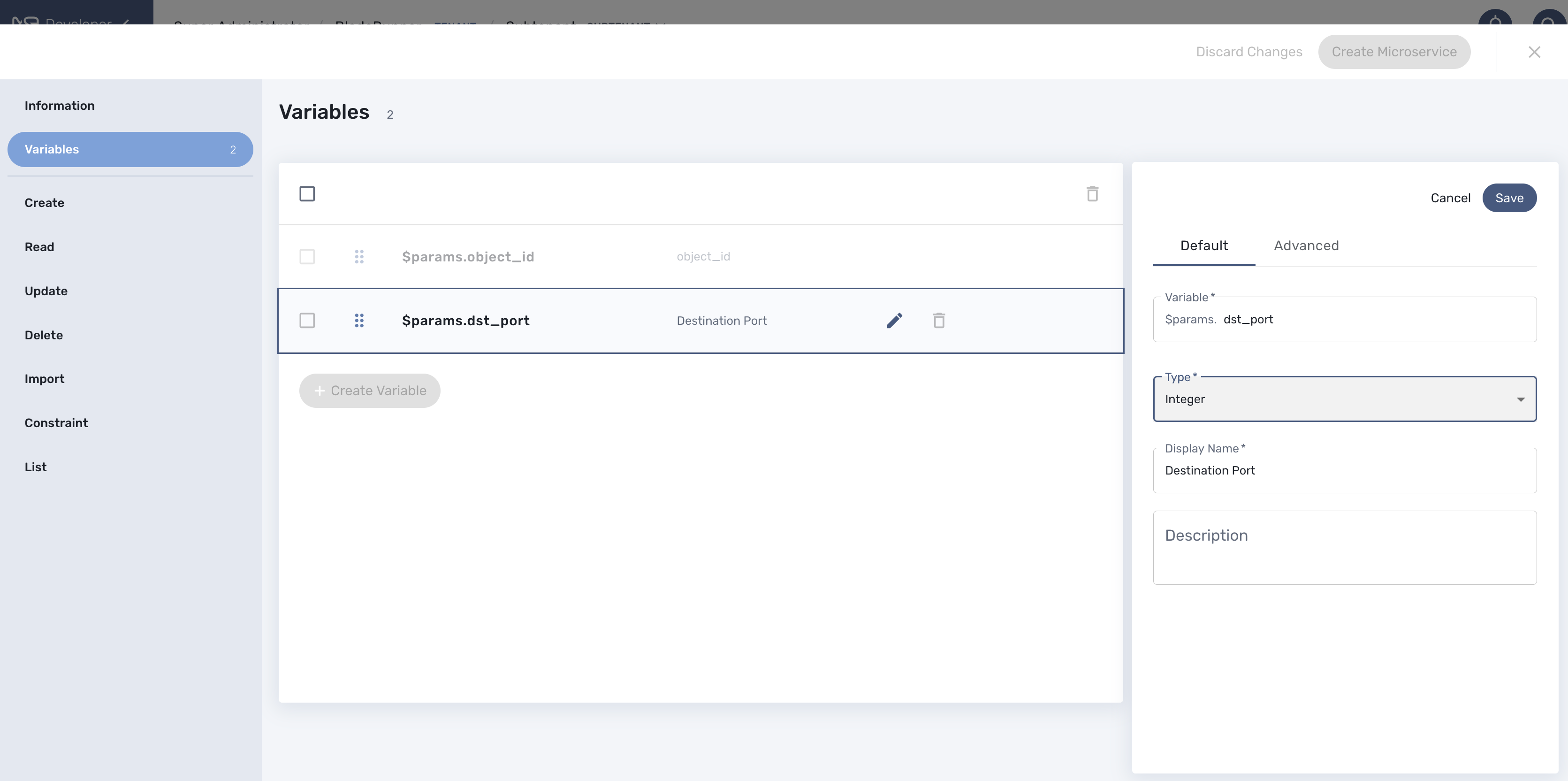

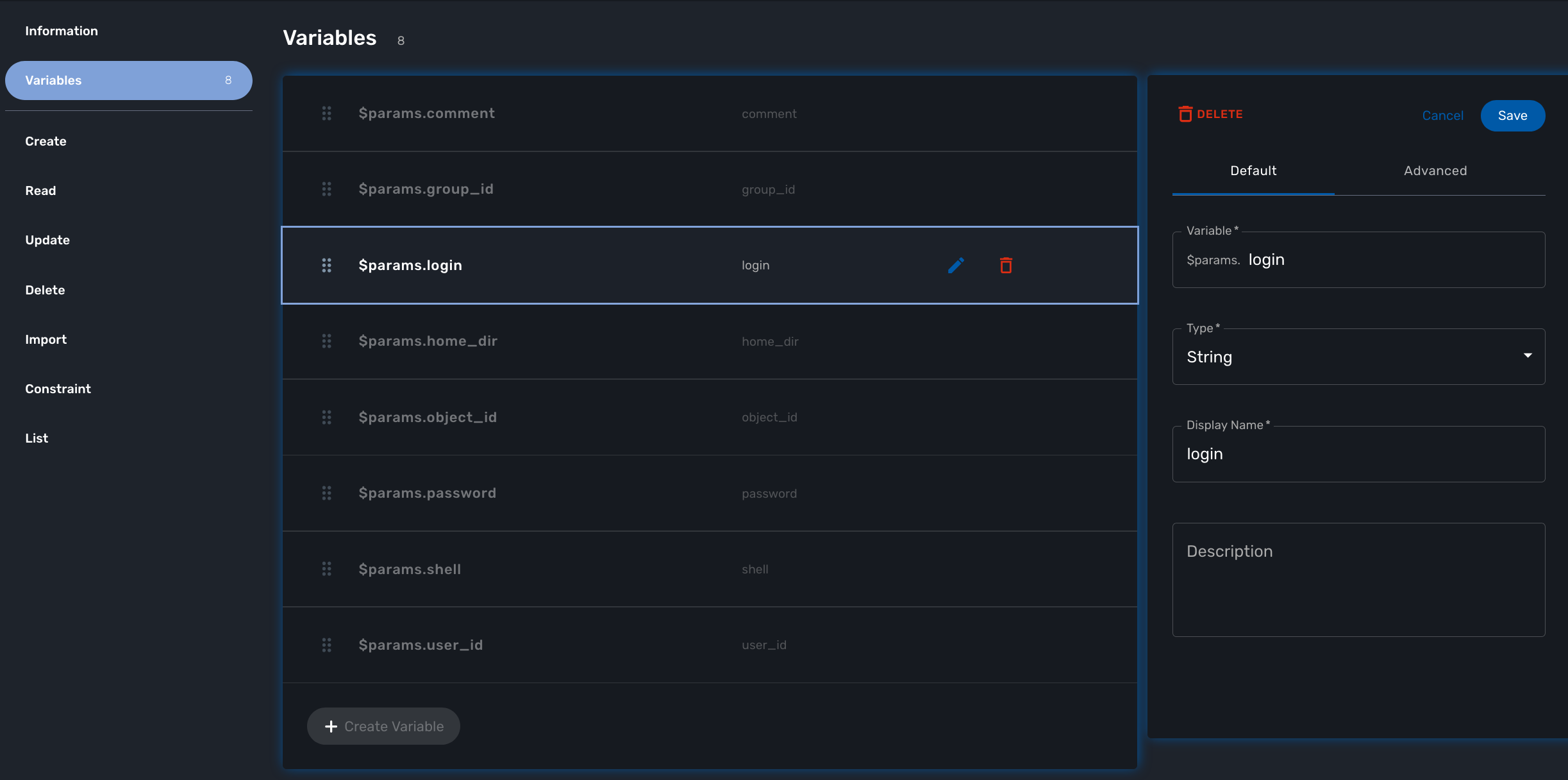

Variables

Variables are usually defined to hold the parameters to be passed to the microservice, for instance the port and IP address to block for a firewall policy. Variables can also be used to display user information that is not meant to be used for configuring the managed entity.

Setting variables is done from the section "Variables" on the microservice editor screen.

When you create a new microservice, the mandatory variable object_id is created automatically and cannot be deleted. It can be edited to set its type and other advanced properties.

All variables are referenced with the prefix $params, which is set automatically in the variable editor screen. When a variable has to be referenced in one of the microservice functions, use the syntax {$params.your_variable} (see below for more examples).

By default the type of a variable is String but other types are supported such as Integer, Boolean, Password, IpAddress, ObjectRef,…

Usage of the {$object_id} variable

The {$object_id} is a reserved variable used to reference objects in the database. It is also available as a variable name in Smarty during template resolution.

For example, when the following parameters are passed to the engine:

{"interface":{"Interface-Service-engine0/0":{"ip_address":"1.2.3.4"}}}

The variable values are:

{$object_id} => "Interface-Service-engine0/0"

{$params.ip_address} => "1.2.3.4"
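The mapping can be checked by walking the JSON structure: the first level is the microservice name, the second level key is the object ID, and the innermost dictionary holds the variables. The Python sketch below only illustrates this layout; it is not how the engine itself resolves templates.

```python
import json

raw = '{"interface":{"Interface-Service-engine0/0":{"ip_address":"1.2.3.4"}}}'
parameters = json.loads(raw)

resolved = []
for microservice, instances in parameters.items():
    for object_id, params in instances.items():
        # object_id is the instance key; params holds the variables
        resolved.append((microservice, object_id, params))
        print(microservice, object_id, params["ip_address"])
```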

Functions

The left menu of the microservice editor lists the functions to implement. While none of the functions is mandatory, at least one of 'Create', 'Update', 'Delete' or 'Import' has to be implemented in order to have a microservice that can actually do something.

In order to provide full lifecycle management of a service on a managed entity, the 4 functions above have to be implemented.

The functions 'Read' and 'List' are optional; while it’s possible to implement them, you’ll have to rely on the REST API to execute them.

The function 'Constraint' allows the implementation of custom constraints to be verified before the managed entity is actually configured.

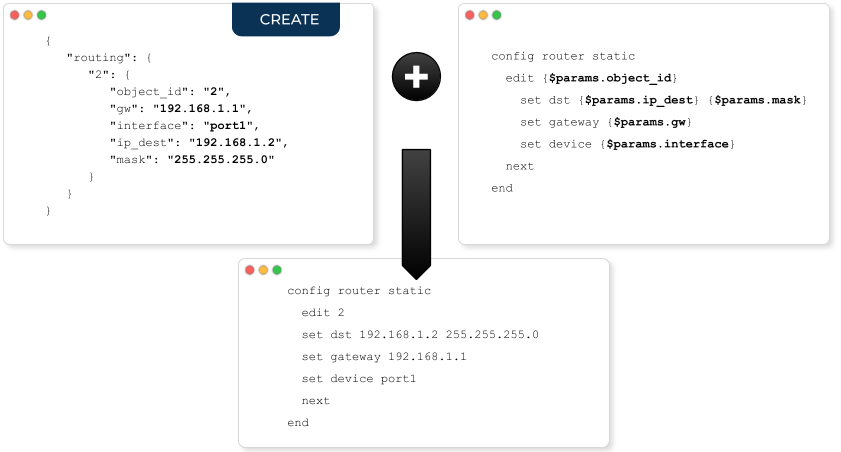

Create

The Create function takes care of configuring a new service in the managed entity. For example, a new static route if the managed entity is a router.

This function is implemented either in the PHP Smarty template language for CLI microservices (see "Microservice Template with PHP Smarty" for more details) or as a REST API call for XML microservices.

When this function is implemented, a button "+ Add Row" will appear in the tab "Configure" of the managed entity that uses this microservice.

Example

This example is a Smarty template that takes the parameters entered in the user form and generates a configuration.

config router static

edit {$params.object_id}

set dst {$params.ip_dest} {$params.mask}

set gateway {$params.gw}

set device {$params.interface}

{if empty($params.comment)}

unset comment

{else}

set comment "{$params.comment}"

{/if}

next

end

Update

The Update function takes care of updating an existing service in the managed entity.

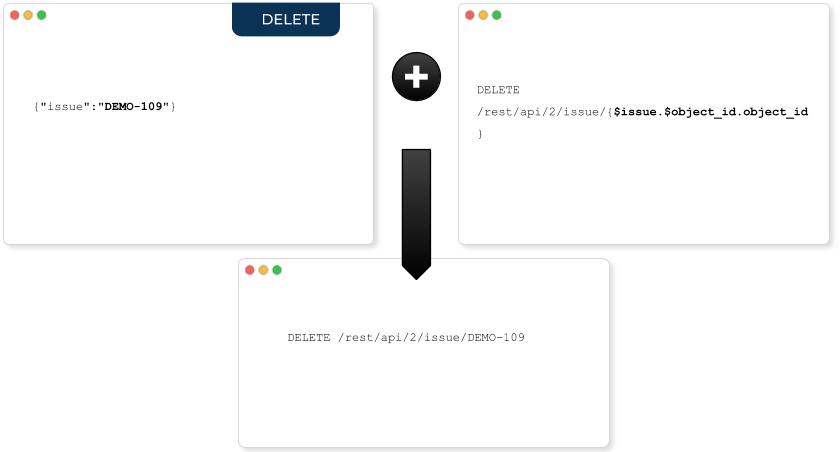

Delete

The Delete function takes care of deleting an existing service in the managed entity.

Example

This example generates a configuration based on the instance parameters as stored in the database.

config router static

delete "{$routing.$object_id.object_id}"

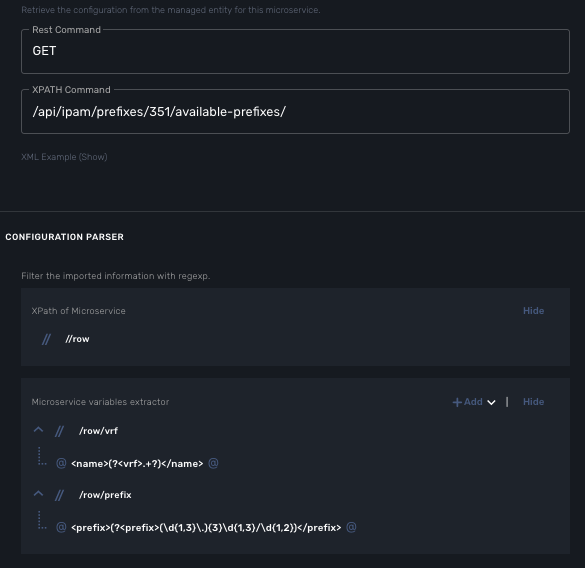

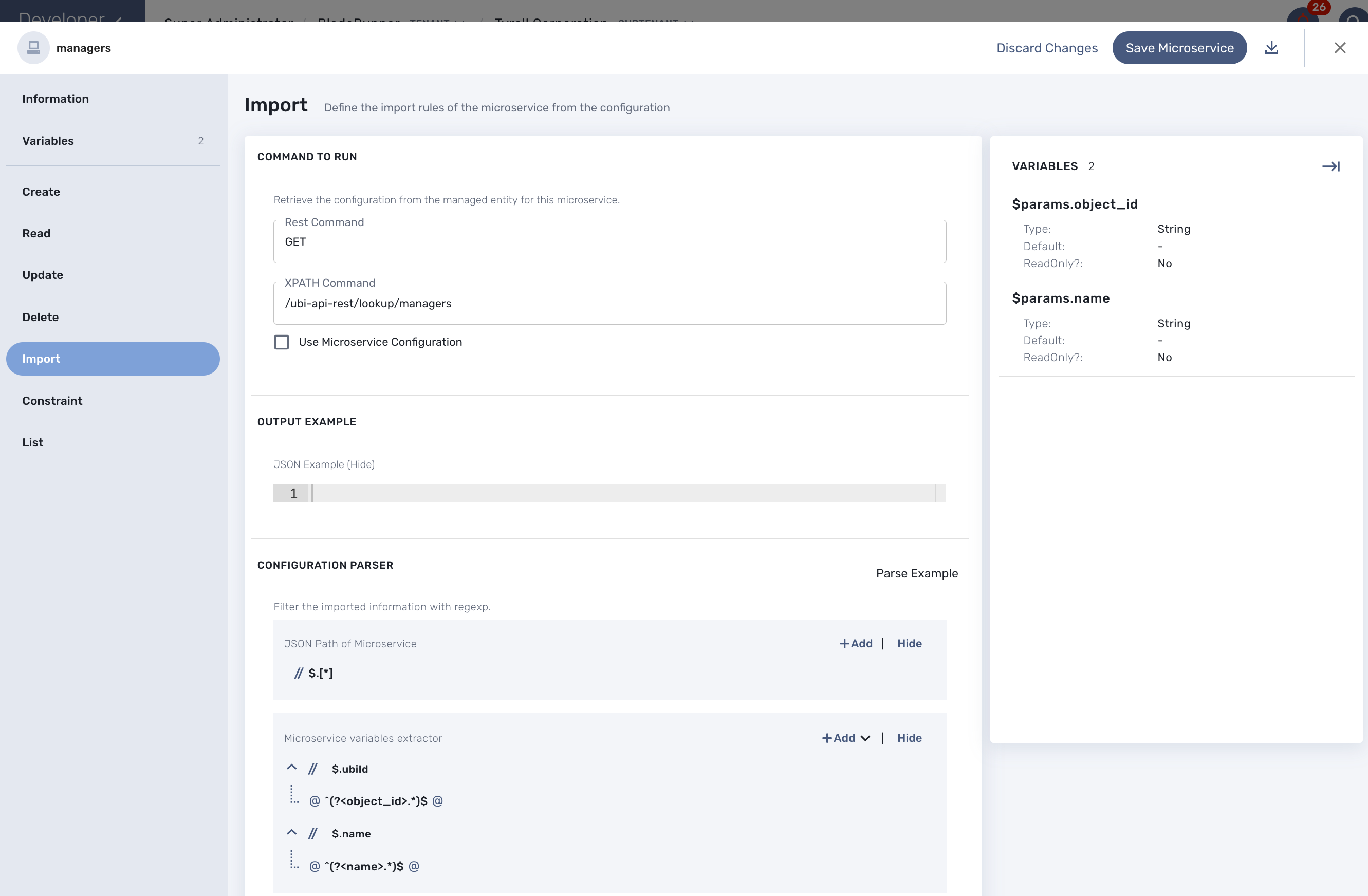

endImport

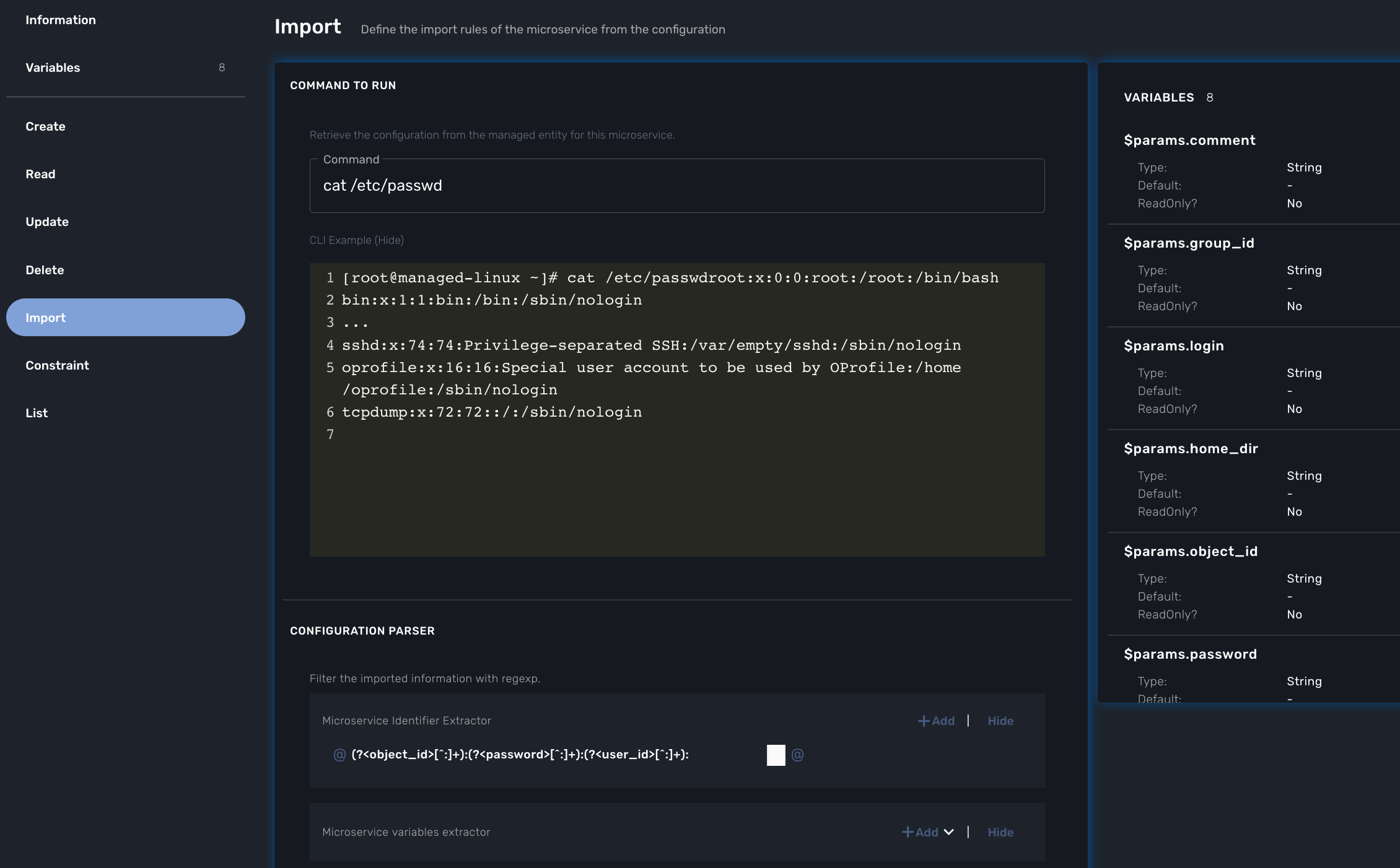

The role of the Import function is to import the actual configuration of the managed entity into the MSactivator™ database.

The implementation of the Import is either based on a set of regular expressions or a set of XPath expressions that build a parser that will extract the values of the variables.

The Import is made of 3 parts:

-

the command to run on the device for CLI command based device or the REST API to call.

-

the configuration parser, implemented with a set of regular expressions or XPath expressions. Only the microservice identifier extractor is mandatory.

-

a set of optional post import operations implemented in Smarty language (https://www.smarty.net/).
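To make the parser part more concrete, the sketch below shows the general idea in Python: a regular expression with one group acting as the identifier extractor and further groups filling the other variables. The sample command output, the pattern and the variable names are hypothetical, and the microservice editor has its own way of declaring these expressions; this only illustrates the technique.

```python
import re

# Hypothetical output of the command run on the device
config = """\
ip route 10.1.0.0 255.255.0.0 192.168.1.254
ip route 10.2.0.0 255.255.0.0 192.168.1.253
"""

# The first named group plays the role of the microservice identifier
# extractor; the other groups fill the remaining variables.
line_pattern = re.compile(
    r"^ip route (?P<object_id>\S+) (?P<mask>\S+) (?P<gateway>\S+)$",
    re.MULTILINE,
)

# One microservice instance per matching line, keyed by its object_id
instances = {
    match.group("object_id"): match.groupdict()
    for match in line_pattern.finditer(config)
}
print(instances)
```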

Post-import

This example shows how to use the post-import section of a microservice to populate one of its variables with data coming from other microservice instances already imported into the MSactivator™ database.

This example is based on a Fortigate configuration, more precisely on the web filtering part of the configuration.

It first relies on the ability to define an import rank in the microservice definition. In our example, the microservice Web_Filter(.xml) needs the instances of the microservice URL_Filter(.xml) to be imported first in order to have relevant information. The Web_Filter microservice therefore has a higher import rank than URL_Filter, so that during the import/synchronize process the URL_Filter instances are imported into the MSactivator™ database first and can be read during the post-import of Web_Filter to enrich its instances with detailed values.

Instead of displaying, or asking the user to enter, meaningless numbers as identifiers of URL filtering rules, the Web_Filter microservice displays the URL filter details gathered during the post-import. To read the instances of another microservice, the syntax is simply based on its definition file name.

In the Web_Filter post-import example below, the foreach loop on the variable $URL_Filter iterates over the URL_Filter instances stored in the MSactivator™ database.

{foreach $URL_Filter as $filter}

{if isset($params.filter_id) && $params.filter_id == $filter.object_id}

{assign_object_variable var="filter_id" value=$filter.object_id}

{assign_object_variable var="filter_name" value=$filter.filter_name}

{if !empty($filter.urls)}

{foreach $filter.urls as $index => $url}

{assign_object_variable var="urlfilter.{$index}.url_id" value=$url.url_id}

{assign_object_variable var="urlfilter.{$index}.url" value=$url.url}

{assign_object_variable var="urlfilter.{$index}.action" value=$url.action}

{assign_object_variable var="urlfilter.{$index}.type" value=$url.type}

{/foreach}

{/if}

{break}

{/if}

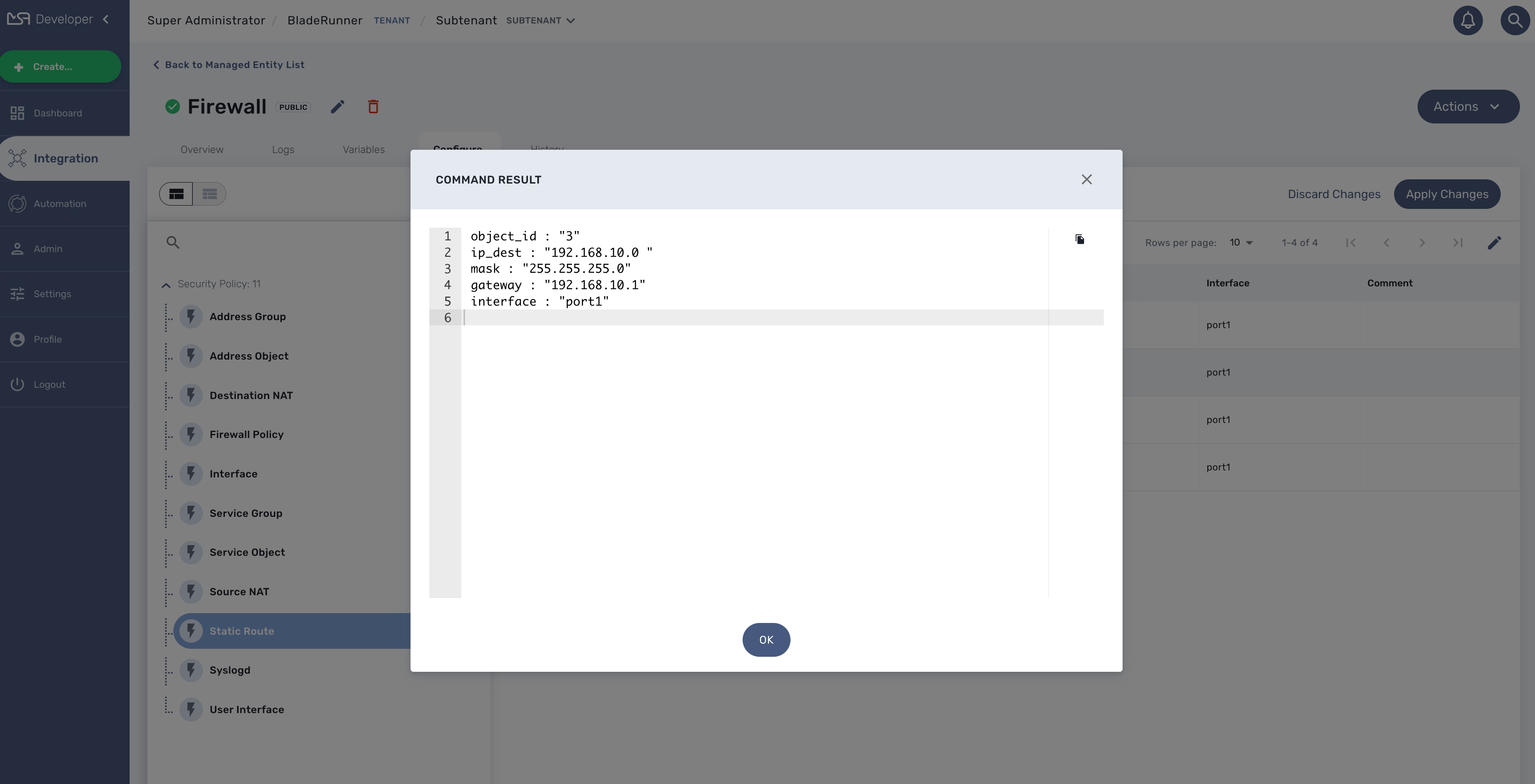

{/foreach}Read

The Read function can be implemented to allow the user or the API to generate a text file based on a Smarty template and the microservice instance variables stored in the database.

Example

This example generates a text based on a template and the microservice instance variable values as stored in the database:

config router static

edit {$routing.$object_id.object_id}

set dst {$routing.$object_id.ip_dest} {$params.mask}

set gateway {$routing.$object_id.gw}

set device {$routing.$object_id.interface}

{if empty($routing.$object_id.comment)}

unset comment

{else}

set comment "{$routing.$object_id.comment}"

{/if}

next

end
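To make the substitution concrete, the following Python sketch builds the same text from plain dictionaries. It only mimics the template above: the function name and the dictionary layout are assumptions, and Python truthiness is used as a rough equivalent of Smarty's empty().

```python
def render_static_route(object_id, routing, params):
    """Build the text produced by the Read template from plain dictionaries."""
    route = routing[object_id]
    lines = [
        "config router static",
        f"edit {route['object_id']}",
        f"set dst {route['ip_dest']} {params['mask']}",
        f"set gateway {route['gw']}",
        f"set device {route['interface']}",
    ]
    # Same branch as the {if empty(...)} test in the template.
    if not route.get("comment"):
        lines.append("unset comment")
    else:
        lines.append(f'set comment "{route["comment"]}"')
    lines += ["next", "end"]
    return "\n".join(lines)
```

Calling it with an instance whose comment is empty yields the `unset comment` line; a non-empty comment yields the quoted `set comment` line instead.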

Another template could be:

object_id : "{$routing.$object_id.object_id}"

ip_dest : "{$routing.$object_id.ip_dest} "

mask : "{$params.mask}"

gateway : "{$routing.$object_id.gw}"

interface : "{$routing.$object_id.interface}"

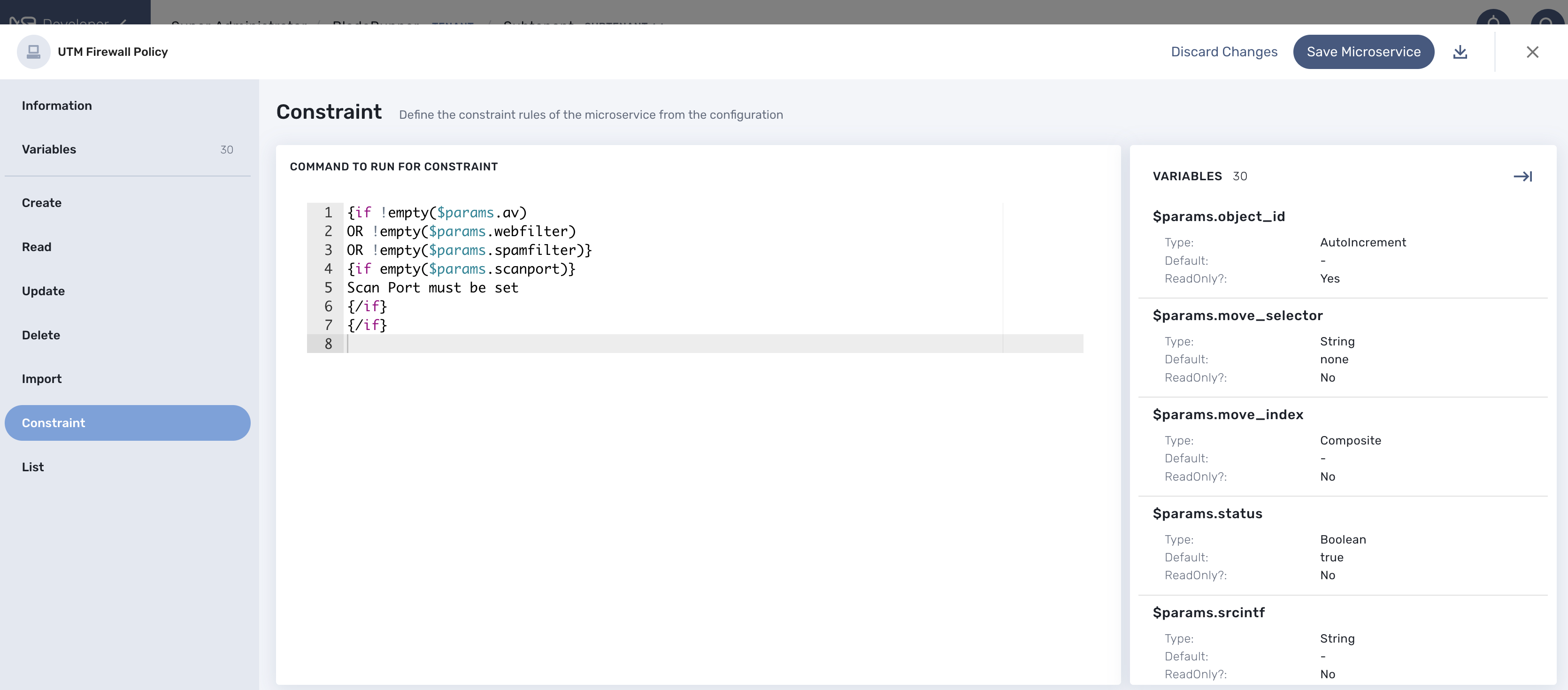

Constraint

In most cases, the constraint definition is straightforward when defining a microservice variable. Based on the type of the variable, the UI checks whether the provided value is consistent with the type. For example, if the type is "IP Address", the value must be formatted as an IP address.
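As an illustration of what such a type-based check amounts to, here is a minimal Python sketch using the standard ipaddress module. It is not the validation code used by the UI; the function name is hypothetical.

```python
import ipaddress


def is_valid_ip(value):
    """Return True when value parses as an IPv4 or IPv6 address."""
    try:
        ipaddress.ip_address(value)
        return True
    except ValueError:
        return False
```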

In some cases, though, the constraint is more complex and may depend on a combination of several conditions that can involve multiple variables.

For example, the 5 variables params.av, params.webfilter, params.spamfilter, params.ips, and params.scanport have the following dependencies:

-

if params.av is set then params.scanport must be set

-

if params.webfilter is set then params.scanport must be set

-

if params.spamfilter is set then params.scanport must be set

-

if params.ips is set then params.scanport is not mandatory

To make things simpler, it is possible to add a custom constraint evaluation to the object definition.

The custom constraint is implemented as a Smarty template:

{if !empty($params.av)

OR !empty($params.webfilter)

OR !empty($params.spamfilter)}

{if empty($params.scanport)}

Scan Port must be set

{/if}

{/if}
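The same rule can be expressed as a small Python function, which may help when reasoning about the combinations. This is a sketch only: the function name is hypothetical, and treating an empty result as "constraint satisfied" is an assumption about how the template output is interpreted.

```python
def check_scanport(params):
    """Return an error message when the constraint is violated, else an empty string."""
    # params.ips is deliberately absent: it does not make scanport mandatory.
    needs_scanport = any(params.get(k) for k in ("av", "webfilter", "spamfilter"))
    if needs_scanport and not params.get("scanport"):
        return "Scan Port must be set"
    return ""
```

For example, `check_scanport({"av": "enable"})` returns the error message, while `check_scanport({"ips": "enable"})` returns an empty string.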

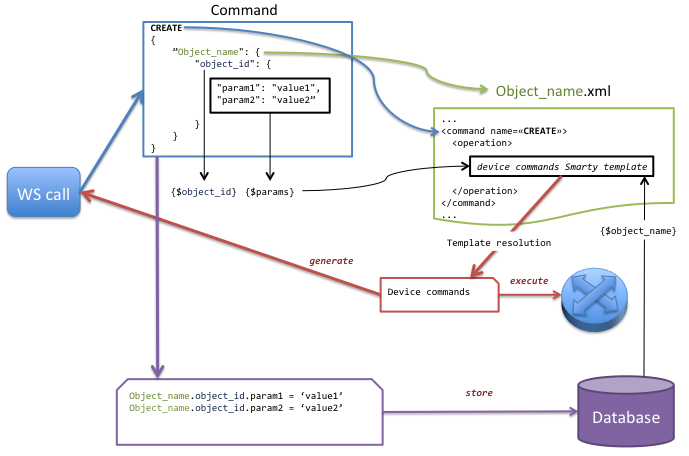

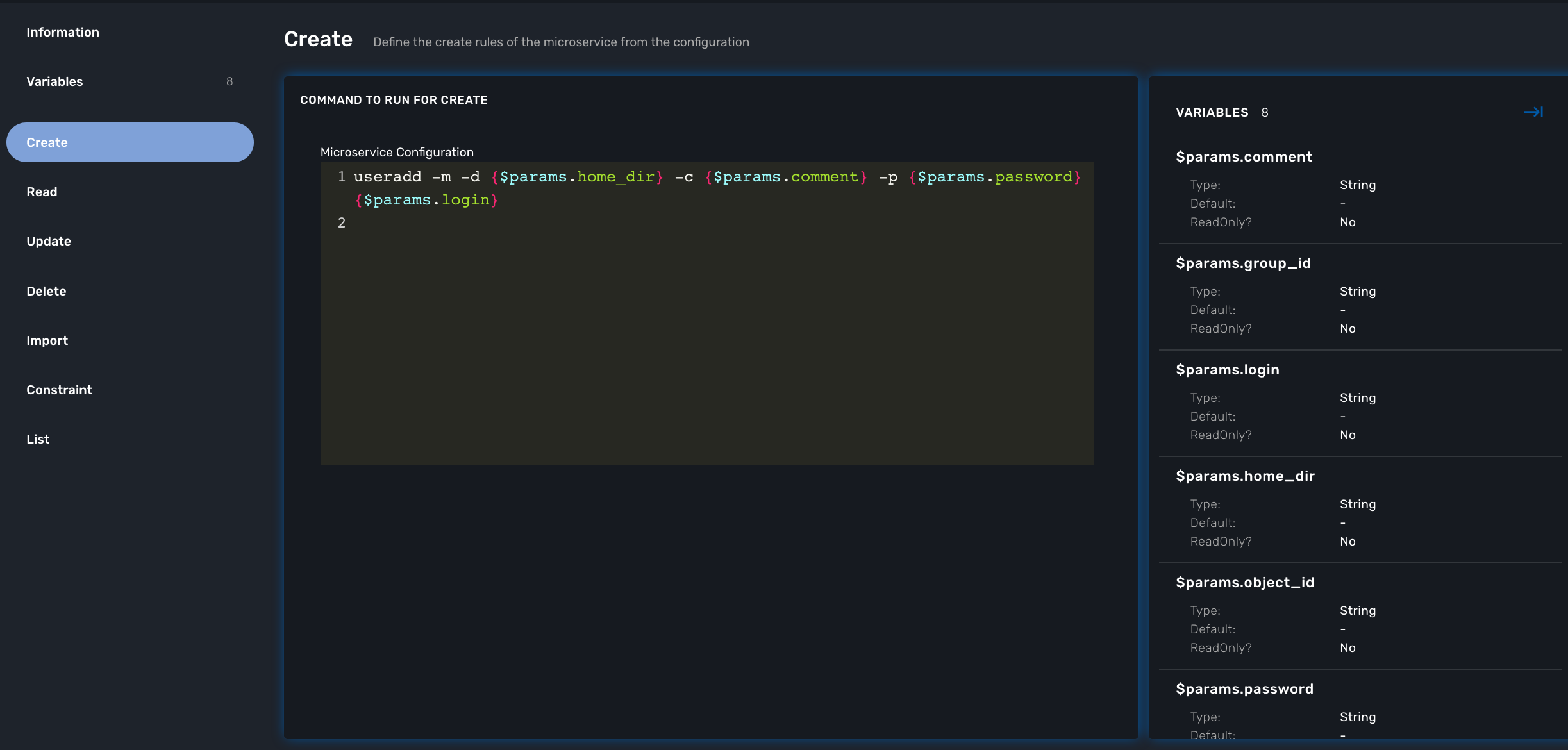

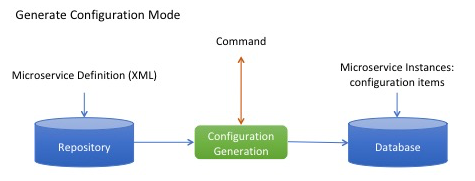

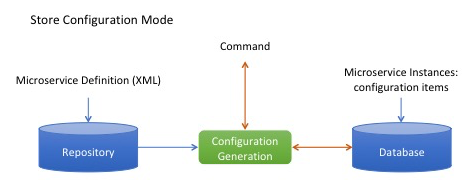

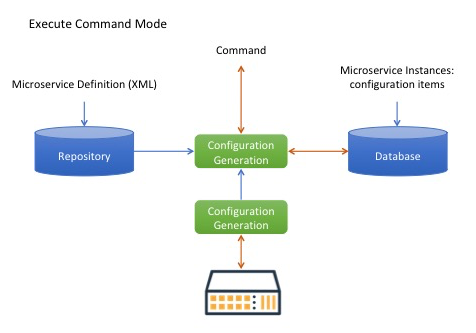

Create, Update or Delete Execution flow

The diagram below shows the flow of execution when one of the Create, Update or Delete functions is executed through the REST API (directly or from the UI):

-

A command (CREATE here) is sent via web service.

-

The microservice name is used to retrieve the XML definition file associated with the managed entity.

-

The command name is used to find the <command> section in the XML definition file.

-

The variables {$object_id} and {$params} are created to parse the template of the XML definition file.

-

Variables from the database are also created to parse the template of the XML definition file.

-

The template generates device commands used to perform the requested command on the object.

-

The device commands generated are returned to the web service call.

-

Optionally the device commands generated are applied to the managed entity.

-

Optionally the object extracted from the web service call is stored in the database.

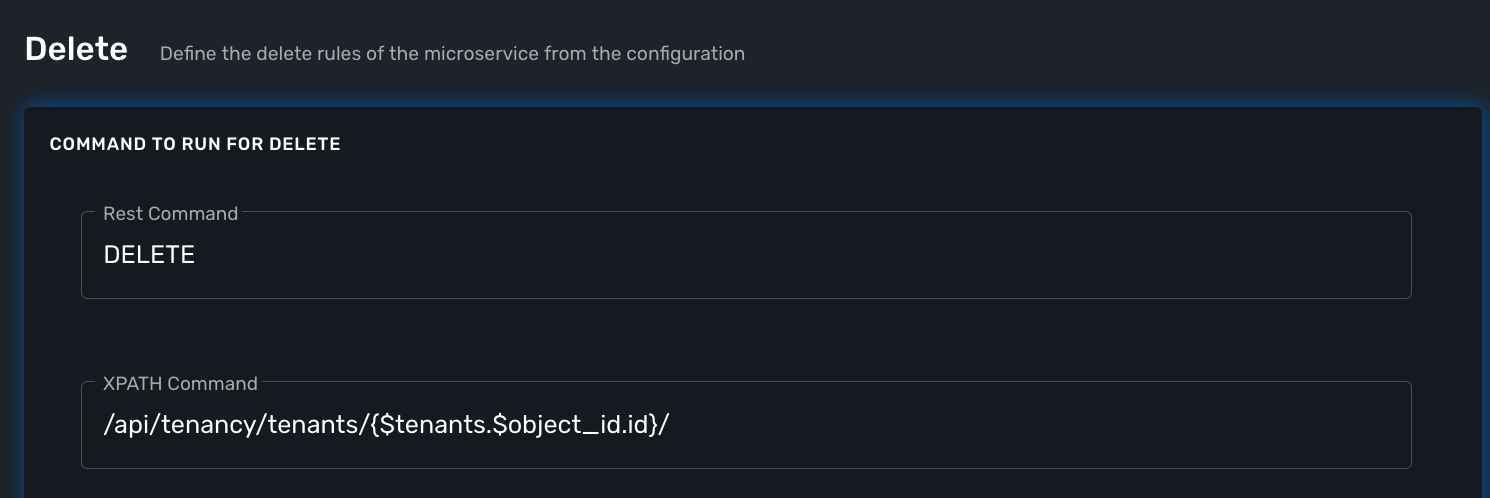
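The steps listed above can be approximated by the following Python sketch. Everything in it is hypothetical: the DEFINITIONS dictionary stands in for the XML definition file, render() is a simplified placeholder substitution rather than the Smarty engine, and the apply and store arguments model the two optional steps.

```python
import re

# Hypothetical stand-in for the parsed XML definition: one template per command.
DEFINITIONS = {
    "simple_firewall": {
        "CREATE": "sudo iptables -A INPUT -p tcp --dport {$params.dst_port} -s {$params.src_ip} -j DROP",
    }
}


def render(template, context):
    """Replace {$a.b.c} placeholders by walking nested dictionaries."""
    def lookup(match):
        value = context
        for key in match.group(1).split("."):
            value = value[key]
        return str(value)
    return re.sub(r"\{\$([\w.]+)\}", lookup, template)


def execute(microservice, command, object_id, params, database, apply=None, store=False):
    # Find the definition by microservice name, then the command section by command name.
    template = DEFINITIONS[microservice][command]
    # Expose object_id, params and the database values to the template.
    context = {"object_id": object_id, "params": params, **database}
    device_commands = render(template, context)
    if apply is not None:
        apply(device_commands)  # optional: push the commands to the managed entity
    if store:
        database.setdefault(microservice, {})[object_id] = dict(params)  # optional
    return device_commands  # returned to the web service caller
```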

XML Microservice Editor

Overview

XML microservices are used to manage entities that expose a REST API returning responses formatted in XML, or with adapters that do not support JSON microservices and JsonPath.

To create an XML microservice, check XML for the configuration type when creating a new microservice.

| do not try to change the configuration type of an existing microservice as this is not supported. |

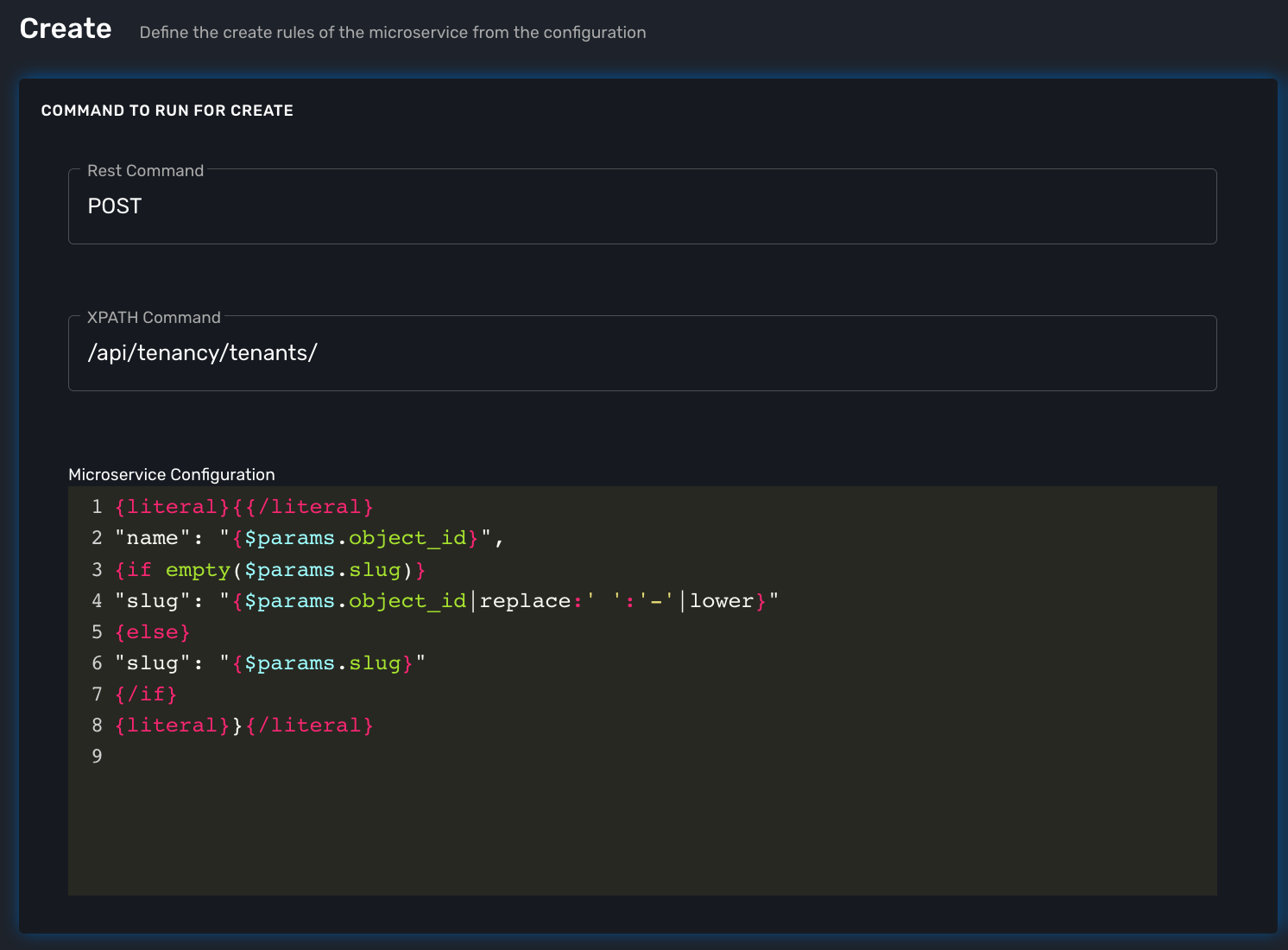

The functions Create, Update and Delete

Create and Update

These functions call the REST API designed to create or update the managed entity configuration.

For instance, the REST API to create a new tenant is

HTTP Request: /operator/{$prefix}

Method: POST

| Parameter Name | Type | Description |
|---|---|---|
| name | String | the name of the tenant |

Example:

POST /api/tenancy/tenants/

In case you need to pass in a JSON payload with the parameters, the microservice Create will look like this.

Import

The main difference between the CLI and REST (JSON/XML) microservice definitions is the implementation of the functions Create/Update/…

CLI Microservice definition is covered in the documentation about the CLI microservice editor.

This documentation uses the Netbox REST adapter and the Netbox REST API to illustrate the design of XML Microservice.

JSON Microservice Editor

Overview

JSON microservices are used to manage entities that expose a REST API that returns a response formatted in JSON.

To create a JSON microservice, you need to check JSON for the configuration type when creating a new microservice.

| do not try to change the configuration type of an existing microservice as this is not supported. |

The functions Create, Update and Delete

Create and Update

These functions call the REST API designed to create or update the managed entity configuration.

For instance, the REST API to create a new tenant is

HTTP Request: /operator/{$prefix}

Method: POST

| Parameter Name | Type | Description |
|---|---|---|
| name | String | the name of the tenant |

Example:

POST /api/tenancy/tenants/

In case you need to pass in a JSON payload with the parameters, the microservice Create will look like this.

Import

The main difference between the CLI and REST (JSON/XML) microservice definitions is the implementation of the functions Create/Update/…

CLI Microservice definition is covered in the documentation about the CLI microservice editor.

This documentation uses the Netbox REST adapter and the Netbox REST API to illustrate the design of a JSON microservice.

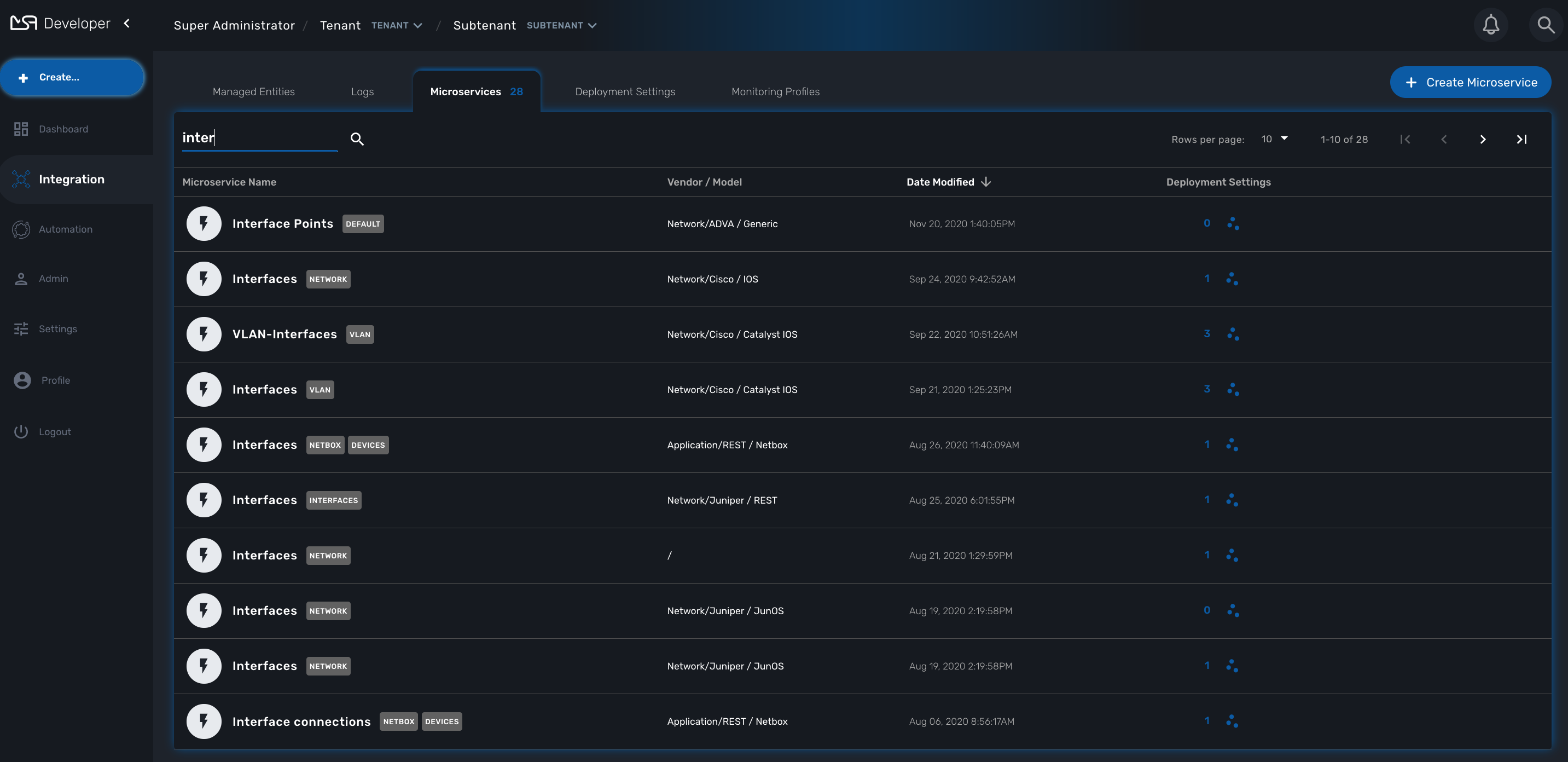

CLI Microservice Editor

You can use the microservice editor UI to create or update a microservice.

To create or edit a microservice, go to the "Integration" section and select the Microservice tab.

CLI microservice implementation

The Microservice API is made of several functions that can be implemented. It is not mandatory to implement all the functions; this depends on your requirements and can be done incrementally.

The functions Create, Update and Delete

Create and Update

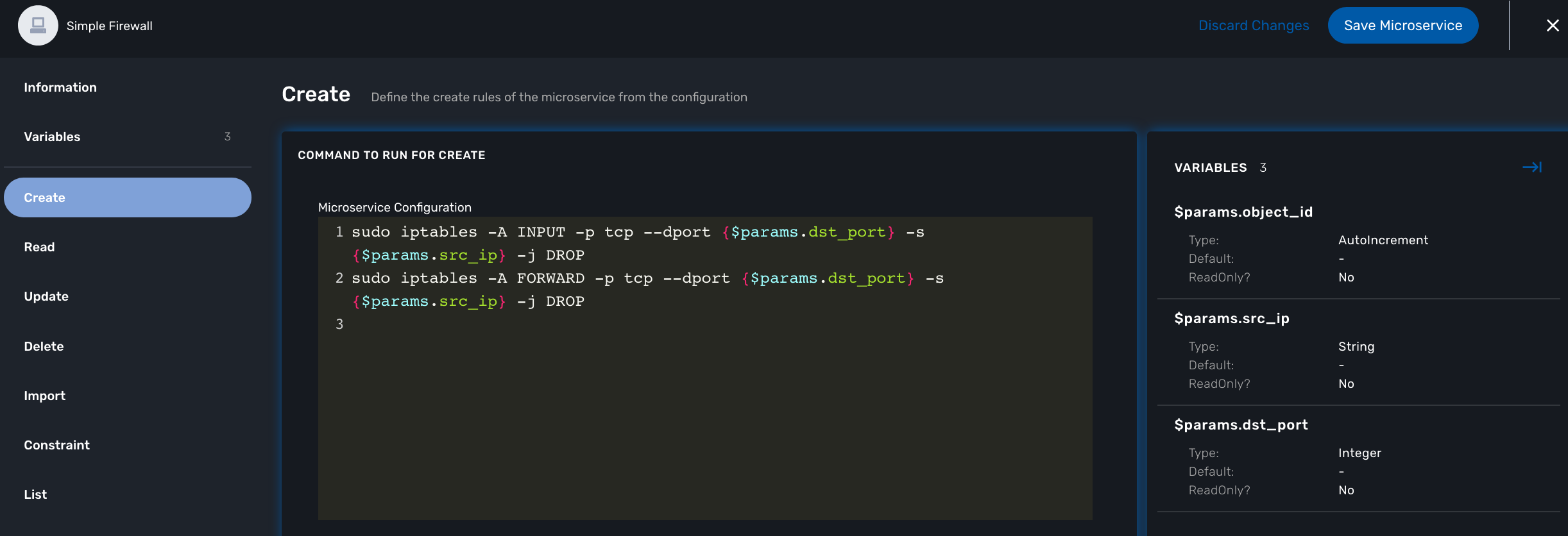

The CLI commands to create iptables rules that block a port and a source IP are:

sudo iptables -A INPUT -p tcp --dport <PORT TO BLOCK> -s <IP TO BLOCK> -j DROP

sudo iptables -A FORWARD -p tcp --dport <PORT TO BLOCK> -s <IP TO BLOCK> -j DROP

This is how it would be implemented in the Create function of the microservice:

sudo iptables -A INPUT -p tcp --dport {$params.dst_port} -s {$params.src_ip} -j DROP

sudo iptables -A FORWARD -p tcp --dport {$params.dst_port} -s {$params.src_ip} -j DROP

As you can see, the parameters are prefixed with $params., which is why the variable editor section automatically adds $params. to the variable.

The implementation of the Update will be similar and will of course depend on the CLI syntax.

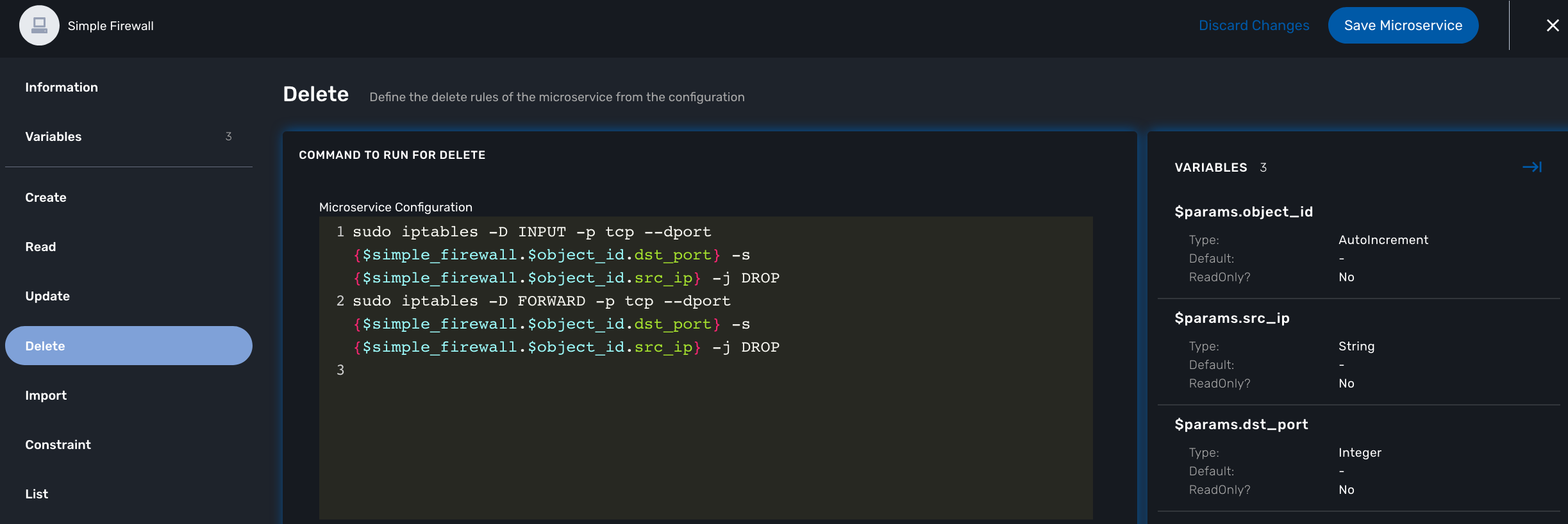

Delete

The deletion of the iptables INPUT and FORWARD rules is executed with the CLI commands below:

sudo iptables -D INPUT -p tcp --dport <PORT TO BLOCK> -s <IP TO BLOCK> -j DROP

sudo iptables -D FORWARD -p tcp --dport <PORT TO BLOCK> -s <IP TO BLOCK> -j DROP

This will be implemented as:

sudo iptables -D INPUT -p tcp --dport {$simple_firewall.$object_id.dst_port} -s {$simple_firewall.$object_id.src_ip} -j DROP

sudo iptables -D FORWARD -p tcp --dport {$simple_firewall.$object_id.dst_port} -s {$simple_firewall.$object_id.src_ip} -j DROP

The syntax {$simple_firewall.$object_id.dst_port} provides a way to access the Microservice variable values in the MSactivator™ configuration database.

The convention is as follows:

{$<MICROSERVICE NAME>.$object_id.<VARIABLE NAME>}

In our case:

-

MICROSERVICE NAME ⇒ simple_firewall

-

VARIABLE NAME ⇒ dst_port

-

MICROSERVICE NAME is the name of the Microservice file without the .xml extension.
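The naming convention above can be illustrated with a short Python sketch that expands such references from a nested dictionary. The dictionary layout (microservice name, then object_id, then variable name) and the resolve() helper are assumptions made for illustration; they are not the MSactivator™ implementation.

```python
import re


def resolve(template, object_id, database):
    """Expand {$<microservice>.$object_id.<variable>} using stored instance values."""
    pattern = re.compile(r"\{\$(\w+)\.\$object_id\.(\w+)\}")
    return pattern.sub(
        lambda m: str(database[m.group(1)][object_id][m.group(2)]),
        template,
    )


# Assumed stored instance of the simple_firewall microservice with object_id "3".
database = {"simple_firewall": {"3": {"dst_port": "8080", "src_ip": "10.0.0.5"}}}
template = (
    "sudo iptables -D INPUT -p tcp "
    "--dport {$simple_firewall.$object_id.dst_port} "
    "-s {$simple_firewall.$object_id.src_ip} -j DROP"
)
```

With these sample values, `resolve(template, "3", database)` produces the delete command for port 8080 and source 10.0.0.5.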

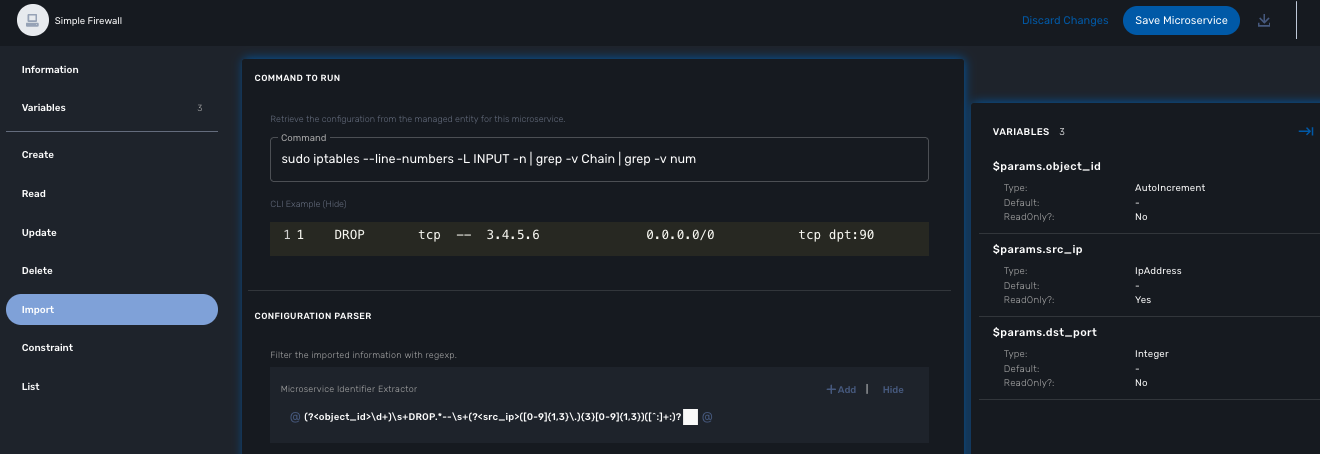

The function Import

This regex extracts the firewall parameters and stores them in the database:

@(?<object_id>\d+) DROP tcp -- (?<src_ip>([0-9]{1,3}\.){3}[0-9]{1,3})[^:]+:(?<dst_port>\d+)@

The variable object_id is a mandatory parameter and is used to identify the microservice instance in the database.
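To try the pattern outside the platform, the sketch below ports it to Python, which requires the (?P<name>...) group syntax and no @ delimiters. The sample line is an assumption modelled on the output of `iptables -L INPUT -n --line-numbers`; because that output pads columns with several spaces, the sketch collapses whitespace before matching.

```python
import re

# The pattern from the text, rewritten with Python's named-group syntax.
RULE = re.compile(
    r"(?P<object_id>\d+) DROP tcp -- "
    r"(?P<src_ip>([0-9]{1,3}\.){3}[0-9]{1,3})[^:]+:(?P<dst_port>\d+)"
)


def parse_rule(line):
    """Return the extracted variables for one rule line, or None if it does not match."""
    normalized = " ".join(line.split())  # collapse column padding to single spaces
    match = RULE.search(normalized)
    if match is None:
        return None
    return {name: match.group(name) for name in ("object_id", "src_ip", "dst_port")}


# Assumed sample line; real output may differ between iptables versions.
sample = "1    DROP       tcp  --  10.0.0.5             0.0.0.0/0            tcp dpt:8080"
```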

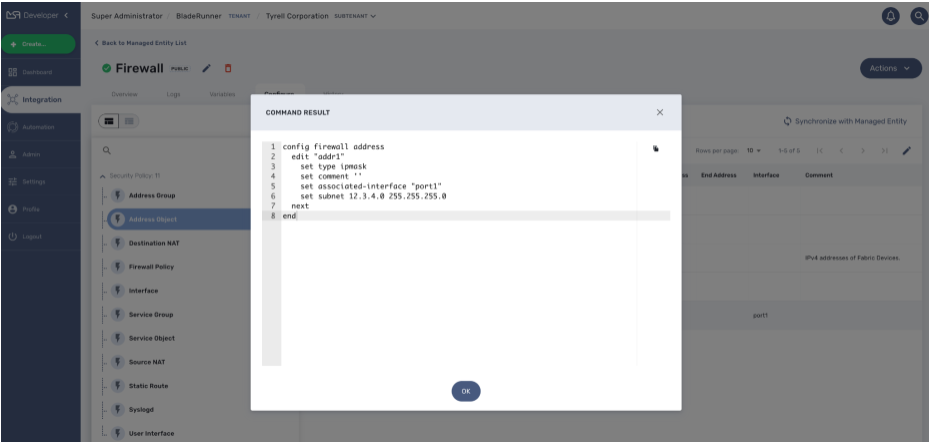

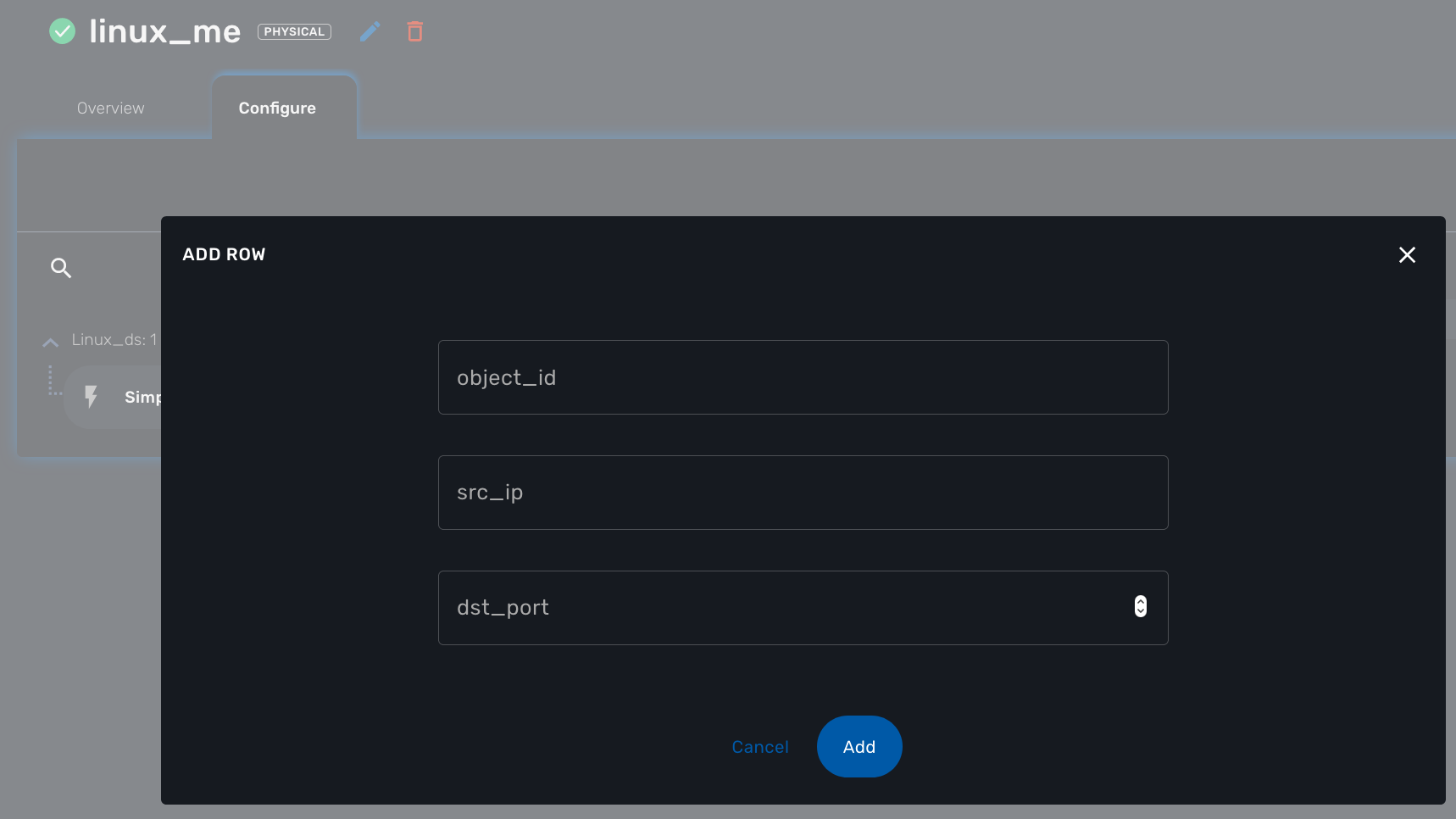

Testing the microservice

The Microservice is ready to be tested.

Make sure that you can add and delete a policy rule, that it’s reflected on the Linux firewall, and that the parameters are also properly synchronised after a call to Create or Delete.

You can also add some iptables rules manually on the Linux CLI and run a configuration synchronization to make sure that your manual changes are properly imported.

Import function: tips and examples

Below you’ll find some examples of CLI-based configurations and the regexes that can be used to extract the variables.

These are only provided as examples and you may have to modify them to match your needs.

To help with testing and validating your regular expression, there are many online tools. We, at UBiqube, usually use this one: https://regexr.com/3bhgg

Example 1 : Fortigate, get the syslogd3 config

CLI command: show full-configuration log syslogd3 setting

result:

config log syslogd3 setting

set status enable

set server "91.167.210.90"

set mode udp

set port 514

set facility local7

set source-ip ''

set format default

end

Here is the Import function implementation to extract the object_id, the status, the server IP and the port.

| config | regex | instance key | value |
|---|---|---|---|
| config log syslogd3 setting | config log (?<object_id>\S+) setting | syslogd.syslogd3.object_id | syslogd3 |
| set status enable | \s*set status (?<syslogd3_status>\S+) | syslogd.syslogd3.syslogd3_status | enable |
| set port 514 | \s*set port (?<syslogd3_port>\d+) | syslogd.syslogd3.syslogd3_port | 514 |
| set server "91.167.210.90" | \s*set server "(?<syslogd3_server_ip>[^"]+)" | syslogd.syslogd3.syslogd3_server_ip | 91.167.210.90 |
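The expressions can be checked against the sample configuration with a few lines of Python. This sketch only verifies the patterns; the extract() helper is hypothetical and the named groups use Python's (?P<name>...) syntax instead of (?<name>...).

```python
import re

CONFIG = """config log syslogd3 setting
    set status enable
    set server "91.167.210.90"
    set mode udp
    set port 514
    set facility local7
    set source-ip ''
    set format default
end"""

PATTERNS = [
    r"config log (?P<object_id>\S+) setting",
    r"\s*set status (?P<syslogd3_status>\S+)",
    r"\s*set port (?P<syslogd3_port>\d+)",
    r'\s*set server "(?P<syslogd3_server_ip>[^"]+)"',
]


def extract(config):
    """Apply each pattern to the configuration and collect the named groups."""
    values = {}
    for pattern in PATTERNS:
        match = re.search(pattern, config)
        if match:
            values.update(match.groupdict())
    return values
```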

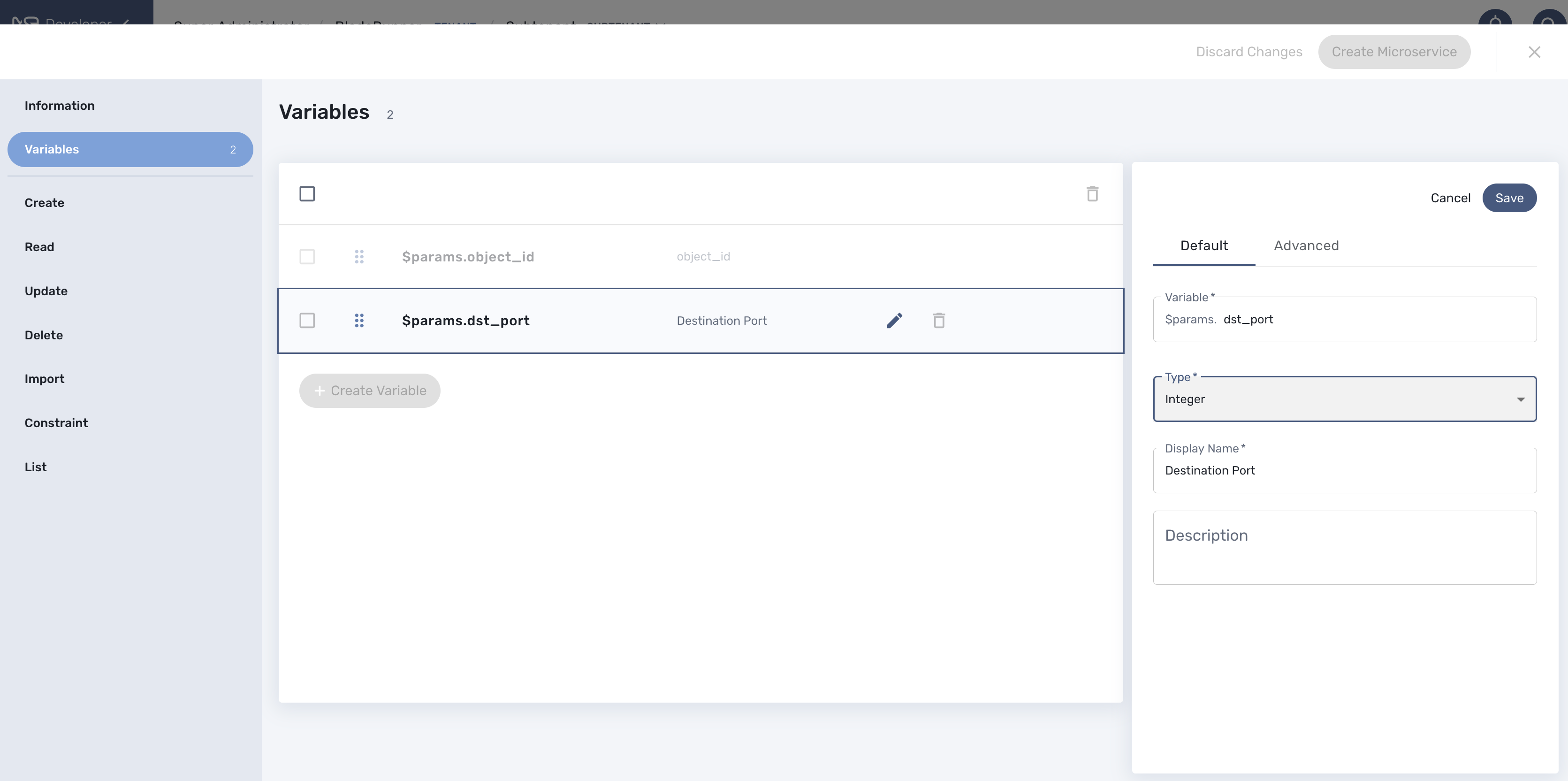

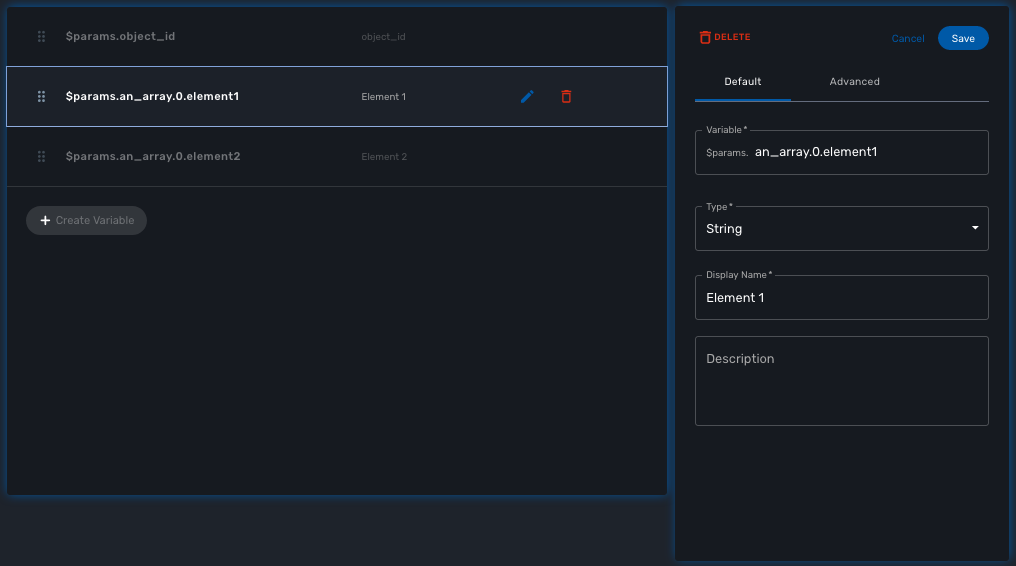

Microservice Variables

Variables are usually defined to hold the parameters to be passed to a microservice. For instance, a firewall policy needs variables for the port and the IP address to block. Variables can also be used to display user information that is not meant to be used for configuring the managed entity.

Setting variables is done from the section "Variables" on the microservice editor screen.

When you create a new microservice, the variable object_id, which is mandatory, will be set and cannot be deleted. It can be edited to set its type and other advanced properties.

All variables are referenced with the prefix $params, which is automatically set in the variable editor screen. When a variable has to be referenced in one of the microservice functions, use the syntax {$params.your_variable} (see below for more examples).

By default the type of a variable is String but other types are supported such as Integer, Boolean, Password, IpAddress, ObjectRef,…

Overview

The type of a variable affects how it is rendered in the end-user form used to create or update a microservice instance.

For instance, the type boolean will render the variable with true/false radio buttons.

Default settings

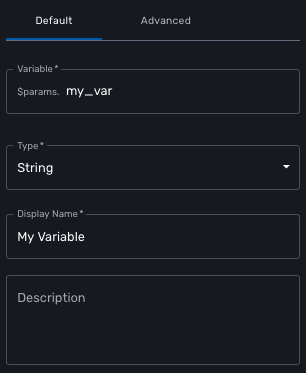

When creating or editing a variable, some information needs to be provided in the "Default" section.

Variable

Name of the variable to use in the implementation of the microservice or when calling the REST API.

Type

The type of the variable should be one from the list below

String